Simulator-based reinforcement learning for data center cooling optimization

- We’re sharing more about the role that reinforcement learning plays in helping us optimize our data centers’ environmental controls.

- Our reinforcement learning-based approach has helped us reduce energy consumption and water usage across various weather conditions in our data centers.

- Meta is revamping its new data center design to optimize for artificial intelligence, and the same methodology will apply to future data center optimizations as well.

Efficiency is one of the key components of Meta’s approach to designing, building, and operating sustainable data centers. Besides the IT load, cooling is the primary consumer of energy and water in the data center environment. Improving the cooling efficiency helps reduce our energy use, water use, and greenhouse gas (GHG) emissions and also helps address one of the greatest challenges of all – climate change.

Most of Meta’s existing data centers use outdoor air and evaporative cooling systems to keep environmental conditions within an envelope of 65°F to 85°F (roughly 18°C to 30°C) and 13% to 80% relative humidity. Because conditioning this air consumes water and energy, optimizing the volume of supply airflow that must be conditioned is a high priority for improving operational efficiency.
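
To make the envelope concrete, here is a minimal Python sketch that checks a supply-air reading against the stated limits and converts the Fahrenheit bounds (65°F is about 18.3°C and 85°F about 29.4°C, which the text rounds). The constant and function names are illustrative assumptions, not part of any Meta system.

```python
# Operating envelope stated in the text: 65-85 °F dry-bulb, 13-80 % relative humidity.
# Names are illustrative only.
TEMP_MIN_F, TEMP_MAX_F = 65.0, 85.0
RH_MIN_PCT, RH_MAX_PCT = 13.0, 80.0


def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert a temperature from degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def within_envelope(temp_f: float, rh_pct: float) -> bool:
    """Return True if a supply-air reading lies inside the temperature/RH envelope."""
    return TEMP_MIN_F <= temp_f <= TEMP_MAX_F and RH_MIN_PCT <= rh_pct <= RH_MAX_PCT


if __name__ == "__main__":
    print(round(fahrenheit_to_celsius(TEMP_MIN_F), 1))  # 18.3
    print(round(fahrenheit_to_celsius(TEMP_MAX_F), 1))  # 29.4
    print(within_envelope(72.0, 45.0))  # True
    print(within_envelope(88.0, 45.0))  # False
```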

Since 2021, we have been using AI to optimize the volume of air supplied to our data centers for cooling. Using simulator-based reinforcement learning, we have reduced supply fan energy consumption in one of our pilot regions by 20% and water usage by 4% on average across various weather conditions.

Previously, we shared how a physics-based thermal simulator helps us optimize our data centers’ environmental controls. Here, we shed more light on the role reinforcement learning plays in that solution. As Meta revamps its data center design to optimize for artificial intelligence, the same methodology will also apply to future data center optimizations aimed at improving operational efficiency.

Currently, Meta’s data centers use a two-tier penthouse design that relies on 100% outside air for cooling. As shown in Figure 1, air enters the facility through louvers in the second-floor “penthouse,” where modulating dampers regulate the volume of outside air. The air passes through a mixing room, where outdoor air that is too cold can be mixed with warm server exhaust to regulate its temperature.
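
The mixing step can be illustrated with a simplified sensible-heat balance. The sketch below assumes both airstreams have the same specific heat and ignores moisture, so it only approximates what the BMS actually does; the function name is hypothetical.

```python
def outside_air_fraction(t_outside: float, t_return: float, t_target: float) -> float:
    """Fraction of outside air (by mass) needed for the mix to reach t_target.

    Simplified sensible-heat balance (equal specific heats, no moisture):
        t_target = x * t_outside + (1 - x) * t_return
    """
    if t_outside >= t_target:
        return 1.0  # outside air is not too cold, so no exhaust is mixed in
    if t_return <= t_target:
        return 0.0  # even pure exhaust cannot reach the target (ignores minimum outside-air limits)
    # Here t_outside < t_target < t_return, so x lies strictly between 0 and 1.
    return (t_return - t_target) / (t_return - t_outside)
```

For example, with 40°F outside air and 95°F server exhaust, about 55% outside air yields a 65°F mix. When outside air is already at or above the target, the function returns 1.0: no exhaust is mixed in, and any further conditioning falls to the ECH system.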

The air then passes through a series of air filters and a misting chamber, where the evaporative cooling and humidification (ECH) system further controls temperature and humidity. Next, a fan wall pushes the air through openings in the floor that serve as an air shaft into the server area on the first floor. Hot server exhaust is contained in the hot aisle, travels through exhaust shafts, and is eventually released from the building with the help of relief fans.

Water is used mainly in two ways: evaporative cooling and humidification. Evaporative cooling converts water into vapor to lower the air temperature when outside air is too hot, while humidification maintains the humidity level when the air is too dry. As a result of this design, we believe Meta’s data centers are among the most advanced, energy-efficient, and water-efficient data centers in the world.
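
A back-of-the-envelope energy balance shows why airflow and water are linked: in direct evaporative cooling, the sensible heat removed from the air is absorbed as latent heat by the evaporating water, so for a given temperature drop the water evaporated scales linearly with airflow. The sketch below uses approximate textbook property values (assumptions), ignores humidification water and system losses, and uses a hypothetical function name.

```python
# Approximate property values near room temperature; these are assumptions for illustration.
AIR_DENSITY_KG_M3 = 1.2
AIR_CP_KJ_PER_KG_K = 1.006
LATENT_HEAT_KJ_PER_KG = 2450.0      # latent heat of vaporization of water near 20 °C
M3_PER_S_PER_CFM = 0.0004719474432  # 1 ft^3/min expressed in m^3/s


def evaporation_rate_kg_per_s(airflow_cfm: float, temp_drop_k: float) -> float:
    """Water evaporated to cool `airflow_cfm` of air by `temp_drop_k` kelvin.

    Idealized energy balance: sensible heat removed from the air
    (mass flow * cp * dT) equals latent heat absorbed by the evaporated water.
    """
    air_mass_flow = AIR_DENSITY_KG_M3 * airflow_cfm * M3_PER_S_PER_CFM  # kg/s
    sensible_heat_kw = air_mass_flow * AIR_CP_KJ_PER_KG_K * temp_drop_k  # kJ/s = kW
    return sensible_heat_kw / LATENT_HEAT_KJ_PER_KG                      # kg/s of water


if __name__ == "__main__":
    full = evaporation_rate_kg_per_s(100_000, 5.0)
    reduced = evaporation_rate_kg_per_s(80_000, 5.0)
    print(f"{full:.3f} kg/s vs {reduced:.3f} kg/s")  # 20% less air, 20% less water
```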

In order to supply air within the defined operating envelope, the penthouse relies on the building management system (BMS) to monitor and control different components of the mechanical system. This system performs the task of conditioning the intake air from outside by mixing, humidifying/dehumidifying, evaporative cooling, or a combination of these operations.

Three major control loops adjust the supply air setpoints: temperature, humidity, and airflow. The airflow setpoint is typically calculated from a small set of input variables, such as current IT load, cold aisle temperature, and the differential pressure between the cold aisle and hot aisle. The control logic is often simple and linear, but the underlying behavior is very difficult to model accurately, because these values are coupled across different locations in the data center and depend heavily on complex local boundary conditions. At the same time, airflow largely dictates the energy used by the supply fan arrays, as well as water consumption whenever cooling or humidification is required. Because the temperature and humidity boundaries of the operating envelope are fixed, optimizing the airflow setpoint offers the greatest opportunity to further improve cooling efficiency.
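
For contrast, a conventional airflow rule of the kind described above might look like the following sketch. The structure (a load term plus corrections for cold aisle temperature and pressure shortfall) and every coefficient are hypothetical placeholders, not Meta's BMS logic.

```python
from dataclasses import dataclass


@dataclass
class BaselineAirflowRule:
    """Hypothetical linear airflow rule; every coefficient is a made-up placeholder."""
    cfm_per_kw: float = 120.0          # airflow per kW of IT load
    cfm_per_deg_f: float = 2_000.0     # extra airflow per °F above the cold aisle target
    cfm_per_inwc: float = 50_000.0     # extra airflow per in. w.c. of pressure shortfall
    cold_aisle_target_f: float = 80.0
    dp_target_inwc: float = 0.02       # desired cold-to-hot aisle differential pressure
    min_cfm: float = 50_000.0          # floor to keep the fans above minimum speed

    def setpoint(self, it_load_kw: float, cold_aisle_f: float, dp_inwc: float) -> float:
        """Supply airflow setpoint (CFM) from IT load, cold aisle temperature, and pressure."""
        cfm = self.cfm_per_kw * it_load_kw
        cfm += self.cfm_per_deg_f * max(0.0, cold_aisle_f - self.cold_aisle_target_f)
        cfm += self.cfm_per_inwc * max(0.0, self.dp_target_inwc - dp_inwc)
        return max(self.min_cfm, cfm)
```

Each term reacts independently to a single input, which is the limitation the paragraph above describes: in a real data hall the inputs are coupled and vary by location, which a fixed linear rule cannot capture.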

Reinforcement learning (RL) is well suited to modeling control systems as sequential decision processes. An RL agent decides which action to take in each state; a transition model then determines the next state, and the environment continually returns feedback in the form of a reward. Over time, the agent learns a policy (typically parameterized by a deep neural network) that maximizes the accumulated reward. Data center cooling control maps naturally onto this paradigm.
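
The agent-environment loop can be written generically. In this minimal sketch, `policy` picks an action from a state and `step` stands in for the environment, returning the next state and a reward. The discount factor `gamma` is a common RL convention assumed here; the text only speaks of accumulated reward.

```python
from typing import Callable, Tuple, TypeVar

State = TypeVar("State")
Action = TypeVar("Action")


def rollout(policy: Callable[[State], Action],
            step: Callable[[State, Action], Tuple[State, float]],
            initial_state: State, horizon: int, gamma: float = 0.99) -> float:
    """Run the agent-environment loop and return the discounted accumulated reward."""
    state, total, discount = initial_state, 0.0, 1.0
    for _ in range(horizon):
        action = policy(state)               # agent: choose an action for this state
        state, reward = step(state, action)  # environment: transition plus reward feedback
        total += discount * reward
        discount *= gamma
    return total
```

For a toy environment that always pays a reward of 1.0, `rollout(lambda s: 1, lambda s, a: (s + a, 1.0), 0, 3, 0.5)` returns 1.0 + 0.5 + 0.25 = 1.75. Learning a policy means finding the `policy` that makes this value as large as possible over the states the agent encounters.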

At any given time, the state of a data center can be represented by a set of environmental variables monitored by many different sensors for outside air, supply air, the cold aisle, and the hot aisle, plus IT load (i.e., power consumption by servers), among others. The action is to control setpoints, for example, the supply airflow setpoint that determines how fast the supply fans run to meet demand. The policy is the function mapping the state space to the action space (i.e., determining the appropriate airflow setpoint based on current conditions). The task, then, is to use the historical data we have collected from thousands of sensors in our data centers, augmented with simulated data for plausible conditions we have not yet experienced, to train a better policy model that yields a better reward in terms of energy or water usage efficiency.
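
Concretely, the state can be a record of sensor readings and the policy a function from that record to an airflow setpoint. The sketch below uses a linear policy clipped to a fan range purely for readability; the text says the real policy is typically a deep neural network, and the field names, weights, and limits here are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataCenterState:
    """A few of the readings the text lists; field names are illustrative."""
    outside_air_temp_f: float
    supply_air_temp_f: float
    cold_aisle_temp_f: float
    hot_aisle_temp_f: float
    aisle_dp_inwc: float  # cold aisle minus hot aisle pressure, inches of water column
    it_load_kw: float


@dataclass
class LinearAirflowPolicy:
    """Maps a state to an airflow setpoint (CFM). In practice the weights would be
    learned; these defaults and the clipping range are hypothetical placeholders."""
    w_load: float = 100.0
    w_supply_temp: float = 500.0
    bias: float = -20_000.0
    min_cfm: float = 40_000.0
    max_cfm: float = 200_000.0

    def __call__(self, s: DataCenterState) -> float:
        raw = self.bias + self.w_load * s.it_load_kw + self.w_supply_temp * s.supply_air_temp_f
        return min(self.max_cfm, max(self.min_cfm, raw))  # keep within fan limits
```

With a 1,000 kW load and 70°F supply air, the placeholder weights give -20,000 + 100,000 + 35,000 = 115,000 CFM.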

The idea of using AI for data center cooling optimization is not new. Various approaches have been reported, such as transforming cooling optimization via deep reinforcement learning and data center cooling using model-predictive control.

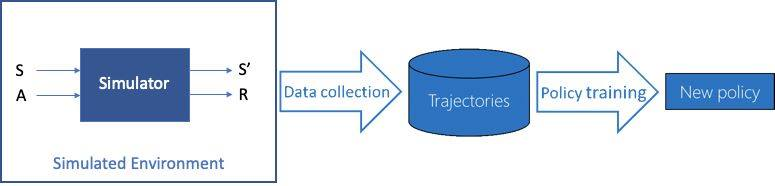

However, applying a control policy determined by an online RL model could introduce risks, including breaches of service requirements and even thermal safety issues. To address this challenge, we adopted an offline, simulator-based RL approach. As illustrated in Figure 2, our RL agent operates in a simulated environment, starting from real historical observations, S. It then explores the action space: for each sampled action, A, the simulator predicts the anticipated new state, S’, and reward, R. The agent collects the (S, A) pairs with the best rewards into a new training data set, which is used to update the parameterized policy model.
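
The loop in Figure 2 can be sketched in a few dozen lines, under loud assumptions: the state is only IT load, a closed-form sensible-heat formula with a hypothetical cubic fan curve replaces the physics-based simulator, exploration is plain random sampling, and the policy is a straight line fit by least squares rather than a deep neural network. What it does mirror is the structure described above: start from historical states S, sample actions A, score each with the simulator's reward R, keep the best (S, A) pairs, and refit the policy on them.

```python
import random
from typing import List, Tuple

# Toy stand-ins, NOT Meta's simulator: the state is IT load (kW), the action is airflow (CFM).
BTU_PER_HR_PER_KW = 3412.0  # 1 kW is about 3412 BTU/h
SENSIBLE_FACTOR = 1.08      # standard-air constant in Q[BTU/h] = 1.08 * CFM * dT[°F]
MAX_RISE_F = 25.0           # hypothetical limit on air temperature rise across servers
VIOLATION_PENALTY = 1e6     # large enough that any spec breach loses to any compliant action


def toy_simulator(it_load_kw: float, airflow_cfm: float) -> Tuple[float, float]:
    """Predict (air temperature rise in °F, reward) for one state-action pair."""
    rise_f = it_load_kw * BTU_PER_HR_PER_KW / (SENSIBLE_FACTOR * airflow_cfm)
    fan_kw = 100.0 * (airflow_cfm / 100_000.0) ** 3  # hypothetical cubic fan curve
    penalty = VIOLATION_PENALTY if rise_f > MAX_RISE_F else 0.0
    return rise_f, -fan_kw - penalty


def best_action(it_load_kw: float, rng: random.Random, n_samples: int = 200) -> float:
    """Explore the action space: sample setpoints, simulate each, keep the best one."""
    candidates = [rng.uniform(20_000.0, 300_000.0) for _ in range(n_samples)]
    return max(candidates, key=lambda cfm: toy_simulator(it_load_kw, cfm)[1])


def fit_linear_policy(pairs: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Ordinary least squares for airflow = slope * load + intercept."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def train_policy(seed: int = 0, n_states: int = 50) -> Tuple[float, float]:
    """One round of offline improvement: historical S -> best A -> refit the policy."""
    rng = random.Random(seed)
    historical_loads = [rng.uniform(500.0, 1500.0) for _ in range(n_states)]  # stand-in for S
    training_set = [(s, best_action(s, rng)) for s in historical_loads]
    return fit_linear_policy(training_set)


if __name__ == "__main__":
    slope, intercept = train_policy()
    print(f"learned policy: airflow ≈ {slope:.1f} * load_kw + {intercept:.0f} CFM")
```

Because the toy reward makes every spec violation worse than any compliant action, the kept actions sit just above the minimum airflow that satisfies the temperature limit, and the fitted slope lands near 3412 / (1.08 × 25) ≈ 126 CFM per kW.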

Our simulator is a physics-based model of building energy use that takes as inputs time series such as weather data, IT load, and setpoint schedules. The model is built with data center building parameters, including geometry, construction materials, HVAC, system configurations, component efficiencies, and control strategies. It uses differential equations to output the dynamic system response, such as the thermal load and resulting energy use, along with related metrics like cold aisle temperature and differential pressure profiles.
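
To illustrate what it means for a simulator to use differential equations to produce a dynamic response, here is a deliberately tiny one-zone model, not Meta's simulator: a single well-mixed air volume with heat capacity C is heated by the IT load and cooled by supply air, C dT/dt = P_IT - ρ·c_p·Q·(T - T_supply), integrated with forward Euler. The heat capacity, time step, and property values are assumptions, and the model says nothing about differential pressure.

```python
from typing import List

AIR_DENSITY = 1.2  # kg/m^3, approximate
AIR_CP = 1006.0    # J/(kg*K), approximate specific heat of air


def simulate_zone_temperature(t_supply_c: float, it_load_w: float, airflow_m3_s: float,
                              t_initial_c: float, duration_s: float,
                              heat_capacity_j_per_k: float = 5.0e7,
                              dt_s: float = 10.0) -> List[float]:
    """Integrate C dT/dt = P_IT - rho * cp * Q * (T - T_supply) with forward Euler.

    Returns the zone temperature (°C) at every step, starting with t_initial_c.
    The default heat capacity is a hypothetical lumped value for air plus equipment.
    """
    conductance = AIR_DENSITY * AIR_CP * airflow_m3_s  # W/K carried away by the supply air
    temps = [t_initial_c]
    t = t_initial_c
    for _ in range(int(duration_s / dt_s)):
        dT_dt = (it_load_w - conductance * (t - t_supply_c)) / heat_capacity_j_per_k
        t += dT_dt * dt_s
        temps.append(t)
    return temps
```

With 22°C supply air, a 1 MW load, and 50 m³/s of airflow, the zone settles at T_supply + P_IT/(ρ·c_p·Q), about 16.6 K above supply, with a time constant C/(ρ·c_p·Q) of roughly 14 minutes; the 10-second step is far below that, which keeps forward Euler stable.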

The simulator plays a very important role here, since our goal is to optimize energy and water usage while keeping data center conditions within spec so that hardware performance isn’t affected. More specifically, we want to keep the cold aisle temperature rise below a certain threshold, or maintain positive pressurization from the cold aisle to the hot aisle, to minimize the parasitic heat caused by recirculation.
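
One way to encode these goals is a reward that charges for fan energy and water and adds a large penalty when a thermal constraint is violated. In this hypothetical sketch both conditions from the text are enforced (the text presents them as alternatives, so a real reward might use only one), and the weights, threshold, and units are placeholders.

```python
def reward(fan_kwh: float, water_liters: float, cold_aisle_rise_f: float,
           aisle_dp_inwc: float, max_rise_f: float = 2.0,
           water_weight: float = 0.5, violation_penalty: float = 1.0e3) -> float:
    """Hypothetical reward: negative resource cost minus penalties for spec violations.

    cold_aisle_rise_f: cold aisle temperature minus supply air temperature (°F).
    aisle_dp_inwc: cold aisle pressure minus hot aisle pressure (in. w.c.);
    a positive value means air is pushed from the cold aisle toward the hot aisle.
    """
    cost = fan_kwh + water_weight * water_liters
    violations = 0
    if cold_aisle_rise_f > max_rise_f:
        violations += 1  # recirculated exhaust is warming the cold aisle
    if aisle_dp_inwc <= 0.0:
        violations += 1  # no positive pressurization, so hot air can flow back
    return -cost - violation_penalty * violations
```

Making the penalty much larger than any plausible resource cost means the agent never trades a spec violation for energy or water savings.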

Additionally, the physics-based simulator lets us train the RL model on any scenario we can simulate, not only those present in the historical data. This improves reliability during outlier events and allows rapid deployment in newly commissioned data centers.

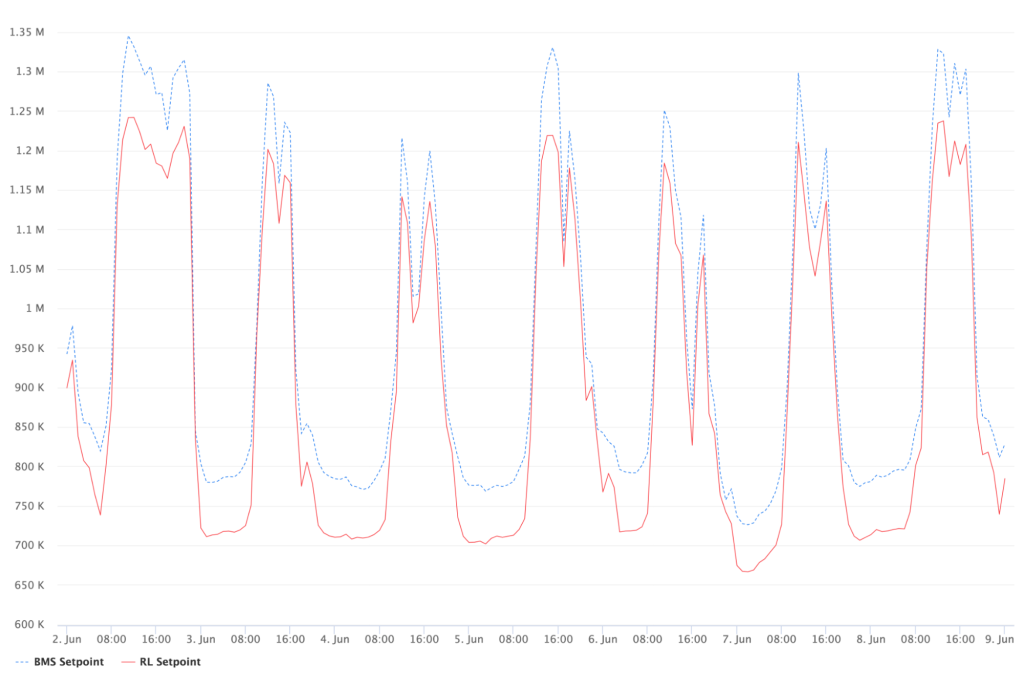

In 2021, we started a pilot in one of Meta’s data center regions, with the RL model directly controlling the supply airflow setpoint. For illustration, Figure 3 compares the new setpoint (red line) with the original BMS setpoint (dotted blue line), in cubic feet per minute (CFM), over one week.

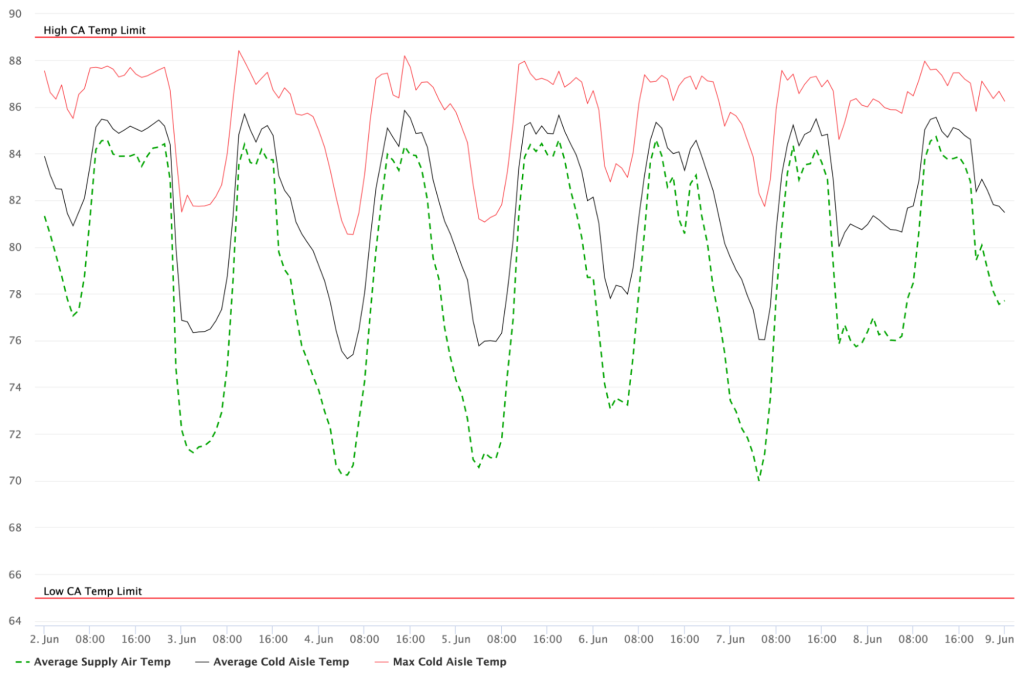

The fluctuation is driven mainly by the supply air temperature and by server load cycles at different times of day. More importantly, as Figure 4 shows, data center temperatures never went out of spec under the reduced airflow, judged by both the average and the maximum cold aisle temperature plotted against the supply air temperature.

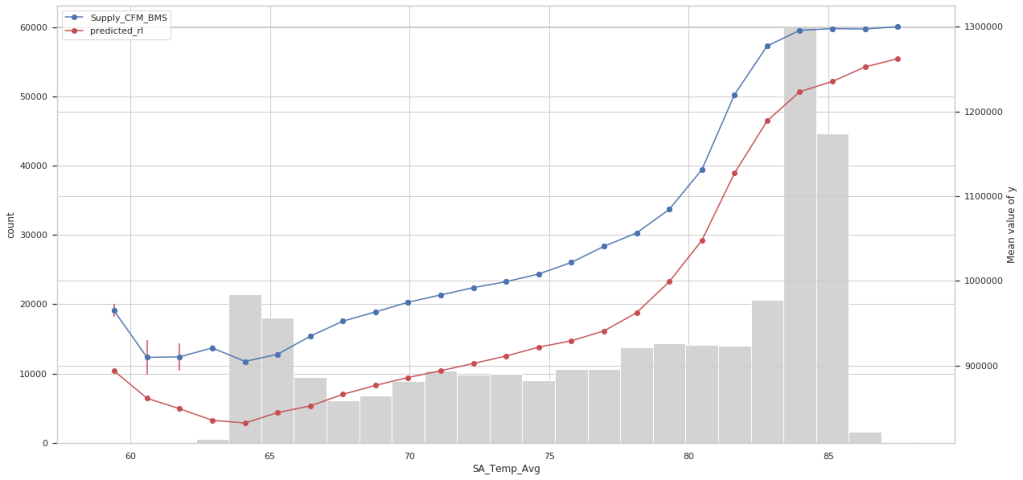

As the univariate chart in Figure 5 shows, CFM savings vary with supply air temperature. These savings convert directly into reduced supply fan energy. Under hot and dry conditions, when evaporative cooling or humidification is required, using less air also means using less water. Over the past couple of years of the pilot, we reduced supply fan energy consumption by 20% and water usage by 4% on average across various weather conditions.
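
One common way to convert airflow savings into fan energy is the idealized fan affinity law, under which fan power scales with the cube of airflow. The text does not say how Meta performs this conversion, and real fan arrays deviate from the ideal curve, so treat this sketch (with a hypothetical rated power and design airflow) as an approximation.

```python
from typing import Sequence


def fan_power_ratio(airflow_ratio: float) -> float:
    """Idealized fan affinity law: power scales with the cube of airflow."""
    return airflow_ratio ** 3


def fan_energy_kwh(setpoints_cfm: Sequence[float], design_cfm: float,
                   rated_kw: float, hours_per_step: float) -> float:
    """Fan energy for a series of airflow setpoints, each held for hours_per_step.

    design_cfm and rated_kw describe the fan array at its design point (hypothetical values).
    """
    return sum(rated_kw * fan_power_ratio(cfm / design_cfm) * hours_per_step
               for cfm in setpoints_cfm)
```

Because of the cubic relationship, a 10% airflow reduction corresponds to roughly 27% less fan power under the ideal law, so the percentage fan energy savings can exceed the percentage airflow reduction. Comparing `fan_energy_kwh` for the RL and BMS setpoint series over the same period gives an estimated savings fraction.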

This effort has opened the door to transforming how our data centers operate. By introducing automated predictions and continuous optimization of environmental conditions in our data centers, we can bend the cost curve and reduce labor-intensive tasks.

Meta is breaking ground on new types of data centers that are designed to optimize for artificial intelligence. We plan to apply the same methodology presented here to our future data centers at the design phase to help ensure they’re optimized for sustainability from day one of their operations.

We’re also currently rolling out our RL approach to data center cooling across our existing data centers. Over the next couple of years, we expect to achieve significant energy and water savings that contribute to Meta’s long-term sustainability goals.

We would like to thank our partners in IDC Facility Operations (Butch Howard, Randy Ridgway, James Monahan, Jose Montes, Larame Cummings, Gerson Arteaga Ramirez, John Fabian, and many others) for their support.