Q&A: New Advances in AI-Driven Speech Recognition

Automatic speech recognition (ASR) has been intertwined with machine learning (ML) since the early 1950s, but modern artificial intelligence (AI) techniques—such as deep learning and transformer-based architectures—have revolutionized the field. As a result, automatic speech recognition has evolved from an expensive niche technology into an accessible, near-ubiquitous service. Medical, legal, and customer service providers have relied on ASR to capture accurate records for many years. Now millions of executives, content creators, and consumers also use it to take meeting notes, generate transcripts, or control smart-home devices. In 2023, the global market for speech and automatic speech recognition technology was valued at $12.6 billion—with growth expected to reach nearly $85 billion by 2032.

In this roundtable discussion, two Toptal experts explore the impact that the rapid improvement in AI technology has had on automatic speech recognition. Alessandro Pedori is an AI developer, engineer, and consultant with full-stack experience in machine learning, natural language processing (NLP), and deep neural networks who has used speech-to-text technology in applications for transcribing and extracting actionable items from voice messages, as well as a co-pilot system for group facilitation and 1:1 coaching. Necati Demir, PhD, is a computer scientist, AI engineer, and AWS Certified Machine Learning Specialist with recent experience implementing a video summarization system that utilizes state-of-the-art deep learning methods.

This conversation has been edited for clarity and length.

Exploring How Automatic Speech Recognition Works

First we delve into the details of how automatic speech recognition works under the hood, including system architectures and common algorithms, and then we discuss the trade-offs between different speech recognition systems.

What is automatic speech recognition (ASR)?

Demir: The basic functionality of ASR can be explained in just one sentence: It’s used to translate spoken words into text.

When we talk, sound waves containing layers of frequencies are produced. In ASR, we receive this audio information as input and convert it into sequences of numbers, a format that machine learning models understand. These numbers can then be converted into the required result, which is text.

Pedori: If you’ve ever heard a foreign language being spoken, it doesn’t sound like it contains separate words—it just strikes you as an unbroken wall of sound. Modern ASR systems are trained to take this wall of sound waves (in the form of wave files) and extrapolate the words from it.

Demir: Another very important thing is that the goal of automatic speech recognition is not to understand the intent of human speech itself. The goal is just to convert the data, or, in other words, to transform the speech into text. To use that data in any other way, a separate, dedicated system needs to be integrated with the ASR model.

What is voice recognition, and how is it different from ASR?

Pedori: “Voice recognition” is a rather vague term. It’s often used to mean “speaker identification,” or the verification of who is currently speaking by matching a certain voice to a specific person.

We also have voice detection, which consists of being able to tell whether a certain voice is speaking. Imagine a situation where you have an audio recording with several speakers, but the person relevant to your project is only speaking for 5% of the time. In this case, you’d first run voice detection, which is often more affordable than ASR, on the entire recording. Afterward, you’d use ASR to focus on the part of the audio recording that you need to investigate; in this example, that would be the chunks of conversation spoken by the relevant person.

The main application of voice recognition in audio transcription is called “diarization.” Let’s say we have a speaker named John. When analyzing an audio recording, diarization identifies and isolates John’s voice from other voices, segmenting the audio into sections based on who is speaking at any given moment.

Mostly, voice recognition and ASR differ in how they treat accents. In ASR, to understand the words, you generally want to ignore accents. In voice recognition, however, accents are a great asset: The stronger the accent your speaker has, the easier they are to identify.

One word of caution: Voice recognition can be a cost-effective, valuable tool to use when analyzing speech, but it has limitations. At the moment, it’s becoming increasingly easy to clone voices with the help of AI. You should probably be wary of using voice recognition in privacy-sensitive environments. For example, refrain from using voice recognition as a method of official identification.

Demir: Another limitation that might present itself is when your recording contains the voices of multiple people talking in a noisy or informal setting. Voice recognition might prove to be harder in that situation. For example, this conversation we are having would not be a prime example of clean data when compared to someone recording an e-book in a professional studio environment. This problem exists for ASR systems as well. However, if we’re talking about voice detection, wake words or simple voice commands—such as “Hey Siri”—are simpler for the software to grasp even in noisy acoustic environments.

How do ML models fit into the ASR process?

Demir: If we wished to, we could roughly split the history of speech recognition into two phases: before and after the arrival of deep learning. Before deep learning, the researcher’s task was to identify the correct features in speech. There were three steps: preprocessing the data, building a statistical model, and postprocessing the model’s output.

At the preprocessing stage, features are extracted from sound waves and converted into numbers based on handcrafted rules. After preprocessing is complete, you can fit the resulting numbers into a hidden Markov model, which will attempt to predict each word. But here’s the trick: Before deep learning, we didn’t try to predict the word itself. We tried to predict phonemes—the way a word is pronounced. Take, for instance, the word “five”: That’s one word, but the way it is pronounced sounds something like “F-AY-V.” The system would predict these phonemes and try to convert them into the correct words.

Within a hybrid system, three respective submodels take care of these three steps. The acoustic model is looking for and trying to predict phonemes. The pronunciation model takes the phonemes and predicts which words they should match up to. Finally, the language model—usually an n-gram model—makes another prediction by grouping text into chunks to ensure the text is a statistical match. For example, “a bear in the woods” is likely to be the correct grouping of words, as opposed to the phrase “a bare in the woods,” which is statistically less probable.

Which AI-powered automatic speech recognition tools do you find useful?

Demir: When it comes to ASR tools, OpenAI’s Whisper model is widely recognized for its reliability. This single model is capable of accurately transcribing a variety of speech patterns and accents, even in noisy environments. Hugging Face, a company and open-source community that contributes greatly to machine learning, provides a variety of open-source machine learning models for speech recognition, one of which is Distil-Whisper. This model is a standout example of a high-quality system implemented with deep neural networks. Distil-Whisper is based on the Whisper model, and it maintains robust performance despite being considerably lighter. It’s a great choice for developers working with smaller datasets.

Pedori: Hugging Face has more than 16,000 models dealing with some sort of automatic speech recognition. And Whisper itself can be run in real time, locally, and even as an API. You can even run Whisper in WebAssembly.

ASR evolved from a very tricky system to implement into something simpler—more like optical character recognition (OCR). I don’t want to say it’s a walk in the park, but a developer can now expect at least 95% precision in their results unless the audio is very noisy or the speakers have very heavy accents. And unless you have significantly constrained resources, most ASR requirements can be effectively addressed with deep learning. The current transformer-based models are dominating the industry.

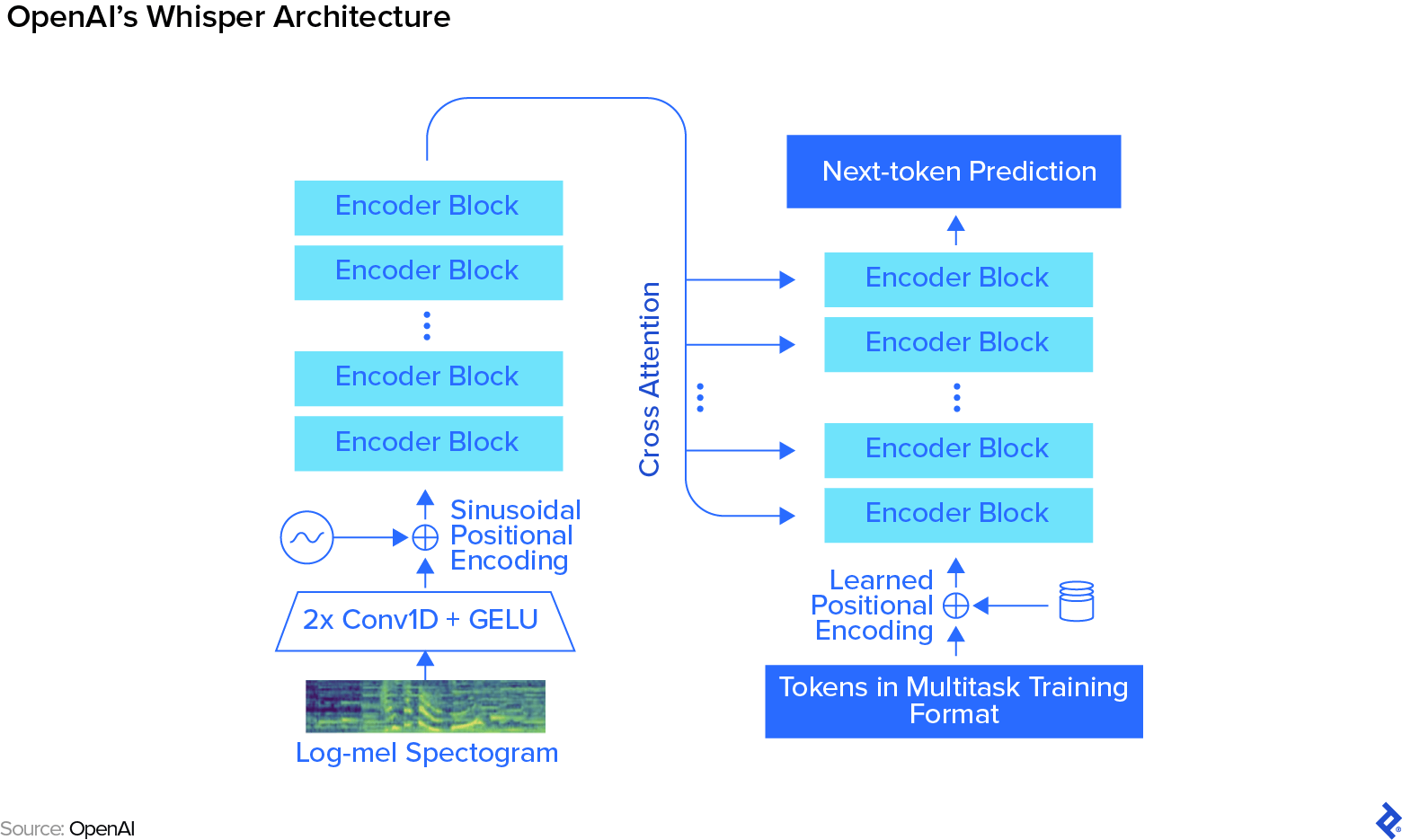

In the deep learning era, however, the process is “end to end”: We input sound waves at one end, and receive the words—technically, “tokens”—at the other end. In an end-to-end model like Whisper, feature extraction is not done anymore. We are just getting the waveform from the acoustic analysis, feeding it to the model, and expecting the model to extract the acoustic features from the data itself, later making a prediction based on these results.

Pedori: With an end-to-end model, everything happens as if it’s inside a magic box that was trained by being fed a massive amount of training data; in Whisper’s case, it’s 680,000 hours of audio. First, the audio data is converted into a log-mel spectrogram—a diagram that represents the audio’s frequency spectrum over time—using acoustic processing. That’s the hardest part for the developer—everything else happens inside the neural network. At its core, it’s a transformer model with different blocks of attention.

Most people just use the model as a black box that you can pass audio to, knowing that you’ll receive words on the other end. It usually performs very well, however, being a black box, it can be a little harder to correct the system when it doesn’t, requiring extra tinkering that can be time-consuming.

What AI algorithms do you prefer for your work with ASR these days?

Pedori: Whisper from OpenAI and NeMo from Nvidia are both transformer-based models that are among the most popular tools on the market. This type of algorithm has revolutionized the field, making natural language processing a lot more agile. In the past, deep learning techniques for ASR involved long short-term memory (LSTM) recurrent neural networks, as well as convolutional neural networks. These performed admirably, however, transformers are state of the art. They’re very easy to parallelize, so you can feed them a huge amount of data and they will scale seamlessly.

Demir: That’s an important reason why we’ve primarily focused on Whisper in this conversation. It’s not the only transformer available, of course. What makes Whisper different is the way it handles huge amounts of imperfect, weakly supervised data; for Whisper, 680,000 hours is the equivalent of 78 years worth of spoken voice, all of it fed into the system at once. Once you have the model trained, you can improve its accuracy by loading pre-trained weights and fine-tuning the network. Fine-tuning is the process of further training a model depending on what behavior you want to see as a result—for example, you could customize your model to enhance precision for terminologies within a certain sector, or optimize it for a specific language.

What features are crucial for high-performing automatic speech recognition systems?

Pedori: Given a specific ASR system, the main “knobs” we have to adjust are word error rate (WER) together with the size and speed of the model. In general, a bigger model will be slower than a smaller one. This is practically the same for every machine learning system. Rarely is a system accurate, inexpensive, and fast; you can typically achieve two of these qualities, but seldom all three.

You can decide if you’re going to run your ASR system locally or via API, and then get the best WER you can for that configuration. Or you can define a WER for an application in advance and then try to get the model that is the best fit for the job. Sometimes, you might have to aim for “good enough,” because finding the best approach takes a lot of engineering time.

How have recent advances in AI affected these key features?

Pedori: Transformer-based models provide pre-trained blocks that are pretty “smart” and more resilient to background noise, but they are harder to control and less customizable. Overall, AI has made it much easier to implement ASR, because models like Whisper and NeMo work pretty well out of the box. By now, they can achieve almost real-time accurate transcriptions on a portable device, depending on the desired WER and the presence of accents in the speech.

Current Use Cases and the Future of ASR

Now let’s discuss the current and future applications of automatic speech recognition in various sectors, along with challenges that must be overcome.

What industries are being revolutionized by ASR?

Pedori: ASR has made it much easier to interact with devices and services via voice—unlike the more fitful experiences of yore, where it was necessary to give a verbal audio signal to instruct the system to take one step, then follow up with a second signal to tell it to take another. ASR has generally opened audio up to most natural language processing techniques. From a user or customer experience perspective, this means you can integrate speech recognition capabilities into your workflows, getting transcripts of your doctor visits or easily converting voicemails into text messages.

Audio-based automatic transcription is becoming extremely common—think of legal transcription and documentation, online courses, content creation in media and entertainment, and customer service, to name a few uses. But ASR is far more than just a transcription tool. Voice assistants are becoming part of our day-to-day lives, and security technology is advancing by integrating voice biometrics for authentication. Automatic speech recognition also supports accessibility by providing subtitles and voice user interfaces for individuals with disabilities.

Humans often like to interact via the use of their voices. Together with large language models, we can now understand a user’s voice pretty well.

What current challenges do developers face in automatic speech recognition technology, and how are they being addressed?

Pedori: If the basic end-to-end system works for your use case out of the box, you can put something together for a client in a few days or even a few hours. But the moment an end-to-end system is insufficient and you have to tinker with it, then the need to spend a couple of months collecting data and doing training runs arises. That’s primarily because the models are quite big. A solution for this hang-up is “knowledge distillation,” which is a way of removing the parts of the model that you don’t need without losing performance, also known as teacher-student training.

Demir: During distillation a new, smaller network (the “student” model) tries to learn from the original, more complex model (the “teacher”). This allows for a more nimble model that gleans information directly from the original and makes the process more affordable without loss of performance. It is comparable in a way to a researcher spending years learning about a particular topic and then teaching what they’ve learned to the students in class in a matter of hours. The student model is trained using predictions gathered from the teacher model. This training data, or “knowledge,” teaches the student model to behave in a manner similar to that of the teacher model. We optimize the student model by handing the same audio input and output to both models and then measuring the performance difference between them.

Pedori: Another technical challenge is accent independence. When Whisper was released, the first thing I did was make a Telegram bot that transcribed long audio messages, because I prefer having messages in text form rather than listening to them. The problem was that the bot’s performance varied greatly depending on whether the sender spoke English natively or as a second language. With a native English speaker, the transcription was perfect. When it was me or my international friends speaking, the bot became a little “imaginative.”

What developments in this field are you most excited about?

Demir: I’m excited to see smaller ASR models with similar performance metrics. It’d be thrilling to see something like a one-megabyte model. As we mentioned, we are “compressing” the models with distillation already. So it’ll be amazing to see how far we can go by progressively compressing a huge amount of knowledge into a few weights within the network.

Pedori: I also look forward to better diarization—better attribution of who’s speaking—because that’s a blocker in some of my projects. But the biggest thing on my wish list would be having an ASR system that can do online learning: a system that could self-teach to understand a specific accent, for example. I can’t see that happening with current architectures, though, because the training and inference—the steps where the model applies what it has learned during training—phases are very separate.

Demir: The world of machine learning seems to be very unpredictable. We’re talking about transformers right now, but that architecture did not even exist when I started my PhD in 2010. The world is changing and adapting very quickly, so it’s hard to predict what kind of new and exciting architectures might be coming up on the horizon.

The technical content presented in this article was reviewed by Nabeel Raza.