Cognitive Prompting in LLMs. Can we teach machines to think like… | by Oliver Kramer | Oct, 2024

Can we teach machines to think like humans?

Introduction

When I started to learn about AI one of the most fascinating ideas was that machines think like humans. But when taking a closer look at what AI and machine learning methods are actually doing, I was surprised there actually is a huge gap between what you can find in courses and books about how humans think, i.e., human cognition, and the way machines do. Examples of these gaps for me were: how a perceptron works, which is often referred to as “inspired by its biological pendant” and how real neurons work. Or how fuzzy logic tries to model human concepts of information and inference and how human inference actually seems to work. Or how humans cluster a cloud of points by looking at it and drawing circles around point clouds on a board and how algorithms like DBSCAN and k-means perform this task.

But now, LLMs like ChatGPT, Claude, and LLaMA have come into the spotlight. Based on billions or even trillions of these artificial neurons and mechanisms that also have an important part to play in cognition: attention (which is all you need obviously). We’ve come a long way, and meanwhile Nobel Prizes have been won to honor the early giants in this field. LLMs are insanely successful in summarizing articles, generating code, or even answering complex questions and being creative. A key point is — no doubts about it—the right prompt. The better you specify what you want from the model, the better is the outcome. Prompt engineering has become an evolving field, and it has even become a specialized job for humans (though I personally doubt the long-term future of this role). Numerous prompting strategies have been proposed: famous ones are Chain-of-thought (CoT) [2] or Tree-of-Thought (ToT) [3] that guide the language model reasoning step by step, mainly by providing the LLM steps of successful problem solving examples. But these steps are usually concrete examples and require an explicit design of a solution chain.

Other approaches try to optimize the prompting, for example with evolutionary algorithms (EAs) like PromptBreeder. Personally I think EAs are always a good idea. Very recently, a research team from Apple has shown that LLMs can easily be distracted from problem solving with different prompts [4]. As there are numerous good posts, also on TDS on CoT and prompt design (like here recently), I feel no need to recap them here in more detail.

What Is Cognitive Prompting?

Something is still missing, as there is obviously a gap to cognitive science. That all got me thinking: can we help these models “think” more like humans, and how? What if they could be guided by what cognitive science refers to as cognitive operations? For example, approaching a problem by breaking it down step by step, to filter out unnecessary information, and to recognize patterns that are present in the available information. Sounds a bit like what we do when solving difficult puzzles.

That’s where cognitive prompting comes in. Imagine the AI cannot only answer your questions but also guide itself — and you when you read its output — through complex problem-solving processes by “thinking” in structured steps.

Imagine you’re solving a math word problem. The first thing you do is probably to clarify your goal: What exactly do I need to figure out, what is the outcome we expect? Then, you break the problem into smaller steps, a promising way is to identify relevant information, and perhaps to notice patterns that help guiding your thoughts closer toward the desired solution. In this example, let’s refer to these steps as goal clarification, decomposition, filtering, and pattern recognition. They are all examples of cognitive operations (COPs) we perform instinctively (or which we are taught to follow by a teacher in the best case).

But How Does This Actually Work?

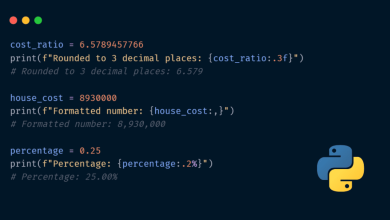

Here’s how the process unfolded. We define a sequence of COPs and ask the LLM to follow the sequence. Figure 1 shows an example of what the prompt looks like. Example COPs that turn out to be important are:

- Goal Clarification: The model first needed to restate the problem in a clear way — what exactly is it trying to solve, what is the desired outcome?

- Decomposition: Next, break the problem into manageable chunks. Instead of getting overwhelmed by all the information available, the model should focus on solving smaller parts — one at a time.

- Filtering: Ask the model to filter out unnecessary details, allowing it to focus on what really matters. This is often necessary to allow the model to put attention on the really important information.

- Pattern Recognition: Identify patterns to solve the problem efficiently. For example, if a problem involves repeated steps, ask the model to recognize a pattern and apply it.

- Integration: In the end it makes sense to synthesize all insights of the previous steps, in particular based on the last COPs and integrate them into a solution for the final answer.

These structured steps mimic the way humans solve problems — logically, step by step. There are numerous further cognitive operations and the choice which to choose, which order and how to specify them for the prompt. This certainly leaves room for further improvement.

We already extended the approach in the following way. Instead of following a static and deterministic order of COPs, we give the model the freedom to choose its own sequence of COPs based on the provided list — called reflective and self-adaptive cognitive prompting. It turns out that this approach works pretty well. In the next paragraph we compare both variants on a benchmark problem set.

What also turns out to improve the performance is adapting the COP descriptions to the specific problem domain. Figure 1, right, shows an example of a math-specific adaptation of the general COPs. They “unroll” to prompts like “Define each variable clearly” or “Solve the equations step by step”.

In practice, it makes sense to advise the model to give the final answer as a JSON string. Some LLMs do not deliver a solution, but Python code to solve the problem. In our experimental analysis, we were fair and ran the code treating the answer as correct when the Python code returns the correct result.

Example

Let’s give a short example asking LLaMA3.1 70B to solve one of the 8.5k arithmetic problems from GSM8K [5]. Figure 2 shows the request.

Figure 3 shows the model’s output leading to a correct answer. It turns out the model systematically follows the sequence of COPs — even providing a nice problem-solving explanation for humans.

How Does Cognitive Prompting Perform — Scientifically?

Now, let’s become a little more systematic by testing cognitive prompting on a typical benchmark. We tested it on a set of math problems from the GSM8K [5] dataset — basically, a collection of math questions you’d find in grade school. Again, we used Meta’s LLaMA models to see if cognitive prompting could improve their problem-solving skills, appliying LLaMA with 8 billion parameters and the much larger version with 70 billion parameters.

Figure 4 shows some results. The smaller model improved slightly with deterministic cognitive prompting. Maybe it is not big enough to handle the complexity of structured thinking. When it selects an own sequence of COPs, the win in performance is significantly.

Without cognitive prompting, the larger model scored about 87% on the math problems. When we added deterministic cognitive prompting (where the model followed a fixed sequence of cognitive steps), its score jumped to 89%. But when we allowed the model to adapt and choose the cognitive operations dynamically (self-adaptive prompting), the score shot up to 91%. Not bad for a machine getting quite general advice to reason like a human — without additional examples , right?

Why Does This Matter?

Cognitive prompting is a method that organizes these human-like cognitive operations into a structured process and uses them to help LLMs solve complex problems. In essence, it’s like giving the model a structured “thinking strategy” to follow. While earlier approaches like CoT have been helpful, cognitive prompting offers even deeper reasoning layers by incorporating a variety of cognitive operations.

This has exciting implications beyond math problems! Think about areas like decision-making, logical reasoning, or even creativity — tasks that require more than just regurgitating facts or predicting the next word in a sentence. By teaching AI to think more like us, we open the door to models that can reason through problems in ways that are closer to human cognition.

Where Do We Go From Here?

The results are promising, but this is just the beginning. Cognitive prompting could be adapted for other domains for sure, but it can also be combined with other ideas from AI As we explore more advanced versions of cognitive prompting, the next big challenge will be figuring out how to optimize it across different problem types. Who knows? Maybe one day, we’ll have AI that can tackle anything from math problems to moral dilemmas, all while thinking as logically and creatively as we do. Have fun trying out cognitive prompting on your own!

References

[1] O. Kramer, J. Baumann. Unlocking Structured Thinking in Language Models with Cognitive Prompting (submission to ICLR 2025)

[2] J. Wei, X. Wang, D. Schuurmans, M. Bosma, B. Ichter, F. Xia, E. H. Chi, Q. V. Le, and D. Zhou. Chain-of-thought prompting elicits reasoning in large language models. In S. Koyejo, S. Mohamed, A. Agarwal, D. Bel- grave, K. Cho, and A. Oh, editors, Neural Information Processing Systems (NeurIPS) Workshop, volume 35, pages 24824–24837, 2022

[3] S. Yao, D. Yu, J. Zhao, I. Shafran, T. Griffiths, Y. Cao, and K. Narasimhan. Tree of thoughts: Deliberate problem solving with large language models. In Neural Information Processing Systems (NeurIPS), volume 36, pages 11809–11822, 2023

[4] I. Mirzadeh, K. Alizadeh, H. Shahrokhi, O. Tuzel, S. Bengio, and M. Farajtabar. GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models. 2024.

[5] K. Cobbe, V. Kosaraju, M. Bavarian, M. Chen, H. Jun, L. Kaiser, M. Plap- pert, J. Tworek, J. Hilton, R. Nakano, C. Hesse, and J. Schulman. Training verifiers to solve math word problems. arXiv preprint arXiv:2110.14168, 2021.