Advanced Data Labeling Methods for Machine Learning

When developing machine learning (ML) models, the quality and granularity of labeled data have a direct impact on performance. Labeling methods encompass a wide range of techniques, from fully manual, in which subject matter experts (SMEs) label all data by hand, to fully automated, in which software tools algorithmically apply labels. Manual labeling generally yields the highest quality results but can be time-consuming and expensive, whereas automated labeling may be faster and more efficient, but often at the cost of accuracy or granularity.

In practice, hybrid approaches that combine manual and automated techniques throughout the process are generally considered the most effective. With the rise in popularity and accessibility of large language models (LLMs), there is an increasing number of ways in which software can augment and accelerate the work of human annotators. Nonetheless, it’s important to understand where and when human involvement remains necessary.

This article examines a variety of advanced data labeling methods, exploring their real-world applications and use cases. We consider the strengths and limitations of each technique across different modalities, such as text, images, videos, and audio data, and offer guidance for selecting the most appropriate techniques based on project-specific requirements.

Automated Labeling Techniques

Fully automated labeling techniques encompass a variety of methods that aim to eliminate the need for human intervention. They’re particularly beneficial in industries that handle large volumes of data and need to prioritize processing speed. For example, the e-commerce industry uses automated labeling for product categorization; in finance, automated labeling can be used for fraud detection by classifying transactional data. Although fully automated approaches are deployed in production, hybrid techniques that incorporate human verification are more common due to the complexity and variability of real-world data.

Rule-based labeling is a common automated technique that relies on a set of predefined rules or heuristics, written by domain experts, that automatically assign labels to data points based on specific criteria or patterns. This makes it particularly useful for structured data with clear, predictable patterns (e.g., text that can be matched with regular expressions).
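As a rough illustration of rule-based labeling, the sketch below applies an ordered list of regular expressions to transaction descriptions. The rules, label names, and sample strings are all made up for this example; a real rule set would come from domain experts.

```python
import re

# Hypothetical rules as (compiled pattern, label) pairs. The first match wins.
RULES = [
    (re.compile(r"\b(refund|chargeback)\b", re.IGNORECASE), "refund"),
    (re.compile(r"\b(atm|cash withdrawal)\b", re.IGNORECASE), "cash"),
    (re.compile(r"\b(uber|lyft|taxi)\b", re.IGNORECASE), "transport"),
]


def label_by_rules(text, rules=RULES, default="unlabeled"):
    """Return the label of the first rule whose pattern matches the text."""
    for pattern, label in rules:
        if pattern.search(text):
            return label
    return default


# Invented sample data for the sketch.
transactions = [
    "ATM cash withdrawal 0423",
    "UBER *TRIP HELP.UBER.COM",
    "Refund issued for order 1182",
    "Coffee shop purchase",
]
labels = [label_by_rules(t) for t in transactions]
print(labels)
```

Items that match no rule fall through to a default label, which makes it easy to route them to a human annotator or to another labeling method.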

Another popular option is clustering-based labeling, which involves grouping similar data points together using unsupervised learning algorithms, and then assigning labels to these clusters based on their shared characteristics. This technique can be useful when segmenting groups of people based on purchasing behavior or demographics.
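The sketch below shows the general shape of clustering-based labeling on a toy customer dataset. In practice you would more likely reach for a library implementation such as scikit-learn’s k-means; a tiny pure-Python k-means is used here only to keep the example dependency-free. The feature choice, the cutoff of 12 orders per year, and the cluster names are assumptions made for illustration.

```python
import math


def kmeans(points, k, iterations=20):
    """Tiny k-means: returns (centroids, cluster index per point)."""
    # Deterministic initialization for the sketch: pick points spread across the input order.
    centroids = [points[i * len(points) // k] for i in range(k)]
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assign every point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster empties out
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, assignments


# Invented data: (orders per year, average order value) for six customers.
customers = [(2, 20.0), (3, 25.0), (1, 18.0), (40, 22.0), (38, 30.0), (45, 27.0)]
centroids, assignments = kmeans(customers, k=2)

# A person (or a simple rule, as here) inspects each centroid once and names the cluster.
cluster_names = {
    c: "frequent buyer" if centroid[0] >= 12 else "occasional buyer"
    for c, centroid in enumerate(centroids)
}
labels = [cluster_names[a] for a in assignments]
print(labels)
```

The saving comes from naming each cluster once rather than labeling every data point individually.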

The use of generative models, pattern recognition, and classification techniques can assist in automated labeling, but special caution is needed when applying these methods to avoid introducing any biases or systemic errors that the new model would inherit. Generative adversarial networks (GANs) and multimodal LLMs like GPT can help create synthetic data with corresponding labels, which can augment existing labeled datasets or create new ones when labeled data is scarce. Pattern recognition and classification techniques involve training models on labeled datasets to learn patterns; the trained models can then be used to label new, unlabeled data.

For implementing automated labeling, Python is the dominant programming language, and there are several libraries, models, and frameworks that can aid in the process. TensorFlow and PyTorch are both frameworks for building deep learning models, while scikit-learn provides clustering algorithms and machine learning tools for pattern recognition and classification. For synthetic data creation, OpenAI, Google, Anthropic, and other companies in the AI (artificial intelligence) space provide APIs for their existing models (such as GPT, Gemini, and Claude, respectively). Rule-based systems can be implemented using custom scripts or platforms like Drools.

Hybrid Labeling Techniques

With traditional labeling techniques, all annotations are created manually. Hybrid labeling techniques, by contrast, blend automated systems with human expertise, which can greatly improve efficiency while preserving accuracy. We’ll cover three common methods (semi-supervised learning, active learning, and weak supervision) that can be used individually or in unison to achieve effective hybrid labeling.

Semi-supervised Learning

Semi-supervised learning (SSL) is an approach that combines a small amount of labeled data with a larger set of unlabeled data. This method is cost-effective and can improve model performance by using the unlabeled data to learn more about the structure of the underlying data. Whereas fully supervised learning can be slow and costly because every example must be labeled, and unsupervised learning offers no direct way to tie its output to the target labels, SSL strikes a balance by combining the strengths of both approaches. Because unlabeled data is cheap and easy to access, SSL has a wide range of applications across industries and use cases. Techniques include self-training, where a model labels the unlabeled data and retrains itself with high-confidence predictions, and graph-based methods that use data similarity to propagate labels.
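To make self-training more concrete, here is a minimal sketch that uses a nearest-centroid classifier as a stand-in for a real model. The classifier, the softmax-over-distances confidence score, the 0.9 threshold, and the data points are all assumptions chosen to keep the example short.

```python
import math


def fit_centroids(X, y):
    """Nearest-centroid 'model': one mean vector per class."""
    centroids = {}
    for label in set(y):
        members = [x for x, lab in zip(X, y) if lab == label]
        centroids[label] = tuple(sum(d) / len(members) for d in zip(*members))
    return centroids


def predict_with_confidence(centroids, x):
    """Return (label, confidence); confidence is a softmax over negative distances."""
    scores = {label: math.exp(-math.dist(x, c)) for label, c in centroids.items()}
    total = sum(scores.values())
    label = max(scores, key=scores.get)
    return label, scores[label] / total


def self_train(X_labeled, y_labeled, X_unlabeled, threshold=0.9, max_rounds=5):
    """Repeatedly pseudo-label confident points and retrain on the enlarged set."""
    X, y = list(X_labeled), list(y_labeled)
    pool = list(X_unlabeled)
    for _ in range(max_rounds):
        model = fit_centroids(X, y)
        confident, remaining = [], []
        for x in pool:
            label, conf = predict_with_confidence(model, x)
            (confident if conf >= threshold else remaining).append((x, label))
        if not confident:
            break  # nothing new to learn from; stop early
        for x, label in confident:
            X.append(x)
            y.append(label)
        pool = [x for x, _ in remaining]
    return fit_centroids(X, y), pool


# Invented data: two labeled points and four unlabeled ones.
model, leftover = self_train(
    X_labeled=[(0.0, 0.0), (10.0, 10.0)],
    y_labeled=["neg", "pos"],
    X_unlabeled=[(1.0, 1.0), (9.0, 9.5), (2.0, 1.5), (5.0, 5.0)],
)
print(leftover)  # points the model never became confident about
```

Points that never clear the confidence threshold stay in the unlabeled pool, which makes them natural candidates for human review.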

SSL works well in domains where manual labeling is impractical due to resource constraints. For example, image and speech recognition benefit from using SSL to handle vast amounts of data without exhaustive labeling. In natural language processing (NLP), this learning approach can aid in tasks like sentiment analysis by utilizing unlabeled text to discern linguistic patterns that would be costly to label manually. Meta has effectively utilized semi-supervised learning, specifically the self-training method, to enhance its speech recognition models. Initially, the company trained the base model using 100 hours of human-annotated audio data. It then incorporated 500 hours of unlabeled speech data, employing self-training to further boost the models’ performance.

The downside to SSL is that its success is dependent on the quality of the labeled data. Inaccuracies in this small dataset can propagate throughout the model, leading to suboptimal performance. Moreover, semi-supervised algorithms often involve intricate architectures that necessitate meticulous tuning to function correctly.

Active Learning

Active learning is a form of SSL where the model selects the most informative data points and sends them to human annotators to be labeled. This selective process is iterative, with the model querying human annotators about labels for which it has the least confidence or which are most likely to improve its performance. The technical methods used in active learning include uncertainty sampling (the model requests labels for the instances it’s least certain about); query by committee (multiple models vote on labeling, and the most contentious points are presented for annotation); and expected model change (labels are requested for data points that would most impact the model’s parameters if included in the training set).
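Two of these query strategies can be sketched in a few lines. The example below scores items by least confidence (uncertainty sampling) and by vote entropy (query by committee), then picks the top candidates for annotation. The image IDs, probabilities, and votes are invented, and expected model change is omitted because it depends on the specifics of the model being trained.

```python
import math
from collections import Counter


def least_confidence(probabilities):
    """Uncertainty score: 1 minus the probability of the top predicted class."""
    return 1.0 - max(probabilities)


def vote_entropy(votes):
    """Disagreement score for query by committee: entropy of the committee's votes."""
    counts = Counter(votes)
    total = len(votes)
    return -sum((n / total) * math.log(n / total) for n in counts.values())


def select_for_annotation(scores, budget):
    """Return the ids of the `budget` highest-scoring (most informative) items."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:budget]


# Invented class probabilities from a single model.
model_probs = {
    "xray_001": [0.98, 0.02],
    "xray_002": [0.55, 0.45],
    "xray_003": [0.70, 0.30],
    "xray_004": [0.51, 0.49],
}
uncertainty = {k: least_confidence(p) for k, p in model_probs.items()}
to_label = select_for_annotation(uncertainty, budget=2)

# Invented votes from a committee of three models.
committee_votes = {
    "xray_001": ["normal", "normal", "normal"],
    "xray_002": ["normal", "pneumonia", "pneumonia"],
    "xray_003": ["normal", "normal", "normal"],
}
disagreement = {k: vote_entropy(v) for k, v in committee_votes.items()}
contested = select_for_annotation(disagreement, budget=1)
print(to_label, contested)
```

The `budget` parameter reflects how many items annotators can handle per round; the loop of scoring, labeling, and retraining then repeats.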

Active learning has broad applications across use cases involving categorization, classification, and image recognition. In the context of classifying medical images for pneumonia detection, for example, active learning involves training an initial model on a small set of labeled X-rays; the model then selects the most uncertain images from a large pool of unlabeled images for radiologists to label. This process is repeated, progressively improving the model’s accuracy with each cycle by focusing on the most informative samples.

The main advantage of active learning is its potential to reduce labeling costs significantly while still building robust models. However, it relies on the initial model being good enough to identify informative data points. Additionally, the iterative nature of active learning can be more time-consuming than other methods, as it involves multiple rounds of training and annotation.

Weak Supervision

The data labeling strategy behind weak supervision is to train models by blending various data sources that may be imperfect, noisy, or approximate. These sources might include low-quality labeled data from nonexperts, older pretrained models that may be biased, or high-level supervision by SMEs in the form of simple heuristics such as “if data is x, then label as y.”

The synthesis of these noisy labels into a coherent training set is the technical backbone of weak supervision. Techniques like data programming allow for the combination of different labeling functions, taking into account their correlations and accuracies, to produce a probabilistic label for each data point.
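The sketch below shows a heavily simplified version of this idea: three labeling functions vote on a binary sentiment label, and their votes are combined into a probability using accuracy-based weights. Note the assumptions: the accuracies are supplied by hand rather than learned, and the functions are treated as independent, whereas data programming estimates accuracies and correlations from the data. The function names and keywords are hypothetical.

```python
import math

ABSTAIN = None  # a labeling function may decline to vote


def lf_keyword_negative(text):
    return 0 if any(w in text.lower() for w in ("terrible", "horrible")) else ABSTAIN


def lf_keyword_positive(text):
    return 1 if any(w in text.lower() for w in ("great", "love")) else ABSTAIN


def lf_exclamation(text):
    # Deliberately noisy heuristic: enthusiasm often, but not always, signals praise.
    return 1 if text.endswith("!") else ABSTAIN


# Hypothetical (function, assumed accuracy) pairs; real accuracies would be estimated.
LABELING_FUNCTIONS = [
    (lf_keyword_negative, 0.9),
    (lf_keyword_positive, 0.85),
    (lf_exclamation, 0.6),
]


def probabilistic_label(text, lfs=LABELING_FUNCTIONS):
    """Return P(label = 1), or 0.5 if every labeling function abstains."""
    log_odds = 0.0
    for lf, accuracy in lfs:
        vote = lf(text)
        if vote is ABSTAIN:
            continue
        # More accurate functions contribute larger weights to the vote.
        weight = math.log(accuracy / (1.0 - accuracy))
        log_odds += weight if vote == 1 else -weight
    return 1.0 / (1.0 + math.exp(-log_odds))


for review in ["I love this product!", "Terrible service!", "It arrived on Tuesday"]:
    print(review, round(probabilistic_label(review), 3))
```

When two functions disagree, as in the second review, the more accurate one dominates but the result is less extreme than either vote alone, which is the behavior a downstream model can exploit when training on probabilistic labels.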

Weak supervision is particularly beneficial when high-quality labeled data is scarce or expensive to collect. Examples include medical image analysis, where annotations require expert knowledge, and web data extraction, where the sheer volume of data makes manual labeling impractical.

The primary advantage of weak supervision is its scalability, which allows for the rapid creation of large labeled datasets. It also democratizes the machine learning process by enabling nonexperts to contribute to the labeling effort through simple rules or heuristics. That said, the quality of the resulting model is heavily dependent on the quality and diversity of the labeling functions. If these functions are too noisy or correlated, they can introduce bias or systematic errors into the training data.

Combined Methods

The data labeling techniques we’ve discussed—semi-supervised learning, active learning, and weak supervision—can often be complementary and, in many cases, are used in conjunction to address the challenges of data labeling in machine learning.

Semi-supervised learning can be paired with active learning to create a powerful iterative process. Initially, a model can be trained on a small labeled dataset to make predictions on unlabeled data. Active learning can then be employed to selectively label the most informative of the unlabeled instances as identified by the semi-supervised model. This iterative process will continue, with the model improving as it receives more labeled data, thus reducing the overall labeling effort while enhancing the model’s performance.

Weak supervision can also be integrated into this process: Labeling functions used in weak supervision can provide an initial set of noisy labels, which can serve as a starting point for semi-supervised learning. The model can then refine its understanding of the data distribution, and active learning can be used to further improve the model by requesting human annotators to label the most uncertain data points.

One real-world example: When developing a pneumonia detection model from chest X-rays, a healthcare startup first uses semi-supervised learning by training the model on a small labeled dataset and generating pseudo-labels for unlabeled images. It enhances this with weak supervision by applying heuristic rules and external knowledge to create additional weak labels, and then employs active learning to iteratively select and label the most uncertain images, refining the model’s accuracy with minimal labeled data.

While different techniques can be used together, it’s important to consider the specific characteristics of the dataset and the task at hand. The success of combining these methods depends on factors such as the quality and representativeness of the initial labeled data, the ability to define informative labeling functions for weak supervision, and the model’s capacity to identify truly informative samples for active learning.

Modality-specific Approaches

For different data modalities, such as images, videos, text, and audio data, specialized labeling techniques may be necessary to handle the unique challenges and characteristics of each type.

Computer Vision

In the realm of computer vision, data labeling is a critical step in training models to accurately interpret and understand visual information. Object detection, used to identify the position of objects of interest in an image (e.g., where a car is on the road), is a fundamental application of computer vision. Its successful execution requires training data in which images are annotated with bounding boxes that delineate the boundaries of various objects. These annotations provide the models with the spatial coordinates and dimensions of objects within an image, which is essential for tasks such as surveillance and face recognition.

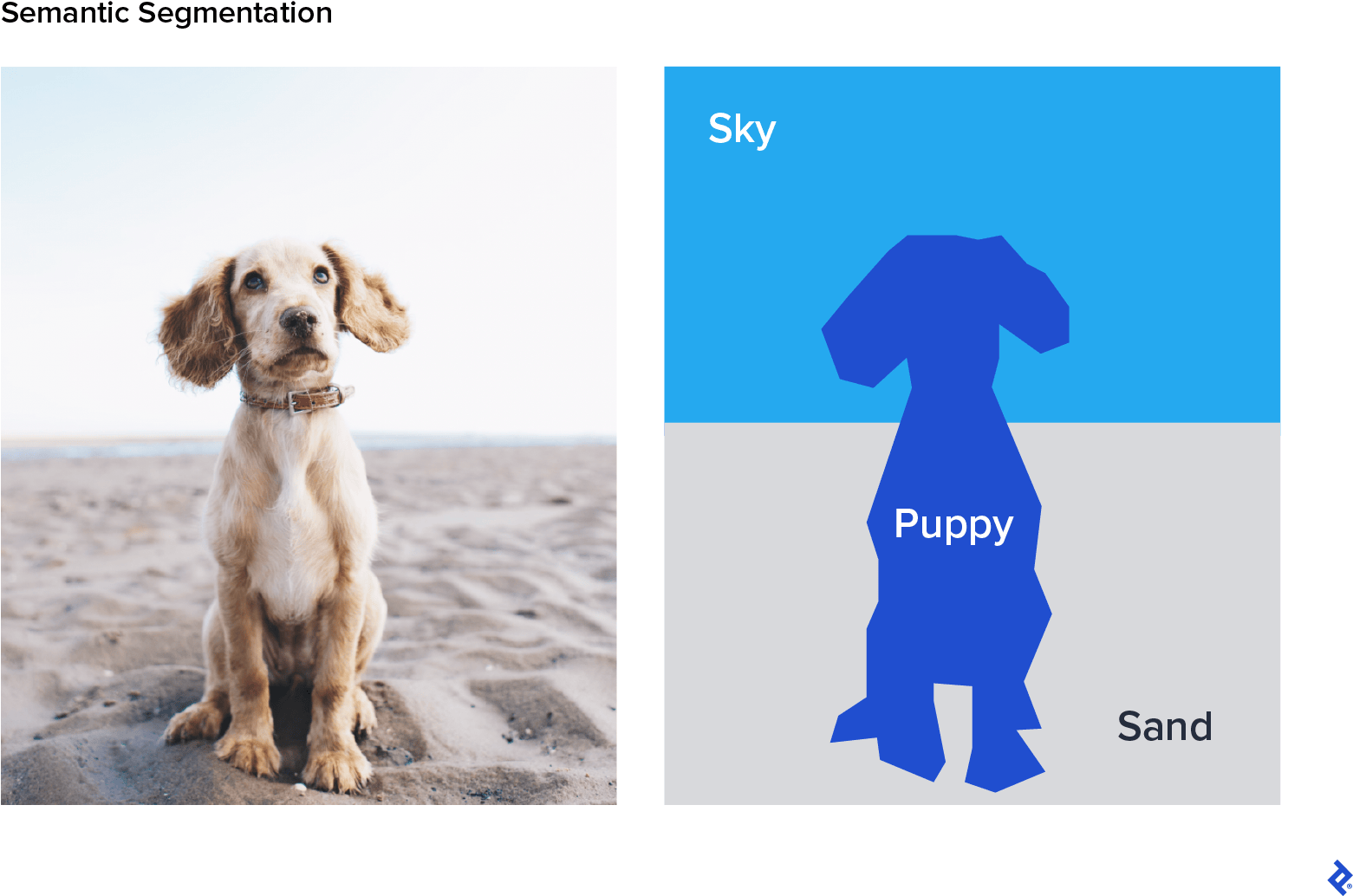
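One way to represent a bounding box annotation is shown in the sketch below, along with intersection over union (IoU), a widely used overlap measure. Using IoU to compare two annotators’ boxes is an illustrative choice here, not something the tools discussed in this article are confirmed to do; the class name, field names, and coordinates are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box in pixel coordinates, with (x_min, y_min) as the top-left corner."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def area(self):
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a, b):
    """Intersection over union: 1.0 for identical boxes, 0.0 for disjoint ones."""
    inter_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    inter_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    intersection = inter_w * inter_h
    return intersection / (a.area + b.area - intersection)


# Invented example: two annotators box the same car slightly differently.
annotator_a = BoundingBox("car", 10, 20, 110, 80)
annotator_b = BoundingBox("car", 20, 20, 120, 80)
agreement = iou(annotator_a, annotator_b)
print(round(agreement, 3))
```

A low IoU between annotators could flag an image for review, although the cutoff that counts as "low" depends on the project.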

Semantic segmentation takes object detection a step further by classifying each pixel in an image into categories defined from a known set of labels and then generating a segmentation mask of the input images. This pixel-level labeling provides more precise object boundaries and enables models to gain a granular understanding of the scene, which is crucial for applications like self-driving cars, where understanding the road environment in detail is necessary for safe navigation.

Building an ML model for semantic segmentation requires a labeled dataset at the pixel level and human annotators who can get involved at various levels of granularity. SMEs can establish ground truth by identifying the contents of an image (e.g., “this is a photo of a car”) at a high level, and then individual pixels can be labeled through techniques such as grouping together those with similar colors or drawing polygons around the relevant objects.
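As a small illustration of how polygon annotations become pixel-level labels, the sketch below rasterizes polygons into a class-ID mask using a ray-casting point-in-polygon test. The class IDs, image size, and polygon coordinates are made up, and production tools use far faster rasterization than this nested loop.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside the polygon given as [(x, y), ...]."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray starting at (x, y) cross the edge (x1, y1)-(x2, y2)?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def polygons_to_mask(width, height, polygons, background=0):
    """Build a height x width mask of class ids; later polygons overwrite earlier ones."""
    mask = [[background] * width for _ in range(height)]
    for class_id, polygon in polygons:
        for row in range(height):
            for col in range(width):
                # Sample each pixel at its center.
                if point_in_polygon(col + 0.5, row + 0.5, polygon):
                    mask[row][col] = class_id
    return mask


# Hypothetical class ids and annotator-drawn polygons for a 6 x 5 image.
CLASS_IDS = {"background": 0, "road": 1, "car": 2}
annotations = [
    (CLASS_IDS["road"], [(0, 3), (6, 3), (6, 5), (0, 5)]),
    (CLASS_IDS["car"], [(2, 2), (4, 2), (4, 4), (2, 4)]),
]
mask = polygons_to_mask(6, 5, annotations)
for row in mask:
    print(row)
```

Drawing order matters in this sketch: the car polygon is listed after the road so that it overwrites the road pixels it covers.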

Labeling images and videos is particularly labor-intensive, but there are several specialized platforms that can streamline the process. These tools often come with features like automated label suggestions, which can accelerate the labeling process by providing pre-labeled data that annotators can then refine. They also typically include quality control workflows to ensure the accuracy of the labels. Examples include:

- Labelbox features tools for a variety of annotation types, including image classification and segmentation, and integrates with machine learning workflows through its API, facilitating both the creation and management of labeled data at scale.

- CVAT, originally developed by Intel, is an open-source annotation platform that allows for detailed labeling of images and videos, with a focus on customizability and extensibility to accommodate specific annotation requirements of different computer vision projects.

- SuperAnnotate employs artificial intelligence to pre-annotate images, which annotators can then refine, optimizing the labeling process for accuracy and efficiency, particularly in large-scale annotation efforts that require rigorous quality control and collaboration.

Natural Language Processing

In the realm of NLP, named entity recognition (NER) is crucial for information extraction, enabling the transformation of unstructured text into structured data that can be used in various applications. For instance, NER is instrumental in powering search engines, recommendation systems, and content classification tools. In order for NER to work properly, words or phrases that identify entities such as names, locations, and organizations must be labeled accurately. This task is often referred to as sequence labeling because each word in a sequence receives a label, and the model needs to understand how words are used in context: When given a sentence such as “Lincoln was a good man,” a sequence labeling model would indicate that “Lincoln” refers to the name of a man (rather than, say, Lincoln, Nebraska).
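A common way to store NER annotations for training is the BIO scheme, in which each token is tagged as the beginning of an entity (B), inside one (I), or outside any entity (O). The sketch below converts annotator-provided entity spans into BIO tags; the sentence, the span format, and the `PER` and `LOC` type names are assumptions for illustration.

```python
def spans_to_bio(tokens, entity_spans):
    """Convert entity spans (start_token, end_token_exclusive, type) into BIO tags."""
    tags = ["O"] * len(tokens)
    for start, end, entity_type in entity_spans:
        tags[start] = f"B-{entity_type}"
        for i in range(start + 1, end):
            tags[i] = f"I-{entity_type}"
    return tags


# Invented example sentence with two annotated entities.
tokens = ["Abraham", "Lincoln", "was", "born", "in", "Kentucky", "."]
spans = [(0, 2, "PER"), (5, 6, "LOC")]
tags = spans_to_bio(tokens, spans)
for token, tag in zip(tokens, tags):
    print(f"{token}\t{tag}")
```

Because every token gets exactly one tag, the output lines up one-to-one with the input sequence, which is the format sequence labeling models are typically trained on.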

Sentiment analysis is another vital task in NLP: Large volumes of text are analyzed to determine whether they contain positive, negative, or neutral opinions. This is particularly important for analyzing and interpreting customer feedback, social media conversations, and product reviews. By understanding the sentiment behind text data, businesses can gain insights into consumer attitudes and preferences, which can inform marketing strategies, product development, and customer service practices. Sentiment analysis requires a labeling process in which pieces of text are labeled according to the feelings they convey. Weak supervision is commonly employed for sentiment analysis: Human annotators can provide heuristics for positive and negative sentiments that a model can quickly apply across thousands or millions of data points. For example, “If a text block includes the words ‘terrible’ or ‘horrible,’ then label it as negative.”

Automated techniques such as leveraging large language models can significantly expedite the NLP data labeling process. LLMs can be prompted or fine-tuned to predict labels for a dataset, providing a preliminary layer of annotation. This pre-labeled data can serve as a starting point for further refinement, which can be achieved through hybrid methods like active learning. However, the reliance on LLMs also introduces potential drawbacks, such as the propagation of biases present in the training data and the need for careful oversight to ensure the accuracy of the annotations.

Audio Data Applications

Audio data applications encompass speech recognition, transcription, and audio event recognition. Transcription involves converting spoken language within an audio clip into corresponding text. This process is fundamental for creating datasets for speech recognition systems, which power virtual assistants, automated captioning services, and voice-controlled devices. Automated transcription is typically performed using advanced speech recognition models that have been trained on large, diverse datasets to accurately capture language nuances, accents, and dialects. Labeling data for these speech recognition models has traditionally been performed by human annotators, but models can be trained to augment the work of SMEs via SSL or active learning.

Another aspect of audio data labeling is audio event annotation, where the goal is to identify and categorize specific nonspeech sounds within an audio clip, such as clapping, engine noises, or musical instruments. This task is essential for building systems that can understand and respond to the broader acoustic environment, such as sound-based surveillance systems, wildlife monitoring, and urban sound analysis. Pattern recognition algorithms are often employed to detect and label these audio events, leveraging features extracted from the sound waves to distinguish between different types of sounds. Audio event annotation can benefit from automated labeling techniques but may require human verification or supervision to ensure accuracy, as models can struggle with poor audio quality, overlapping sounds, or complex acoustic environments. Annotators can correct errors and confirm the presence of audio events, leading to more reliable datasets for training.

Multimodal Labeling

Multimodal labeling techniques involve the simultaneous annotation of data that combines multiple modalities, such as video that includes both audio and visual elements. Some of the most common use cases for multimodal labeling include autonomous vehicle navigation, where models must interpret and integrate visual, audio, and sensor data; and medical diagnostics, where a system designed to diagnose conditions from patient data could combine medical imaging with textual clinical notes. Multimodal labeling is achieved through a combination of modality-specific models and algorithms.

Using LLMs for Efficient Data Labeling

LLMs hold the potential to transform the landscape of data labeling, especially within the domain of NLP. These models, trained on an extensive body of text, are able to make sense of nuances in human language, which enables them to perform complex labeling tasks to a level of sophistication previously unattainable with simpler automated methods. For NER labeling tasks, LLMs can be fine-tuned to identify and label specific entities within text. This is invaluable for tasks like extracting product names from reviews or identifying locations mentioned in travel blogs.

LLMs also play a major role in data augmentation: They can generate additional training data examples to help create more robust machine learning models. This is especially useful when labeled data is limited or expensive to obtain. For example, LLMs can create synthetic customer inquiries for chatbots or expand language datasets to include diverse dialects and idioms. To do this with ChatGPT, you might start with the following prompt:

“I am training a customer support chatbot with limited actual inquiries. One inquiry is: ‘How can I reset my password?’ Generate five synthetic examples similar to the inquiry as a Python list and provide only the Python list as the output.”

ChatGPT would provide this as a response:

[
    "How do I reset my password?",
    "Can you show me how to reset my password?",
    "What's the process for resetting my password?",
    "I need help resetting my password, how can I do that?",
    "Could you guide me on how to reset my password?"
]
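If you want to automate this exchange, one possible shape is sketched below. The `call_llm` parameter is a hypothetical callable (prompt text in, reply text out) standing in for whichever provider API you use; no real client library is shown. The reply is parsed with `ast.literal_eval`, which reads Python literals without executing code, and anything that isn’t a list of strings is discarded.

```python
import ast


def build_augmentation_prompt(seed_inquiry, n_examples=5):
    """Recreate the prompt from the article for an arbitrary seed inquiry."""
    return (
        "I am training a customer support chatbot with limited actual inquiries. "
        f"One inquiry is: '{seed_inquiry}' "
        f"Generate {n_examples} synthetic examples similar to the inquiry as a Python list "
        "and provide only the Python list as the output."
    )


def parse_list_response(response_text):
    """Safely parse the model's reply; return [] if it is not a list of strings."""
    try:
        parsed = ast.literal_eval(response_text.strip())
    except (ValueError, SyntaxError):
        return []
    if isinstance(parsed, list) and all(isinstance(item, str) for item in parsed):
        return parsed
    return []


def augment(seed_inquiry, call_llm, n_examples=5):
    """`call_llm` is a hypothetical callable (prompt -> reply text) wrapping your API of choice."""
    reply = call_llm(build_augmentation_prompt(seed_inquiry, n_examples))
    seen = {seed_inquiry}
    unique = []
    # Drop duplicates and exact copies of the seed while preserving order.
    for text in parse_list_response(reply):
        if text not in seen:
            seen.add(text)
            unique.append(text)
    return unique


def fake_llm(prompt):
    # Stand-in for a real model call so the sketch runs offline.
    return '["How do I reset my password?", "How do I reset my password?", "How can I reset my password?"]'


examples = augment("How can I reset my password?", fake_llm)
print(examples)
```

Filtering out duplicates and copies of the seed matters because models sometimes repeat themselves, and repeated examples add little to a training set.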

Active learning is another area in which LLMs have made a significant impact. By pre-labeling data and identifying instances where the model’s predictions are least confident, LLMs can direct human annotators’ efforts to the most valuable areas, thereby creating a more efficient labeling process. This is particularly useful in continuously evolving fields like news categorization, where topics can change rapidly and models must quickly adapt.

LLMs can also classify text into categories they haven’t explicitly seen during training using zero-shot learning (ZSL). This advanced capability is possible when the model is trained to recognize the semantic relationship between the text and the label descriptions, allowing for flexible and dynamic labeling without the need for extensive retraining. ZSL can be particularly beneficial in areas like content moderation, where new forms of inappropriate content constantly emerge. In practice, though, zero-shot learning generally requires that the model has at least seen text that’s similar to what it’s classifying, or else its accuracy will suffer.

Despite these varied use cases, there are some challenges when LLMs are used to label data. The quality of the output can vary widely, and there is a risk of models perpetuating biases present in their training data. Moreover, LLMs can sometimes generate plausible but incorrect labels, or miss subtle context cues that a human annotator would catch. As a result, human oversight is essential to ensure the accuracy of the labeling process. Annotators should review and correct the work of LLMs, providing a feedback loop that can be used to further refine the models. This collaborative approach leverages the efficiency of LLMs while maintaining the high-quality standards that only human judgment can ensure.

Evaluating Data Labeling Techniques

Choosing the ideal data labeling techniques hinges on finding the right balance between speed, cost, and accuracy for your specific use case. Poor data quality is cited as one of the main reasons why AI and ML projects might take longer, cost more, and deliver less than expected, so it’s crucial to get this right. Automated methods, particularly those involving LLMs for text data, can label data at a pace and cost unattainable by humans, but the quality often suffers in the absence of human verification.

From a practical perspective, it’s often wise to begin with an automated approach if possible, verifying the quality on a subset of the data to determine whether it’s acceptable. If the quality is poor, then consider implementing the hybrid techniques that are most relevant to the data modality.
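This spot-check can be sketched as follows: sample a subset of the automatically labeled items, compare them against human review, and use the estimated accuracy to decide whether to fall back on a hybrid approach. The `review_fn` callable, the 0.95 threshold, and the sample data are assumptions; the right threshold depends entirely on the use case.

```python
import random


def estimate_label_accuracy(auto_labels, review_fn, sample_size, seed=0):
    """Spot-check a random subset of automated labels; returns (accuracy, reviewed ids)."""
    if not auto_labels:
        raise ValueError("auto_labels must not be empty")
    rng = random.Random(seed)
    ids = sorted(auto_labels)
    sample = rng.sample(ids, min(sample_size, len(ids)))
    # `review_fn` is a hypothetical hook that returns the human-verified label for an id.
    correct = sum(1 for item_id in sample if review_fn(item_id) == auto_labels[item_id])
    return correct / len(sample), sample


def choose_strategy(accuracy, threshold=0.95):
    """Illustrative decision rule; the threshold is an assumption, not a standard."""
    return "automated" if accuracy >= threshold else "hybrid"


# Invented data: ten automated labels, two of which a human reviewer disagrees with.
auto_labels = {f"doc_{i}": "spam" if i % 2 else "ham" for i in range(10)}
human_labels = dict(auto_labels)
human_labels["doc_3"] = "ham"
human_labels["doc_8"] = "spam"

accuracy, reviewed = estimate_label_accuracy(auto_labels, human_labels.get, sample_size=10)
strategy = choose_strategy(accuracy)
print(accuracy, strategy)
```

With larger datasets the sample would be a small fraction of the total, so the accuracy figure is an estimate whose reliability grows with the sample size.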

Looking ahead, the future of data labeling methodologies will continue to be heavily influenced by advancements in AI and ML, with LLMs playing a central role, especially in the realm of NLP. As these models continue to evolve, we can expect them to become even more sophisticated in their processing and generation of human language, leading to ever more accurate and nuanced labeling capabilities. Moreover, the development of domain-specific LLMs tailored to particular industries or tasks, such as in healthtech for preventive care, could provide even greater precision and relevance in labeling efforts.

In addition to LLMs, the rise of multimodal AI models that can process and integrate information from various data types (text, images, and audio) will expand the scope of automated labeling to increasingly complex and diverse datasets. These advancements will not only improve the speed and reduce the costs associated with data labeling methods, but also open up new possibilities for creating datasets that were previously too challenging to label manually. Despite the increasing capabilities of AI, human oversight will continue to be critical. The synergy between human expertise and AI-driven automation will continue to shape the future of data labeling, making it more scalable, accessible, and adaptable to the demands of the data-driven world.

The technical content presented in this article was reviewed by Jedrzej Kardach and Tayyab Nasir.