GenAI and the Role of GraphRAG in Expanding LLM Accuracy

Daniel D. Gutierrez, Editor-in-Chief & Resident Data Scientist, insideAI News, is a practicing data scientist who has been working with data since long before the field came into vogue. He is especially excited about closely following the Generative AI revolution that’s taking place. As a technology journalist, he enjoys keeping a finger on the pulse of this fast-paced industry.

Generative AI, or GenAI, has grown rapidly in recent years, largely fueled by the development of large language models (LLMs). These models can generate human-like text and answer a wide range of questions, driving innovations across diverse sectors from customer service to medical diagnostics. However, despite their impressive language capabilities, LLMs face limitations when it comes to accuracy, especially in complex or specialized knowledge areas. This is where advanced retrieval-augmented generation (RAG) techniques, particularly those involving graph-based knowledge representation, can significantly enhance their performance. One such solution is GraphRAG, which combines knowledge graphs with LLMs to boost accuracy and contextual understanding.

The Rise of Generative AI and LLMs

Large language models, typically trained on vast datasets from the internet, learn patterns in text, which allows them to generate coherent and contextually relevant responses. However, while LLMs are proficient at providing general information, they struggle with highly specific queries, rare events, or niche topics that aren’t as well-represented in their training data. Additionally, LLMs are prone to “hallucinations,” where they generate plausible-sounding but inaccurate or entirely fabricated answers. These hallucinations can be problematic in high-stakes applications where precision and reliability are paramount.

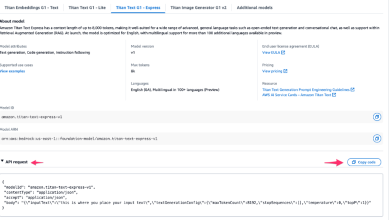

To address these challenges, developers and researchers are increasingly adopting RAG methods, where the language model is supplemented by external knowledge sources during inference. In RAG frameworks, the model is able to retrieve relevant information from databases, structured documents, or other repositories, which helps ground its responses in factual data. Traditional RAG implementations have primarily relied on textual databases. However, GraphRAG, which leverages graph-based knowledge representations, has emerged as a more sophisticated approach that promises to further enhance the performance of LLMs.

Understanding Retrieval-Augmented Generation (RAG)

At its core, RAG is a technique that integrates retrieval and generation tasks in LLMs. Traditional LLMs, when asked a question, generate answers based purely on their internal knowledge, acquired from their training data. In RAG, however, relevant information is first retrieved from an external knowledge source and supplied to the LLM before it generates a response. This retrieval mechanism allows the model to “look up” information, thereby reducing the likelihood of errors stemming from outdated or insufficient training data.

In most RAG implementations, retrieval relies on semantic search: a retriever scans a database or corpus for the documents or passages most relevant to the query. The retrieved content is then passed to the LLM as additional context to help shape its response. While effective, this approach can still fall short when answering requires connecting several pieces of information that no single passage states together. In these cases, the semantic relationships between different pieces of information need to be represented in a structured way, and this is where knowledge graphs come into play.
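The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production retriever: it assumes a tiny hand-written list of passages (`PASSAGES`), uses bag-of-words cosine similarity as a stand-in for the embedding-based semantic search a real system would more likely use, and stops at building the prompt rather than calling any particular LLM. The function names `retrieve` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, passages, k=2):
    """Return up to k passages ranked by similarity to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(p))), p) for p in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for score, p in scored[:k] if score > 0]


def build_prompt(query, retrieved):
    """Place the retrieved passages in front of the question as context."""
    context = "\n".join(f"- {p}" for p in retrieved)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Toy corpus (an assumption for illustration, not a real knowledge source).
PASSAGES = [
    "Metformin is a first-line treatment for type 2 diabetes.",
    "Knowledge graphs store entities and the relationships between them.",
    "Increased thirst is a common symptom of type 2 diabetes.",
]

query = "What is a treatment for type 2 diabetes?"
top = retrieve(query, PASSAGES, k=2)
prompt = build_prompt(query, top)
print(prompt)
```

Note that the two retrieved passages are ranked independently of each other: nothing in this flow records that the symptom and the treatment belong to the same condition.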

What is GraphRAG?

GraphRAG, or Graph-based Retrieval-Augmented Generation, builds on the RAG concept by incorporating knowledge graphs as the retrieval source instead of a standard text corpus. A knowledge graph is a network of entities (such as people, places, organizations, or concepts) interconnected by relationships. This structure allows for a more nuanced representation of information, where entities are not just isolated nodes of data but are embedded within a context of meaningful relationships.
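One common way to represent such a network is as a set of (subject, relation, object) triples with an index for looking up an entity's connections. The sketch below assumes that representation; the `KnowledgeGraph` class, its method names, and the relation labels are hypothetical and do not correspond to any specific GraphRAG library.

```python
from collections import defaultdict


class KnowledgeGraph:
    """A tiny in-memory graph of (subject, relation, object) triples."""

    def __init__(self):
        self.outgoing = defaultdict(list)  # subject -> [(relation, object)]
        self.incoming = defaultdict(list)  # object  -> [(relation, subject)]

    def add(self, subject, relation, obj):
        """Store one directed, labeled edge between two entities."""
        self.outgoing[subject].append((relation, obj))
        self.incoming[obj].append((relation, subject))

    def neighbors(self, entity):
        """Return every triple that mentions the entity, in either direction."""
        out = [(entity, r, o) for r, o in self.outgoing.get(entity, [])]
        inc = [(s, r, entity) for r, s in self.incoming.get(entity, [])]
        return out + inc


kg = KnowledgeGraph()
kg.add("Marie Curie", "born_in", "Warsaw")
kg.add("Marie Curie", "awarded", "Nobel Prize in Physics")
kg.add("Warsaw", "capital_of", "Poland")

# A place is connected both to the person born there and to its country.
print(kg.neighbors("Warsaw"))
```

Looking up "Warsaw" returns its relationship to a person and to a country together, which is the kind of context an isolated text passage would not necessarily provide.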

By leveraging knowledge graphs, GraphRAG enables LLMs to retrieve information in a way that reflects the interconnectedness of real-world knowledge. For example, in a medical application, a traditional text-based retrieval model might pull up passages about symptoms or treatment options independently. A knowledge graph, on the other hand, would allow the model to access information about symptoms, diagnoses, and treatment pathways in a way that reveals the relationships between these entities. This contextual depth improves the accuracy and relevance of responses, especially in complex or multi-faceted queries.
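The medical example can be made concrete with a short sketch that follows relationships from a symptom to candidate conditions and on to treatments. The triples, the relation names `symptom_of` and `treated_with`, and the function `treatment_pathways` are all assumptions made for illustration; the data is a hand-written toy set, not drawn from a clinical source.

```python
# Toy triples (illustrative assumptions only, not clinical data).
TRIPLES = [
    ("increased thirst", "symptom_of", "type 2 diabetes"),
    ("fatigue", "symptom_of", "type 2 diabetes"),
    ("fatigue", "symptom_of", "anemia"),
    ("type 2 diabetes", "treated_with", "metformin"),
    ("anemia", "treated_with", "iron supplements"),
]


def objects(subject, relation):
    """All objects linked to `subject` by `relation`."""
    return [o for s, r, o in TRIPLES if s == subject and r == relation]


def treatment_pathways(symptom):
    """Follow symptom -> condition -> treatment edges and return each path."""
    paths = []
    for condition in objects(symptom, "symptom_of"):
        for treatment in objects(condition, "treated_with"):
            paths.append((symptom, condition, treatment))
    return paths


for path in treatment_pathways("fatigue"):
    print(" -> ".join(path))
```

Each returned path keeps the symptom, the diagnosis, and the treatment together, so the text handed to the LLM states how the three are related rather than listing them as separate facts.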

How GraphRAG Enhances LLM Accuracy

- Enhanced Contextual Understanding: GraphRAG’s knowledge graphs provide context that LLMs can leverage to understand the nuances of a query better. Instead of treating individual facts as isolated points, the model can recognize the relationships between them, leading to responses that are not only factually accurate but also contextually coherent.

- Reduction in Hallucinations: By grounding its responses in a structured knowledge base, GraphRAG reduces the likelihood of hallucinations. Since the model retrieves relevant entities and their relationships from a curated graph, it’s less prone to generating unfounded or speculative information.

- Improved Efficiency in Specialized Domains: Knowledge graphs can be customized for specific industries or topics, such as finance, law, or healthcare, enabling LLMs to retrieve domain-specific information more efficiently. This customization is especially valuable for companies that rely on specialized knowledge, where conventional LLMs might fall short due to gaps in their general training data.

- Better Handling of Complex Queries: Traditional RAG methods might struggle with complex, multi-part queries where the relationships between different concepts are crucial for an accurate response. GraphRAG, with its ability to navigate and retrieve interconnected knowledge, provides a more sophisticated mechanism for addressing these complex information needs.
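To illustrate how graph retrieval might serve a multi-part query and ground the model's answer, the sketch below links the query to graph entities, expands outward a fixed number of hops, and writes the collected facts into a prompt. It is one possible design under stated assumptions: the company names and triples are fictional, entity linking is a naive substring match, and `find_entities`, `k_hop_subgraph`, and `build_prompt` are hypothetical helpers rather than the API of any GraphRAG implementation.

```python
from collections import deque

# Fictional example graph (assumption for illustration).
TRIPLES = [
    ("Acme Corp", "supplier_of", "Globex"),
    ("Globex", "headquartered_in", "Germany"),
    ("Germany", "member_of", "European Union"),
    ("Initech", "headquartered_in", "United States"),
]


def find_entities(query, triples):
    """Naive entity linking: keep graph entities whose name appears in the query."""
    names = {s for s, _, _ in triples} | {o for _, _, o in triples}
    lowered = query.lower()
    return sorted(n for n in names if n.lower() in lowered)


def k_hop_subgraph(seeds, triples, k=2):
    """Breadth-first expansion: collect triples within k hops of the seeds."""
    seen = set(seeds)
    frontier = deque((s, 0) for s in seeds)
    selected = []
    while frontier:
        entity, depth = frontier.popleft()
        if depth == k:
            continue  # do not expand beyond the hop limit
        for triple in triples:
            s, _, o = triple
            if entity not in (s, o):
                continue
            if triple not in selected:
                selected.append(triple)
            other = o if entity == s else s
            if other not in seen:
                seen.add(other)
                frontier.append((other, depth + 1))
    return selected


def build_prompt(query, facts):
    """Serialize the retrieved triples as plain-text facts for the LLM."""
    lines = "\n".join(f"- {s} {r.replace('_', ' ')} {o}" for s, r, o in facts)
    return f"Use only these facts:\n{lines}\n\nQuestion: {query}"


query = "In which country is the company that Acme Corp supplies based?"
seeds = find_entities(query, TRIPLES)
facts = k_hop_subgraph(seeds, TRIPLES, k=2)
print(build_prompt(query, facts))
```

The query names only "Acme Corp", yet the two-hop expansion also retrieves the fact about Globex needed to answer it, while the unrelated Initech triple is left out. The hop limit `k` is a tunable assumption: larger values pull in more context at the cost of more irrelevant facts.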

Applications of GraphRAG in Industry

GraphRAG is particularly promising for applications where accuracy and contextual understanding are essential. In healthcare, it can assist doctors by providing more precise information on treatments and their associated risks. In finance, it can offer insights on market trends and economic factors that are interconnected. Educational platforms can also benefit from GraphRAG by offering students richer and more contextually relevant learning materials.

The Future of GraphRAG and Generative AI

As LLMs continue to evolve, the integration of knowledge graphs through GraphRAG represents a pivotal step forward. This hybrid approach not only improves the factual accuracy of LLMs but also aligns their responses more closely with the complexity of real-world information. For enterprises and researchers alike, GraphRAG offers a powerful tool to harness the full potential of generative AI in ways that prioritize both accuracy and contextual depth.

In conclusion, GraphRAG stands as an innovative advancement in the GenAI ecosystem, bridging the gap between vast language models and the need for accurate, reliable, and contextually aware AI. By weaving together the strengths of LLMs and structured knowledge graphs, GraphRAG paves the way for a future where generative AI is both more trustworthy and impactful in decision-critical applications.
