GeoCoder: Enhancing Geometric Reasoning in Vision-Language Models through Modular Code-Finetuning and Retrieval-Augmented Memory

Geometry problem-solving relies heavily on advanced reasoning skills to interpret visual inputs, process questions, and apply mathematical formulas accurately. Although vision-language models (VLMs) have shown progress in multimodal tasks, they still face significant limitations with geometry, particularly in executing unfamiliar mathematical operations, like calculating the cosine of non-standard angles. This challenge is amplified by autoregressive training, which emphasizes next-token prediction and often leads to inaccurate calculations and formula misuse. While methods like Chain-of-Thought (CoT) reasoning and mathematical code generation offer some improvement, these approaches still struggle to apply geometry concepts and formulas correctly in complex, multi-step problems.

The study reviews prior research on VLMs and code-generating models for solving geometry problems. While general-purpose VLMs have progressed, new datasets designed to benchmark geometric reasoning show that they often struggle with it. Neuro-symbolic systems have been developed to enhance problem-solving by combining language models with logical deduction. Further advances in language models for mathematical reasoning enable code-based solutions, but these models often lack multimodal capabilities.

Researchers from Mila, Polytechnique Montréal, Université de Montréal, CIFAR AI, and Google DeepMind introduce GeoCoder, a VLM approach designed for solving geometry problems through modular code generation. GeoCoder uses a predefined geometry function library to execute code accurately and reduce errors in formula application, offering consistent and interpretable solutions. They also present RAG-GeoCoder, a variant with retrieval-augmented memory that pulls functions directly from the geometry library, minimizing reliance on the model's internal memory. GeoCoder and RAG-GeoCoder achieve a performance boost of more than 16% on geometry tasks, demonstrating enhanced reasoning and interpretability on complex multimodal datasets.
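To make the idea of a predefined function library concrete, here is a minimal Python sketch. The function names, the templated print messages, and the example problem are hypothetical illustrations, not GeoCoder's actual library. The point it shows is that the model only has to emit short calls, while the arithmetic (including the cosine of a non-standard angle such as 37 degrees) is computed deterministically by the interpreter, and each function prints a templated step that keeps the solution readable.

```python
import math

# Hypothetical geometry function library (illustrative names, not the paper's).
# Each function computes its value deterministically and prints a templated
# explanation of the step, so the executed program doubles as a readable solution.

def triangle_side_law_of_cosines(a: float, b: float, angle_deg: float) -> float:
    """Side opposite `angle_deg`, given the two sides a and b that enclose it."""
    c = math.sqrt(a**2 + b**2 - 2 * a * b * math.cos(math.radians(angle_deg)))
    print(f"Law of cosines: sqrt({a}^2 + {b}^2 - 2*{a}*{b}*cos({angle_deg} deg)) = {c:.2f}")
    return c

def circle_area(radius: float) -> float:
    """Area of a circle with the given radius."""
    area = math.pi * radius**2
    print(f"Circle area: pi * {radius:.2f}^2 = {area:.2f}")
    return area

# A program a code-finetuned VLM *might* generate for the hypothetical problem:
# "Two sides of a triangle are 6 and 8 and enclose a 37 degree angle. A circle's
#  diameter equals the third side. What is the area of the circle?"
third_side = triangle_side_law_of_cosines(6, 8, 37)
answer = circle_area(third_side / 2)
print(f"Answer: {answer:.2f}")
```

In this sketch, a wrong answer can only come from choosing or composing the wrong functions, not from the model miscomputing a number token by token.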

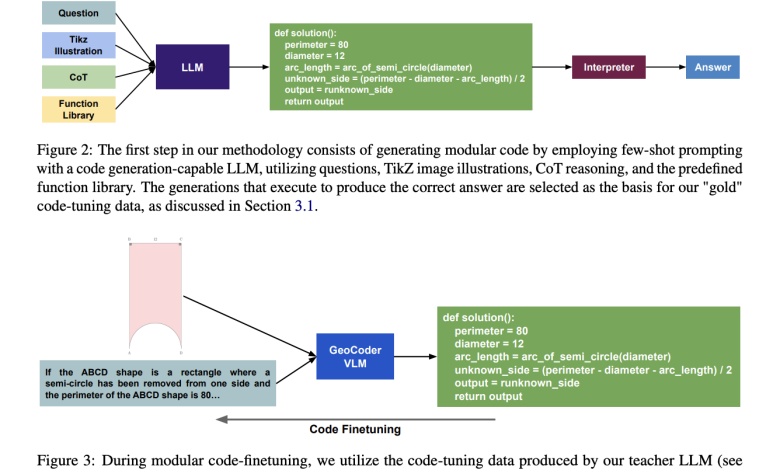

The proposed method introduces GeoCoder, a VLM fine-tuned to solve geometry problems by generating modular Python code that references a predefined geometry function library. Unlike traditional CoT fine-tuning, this approach ensures accurate calculations and reduces formula errors by directly executing the generated code. GeoCoder uses a knowledge-distillation process to create high-quality training data and interpretable function outputs. Additionally, RAG-GeoCoder, a retrieval-augmented version, employs a multimodal retriever to select relevant functions from memory for more precise code generation, enhancing the model’s problem-solving ability by reducing reliance on internal memory alone.
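The retrieval step can be pictured with the simplified Python sketch below. It is a stand-in under loud assumptions: RAG-GeoCoder uses a multimodal retriever, whereas this sketch swaps in plain bag-of-words cosine similarity between the question text and short function descriptions, and it ignores the diagram entirely. The function names, descriptions, and stopword list are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical "memory" of library functions: name -> short natural-language description.
FUNCTION_MEMORY = {
    "triangle_side_law_of_cosines": "length of a triangle side from two sides and the included angle using the law of cosines",
    "circle_area": "area of a circle from its radius",
    "circle_circumference": "circumference or perimeter of a circle from its radius",
    "rectangle_area": "area of a rectangle from its width and height",
    "triangle_area_from_base_height": "area of a triangle from its base and height",
}

# Small illustrative stopword list so that filler words do not dominate the scores.
STOPWORDS = {"a", "an", "the", "of", "and", "is", "are", "with", "what", "from", "its", "s"}

def bag_of_words(text: str) -> Counter:
    """Lowercase word counts, ignoring digits, punctuation, and stopwords."""
    return Counter(w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS)

def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve_functions(question: str, memory: dict = FUNCTION_MEMORY, k: int = 2) -> list:
    """Return the names of the k functions whose descriptions best match the question."""
    query = bag_of_words(question)
    ranked = sorted(
        memory,
        key=lambda name: cosine_similarity(query, bag_of_words(memory[name])),
        reverse=True,
    )
    return ranked[:k]

question = ("Two sides of a triangle are 6 and 8 with an included angle of 37 degrees. "
            "A circle's diameter equals the third side. What is the area of the circle?")
print(retrieve_functions(question))  # ['triangle_side_law_of_cosines', 'circle_area']
```

The retrieved names would then be placed in the model's context so that generated code calls functions that actually exist; a retrieval miss is one plausible source of calls to undefined functions.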

On the GeomVerse dataset, code-finetuned models significantly outperform CoT-finetuned models, with RAG-GeoCoder surpassing the prior state of the art, PaLI 5B, by 26.2% to 36.3% across problem depths. On GeoQA-NO, GeoCoder achieves 42.3% relaxed accuracy, outperforming CoT-finetuned LLaVA 1.5 by 14.3%. Error analysis reveals that RAG-GeoCoder produces fewer syntax errors but more name errors at higher depths because of retrieval limitations. Moreover, RAG-GeoCoder enhances interpretability and accuracy by using templated print functions and applies library functions 17% more often than GeoCoder, indicating better modular function usage across problem depths.
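The error categories and the relaxed-accuracy metric can be illustrated with a small evaluation harness. This is a minimal sketch under stated assumptions: the generated program is assumed to store its result in a variable named `answer`, the one-function library is hypothetical, and the 3% relative tolerance is an illustrative choice, not necessarily the value the authors use. Python's `compile` raises `SyntaxError` before anything runs, while calling a function that is not in the library raises `NameError` at execution time, mirroring the two error types discussed.

```python
import contextlib
import io
import math

# Hypothetical one-function library exposed to the generated code.
LIBRARY = {"circle_area": lambda radius: math.pi * radius**2}

def run_generated_code(code: str, library: dict) -> tuple:
    """Execute model-generated code; return (status, answer).

    Assumes the program stores its final result in a variable named `answer`.
    Note: exec() runs arbitrary code; a real harness should sandbox it.
    """
    try:
        compiled = compile(code, "<generated>", "exec")  # parse errors surface here
    except SyntaxError:
        return "syntax_error", None
    namespace = dict(library)
    try:
        with contextlib.redirect_stdout(io.StringIO()):  # discard templated prints
            exec(compiled, namespace)
    except NameError:  # e.g. calling a function that is not in the library
        return "name_error", None
    except Exception:  # any other failure while running, e.g. division by zero
        return "runtime_error", None
    return "ok", namespace.get("answer")

def relaxed_match(prediction, target: float, rel_tol: float = 0.03) -> bool:
    """True if prediction is within rel_tol of target (relative); 3% is an assumed default."""
    if prediction is None:
        return False
    return abs(prediction - target) <= rel_tol * abs(target)

def relaxed_accuracy(predictions, targets, rel_tol: float = 0.03) -> float:
    """Fraction of predictions that relaxed-match their targets."""
    hits = sum(relaxed_match(p, t, rel_tol) for p, t in zip(predictions, targets))
    return hits / len(targets)

programs = [
    "answer = circle_area(2)",   # correct call
    "answer = circle_area(2",    # missing parenthesis -> SyntaxError
    "answer = circle_areaa(2)",  # unknown function -> NameError
]
results = [run_generated_code(p, LIBRARY) for p in programs]
print([status for status, _ in results])  # ['ok', 'syntax_error', 'name_error']
print(f"{relaxed_accuracy([ans for _, ans in results], [4 * math.pi] * 3):.3f}")  # 0.333
```

Separating compile-time from run-time failures is what lets an analysis attribute errors either to malformed code or to references to functions the model never had access to.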

In conclusion, GeoCoder introduces a modular code-finetuning approach for geometry problem-solving in VLMs, achieving consistent improvement over CoT-finetuning by enabling accurate, deterministic calculations. By leveraging a library of geometry functions, GeoCoder enhances interpretability and reduces formula errors. Additionally, RAG-GeoCoder, a retrieval-augmented variant, employs a non-parametric memory module to retrieve functions as needed, further improving accuracy by lowering reliance on the model's internal memory. This code-finetuning framework significantly boosts VLMs' geometric reasoning, achieving a performance gain of more than 16% on the GeomVerse dataset compared to other fine-tuning techniques.

Check out the Paper. All credit for this research goes to the researchers of this project.


Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.