Use Amazon SageMaker Studio with a custom file system in Amazon EFS

Amazon SageMaker Studio is the latest web-based experience for running end-to-end machine learning (ML) workflows. SageMaker Studio offers a suite of integrated development environments (IDEs), including JupyterLab, Code Editor, and RStudio. Data scientists and ML engineers can spin up SageMaker Studio private and shared spaces, which manage the storage and resource needs of the JupyterLab and Code Editor applications, let users stop the applications when not in use to save on compute costs, and let them resume work from where they stopped.

The storage resources for SageMaker Studio spaces are Amazon Elastic Block Store (Amazon EBS) volumes, which offer low-latency access to user data like notebooks, sample data, or Python/Conda virtual environments. However, there are several scenarios where using a distributed file system shared across private JupyterLab and Code Editor spaces is convenient, which is enabled by configuring an Amazon Elastic File System (Amazon EFS) file system in SageMaker Studio. Amazon EFS provides a scalable fully managed elastic NFS file system for AWS compute instances.

Amazon SageMaker supports automatically mounting a folder in an EFS volume for each user in a domain. Using this folder, users can share data between their own private spaces. However, users can’t share data with other users in the domain; they only have access to their own folder user-default-efs in the $HOME directory of the SageMaker Studio application.

In this post, we explore three distinct scenarios that demonstrate the versatility of integrating custom Amazon EFS with SageMaker Studio.

For further information on configuring Amazon EFS in SageMaker Studio, refer to Attaching a custom file system to a domain or user profile.

Solution overview

In the first scenario, an AWS infrastructure admin wants to set up an EFS file system that can be shared across the private spaces of a given user profile in SageMaker Studio. This means that each user within the domain will have their own private space on the EFS file system, allowing them to store and access their own data and files. The automation described in this post enables new team members joining the data science team to quickly set up their private space on the EFS file system and access the resources they need to start contributing to the ongoing project.

The following diagram illustrates this architecture.

This scenario offers the following benefits:

- Individual data storage and analysis – Users can store their personal datasets, models, and other files in their private spaces, allowing them to work on their own projects independently. Segregation is made by their user profile.

- Centralized data management – The administrator can manage the EFS file system centrally, maintaining data security, backup, and direct access for all users. By setting up an EFS file system with a private space, users can effortlessly track and maintain their work.

- Cross-instance file sharing – Users can access their files from multiple SageMaker Studio spaces, because the EFS file system provides a persistent storage solution.

The second scenario is related to the creation of a single EFS directory that is shared across all the spaces of a given SageMaker Studio domain. This means that all users within the domain can access and use the same shared directory on the EFS file system, allowing for better collaboration and centralized data management (for example, to share common artifacts). This is a more generic use case, because there is no specific segregated folder for each user profile.

The following diagram illustrates this architecture.

This scenario offers the following benefits:

- Shared project directories – Suppose the data science team is working on a large-scale project that requires collaboration among multiple team members. By setting up a shared EFS directory at project level, the team can collaborate on the same projects by accessing and working on files in the shared directory. The data science team can, for example, use the shared EFS directory to store their Jupyter notebooks, analysis scripts, and other project-related files.

- Simplified file management – Users don’t need to manage their own private file storage, because they can rely on the shared directory for their file-related needs.

- Improved data governance and security – The shared EFS directory, being centrally managed by the AWS infrastructure admin, can provide improved data governance and security. The admin can implement access controls and other data management policies to maintain the integrity and security of the shared resources.

The third scenario explores the configuration of an EFS file system that can be shared across multiple SageMaker Studio domains within the same VPC. This allows users from different domains to access and work with the same set of files and data, enabling cross-domain collaboration and centralized data management.

The following diagram illustrates this architecture.

This scenario offers the following benefits:

- Enterprise-level data science collaboration – Imagine a large organization with multiple data science teams working on various projects across different departments or business units. By setting up a shared EFS file system accessible across the organization’s SageMaker Studio domains, these teams can collaborate on cross-functional projects, share artifacts, and use a centralized data repository for their work.

- Shared infrastructure and resources – The EFS file system can be used as a shared resource across multiple SageMaker Studio domains, promoting efficiency and cost-effectiveness.

- Scalable data storage – As the number of users or domains increases, the EFS file system automatically scales to accommodate the growing storage and access requirements.

- Data governance – The shared EFS file system, being managed centrally, can be subject to stricter data governance policies, access controls, and compliance requirements. This can help the organization meet regulatory and security standards while still enabling cross-domain collaboration and data sharing.

Prerequisites

This post provides an AWS CloudFormation template to deploy the main resources for the solution. In addition to this, the solution expects that the AWS account in which the template is deployed already has the following configuration and resources:

Refer to Attaching a custom file system to a domain or user profile for additional prerequisites.

Configure an EFS directory shared across private spaces of a given user profile

In this scenario, an administrator wants to provision an EFS file system for all users of a SageMaker Studio domain, creating a private file system directory for each user. We can distinguish two use cases:

- Create new SageMaker Studio user profiles – A new team member joins a preexisting SageMaker Studio domain and wants to attach a custom EFS file system to the JupyterLab or Code Editor spaces

- Use preexisting SageMaker Studio user profiles – A team member is already working on a specific SageMaker Studio domain and wants to attach a custom EFS file system to the JupyterLab or Code Editor spaces

The solution provided in this post focuses on the first use case. We discuss how to adapt the solution for preexisting SageMaker Studio domain user profiles later in this post.

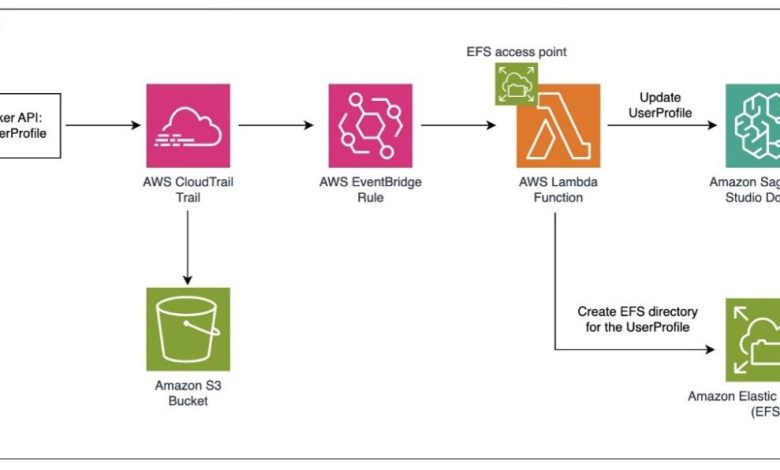

The following diagram illustrates the high-level architecture of the solution.

In this solution, we use CloudTrail, Amazon EventBridge, and Lambda to automatically create a private EFS directory when a new SageMaker Studio user profile is created. The high-level steps to set up this architecture are as follows:

- Create an EventBridge rule that invokes the Lambda function when a new SageMaker user profile is created and logged in CloudTrail.

- Create an EFS file system with an access point for the Lambda function and with a mount target in every Availability Zone in which the SageMaker Studio domain is located.

- Use a Lambda function to create a private EFS directory with the required POSIX permissions for the profile. The function will also update the profile with the new file system configuration.

Deploy the solution using AWS CloudFormation

To use the solution, you can deploy the infrastructure using the following CloudFormation template. This template deploys three main resources in your account: Amazon EFS resources (file system, access points, mount targets), an EventBridge rule, and a Lambda function.

Refer to Create a stack from the CloudFormation console for additional information. The input parameters for this template are:

- SageMakerDomainId – The SageMaker Studio domain ID that will be associated with the EFS file system.

- SageMakerStudioVpc – The VPC associated with the SageMaker Studio domain.

- SageMakerStudioSubnetId – One or multiple subnets associated with the SageMaker Studio domain. The template deploys its resources in these subnets.

- SageMakerStudioSecurityGroupId – The security group associated with the SageMaker Studio domain. The template configures the Lambda function with this security group.
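As a rough sketch, the four input parameters above could be passed programmatically instead of through the console. The helper names, the stack name, and the template URL are hypothetical placeholders. Joining the subnets with commas assumes the template declares SageMakerStudioSubnetId as a list-type parameter, and the CAPABILITY_IAM capability assumes the template creates IAM roles for the Lambda function.

```python
def build_stack_parameters(domain_id, vpc_id, subnet_ids, security_group_id):
    """Return the Parameters list in the shape CloudFormation's create_stack expects."""
    values = {
        "SageMakerDomainId": domain_id,
        "SageMakerStudioVpc": vpc_id,
        # Assumption: list-type parameters are passed as a comma-delimited string.
        "SageMakerStudioSubnetId": ",".join(subnet_ids),
        "SageMakerStudioSecurityGroupId": security_group_id,
    }
    return [{"ParameterKey": k, "ParameterValue": v} for k, v in values.items()]


def deploy_stack(cfn_client, stack_name, template_url, parameters):
    """Create the stack; cfn_client is a boto3 CloudFormation client."""
    return cfn_client.create_stack(
        StackName=stack_name,          # hypothetical name chosen by the caller
        TemplateURL=template_url,      # placeholder: URL of the template from this post
        Parameters=parameters,
        Capabilities=["CAPABILITY_IAM"],  # assumption: the template creates IAM roles
    )
```

With boto3 installed and credentials configured, `deploy_stack(boto3.client("cloudformation"), ...)` would start the deployment; the parameter builder itself has no AWS dependency.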

Amazon EFS resources

After you deploy the template, navigate to the Amazon EFS console and confirm that the EFS file system has been created. The file system has a mount target in every Availability Zone that your SageMaker domain connects to.

Note that each mount target uses the EC2 security group that SageMaker created in your AWS account when you first created the domain, which allows NFS traffic on port 2049. The provided template automatically retrieves this security group when it is first deployed, using a Lambda-backed custom resource.

You can also observe that the file system has an EFS access point. This access point grants root access on the file system for the Lambda function that will create the directories for the SageMaker Studio user profiles.

EventBridge rule

The second main resource is an EventBridge rule that is invoked when a new SageMaker Studio user profile is created. Its target is the Lambda function that creates the folder in the EFS file system and updates the profile that has just been created. The Lambda function receives the matched event as input, from which it can read the SageMaker Studio domain ID and the SageMaker user profile name.
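To make the rule more concrete, the following sketch shows what an event pattern for this rule might look like and how the function could read the two values from the matched event. The function names are hypothetical. The field names domainId and userProfileName under requestParameters are an assumption about how CloudTrail records the CreateUserProfile call, so check them against a real event before relying on them.

```python
import json


def build_event_pattern():
    """Event pattern matching CreateUserProfile API calls recorded by CloudTrail."""
    return {
        "source": ["aws.sagemaker"],
        "detail-type": ["AWS API Call via CloudTrail"],
        "detail": {
            "eventSource": ["sagemaker.amazonaws.com"],
            "eventName": ["CreateUserProfile"],
        },
    }


def parse_event(event):
    """Return (domain_id, user_profile_name) from the matched event.

    Assumption: CloudTrail stores the request fields in camelCase.
    """
    params = event["detail"]["requestParameters"]
    return params["domainId"], params["userProfileName"]


# The pattern is passed to EventBridge as a JSON string.
pattern_json = json.dumps(build_event_pattern())
```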

Lambda function

Lastly, the template creates a Lambda function that creates a directory in the EFS file system with the required POSIX permissions for the user profile and updates the user profile with the new file system configuration.

At a POSIX permissions level, you can control which users can access the file system and which files or data they can access. The POSIX user and group ID for SageMaker apps are:

- UID – The POSIX user ID. The default is 200001. The valid range is 10000 to 4000000.

- GID – The POSIX group ID. The default is 1001. The valid range is 1001 to 4000000.

The Lambda function is in the same VPC as the EFS file system, and the file system and access point created previously are attached to it.
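The following is a minimal sketch of the two things the function does, not the code shipped in the template. It assumes the file system is mounted in the function at /mnt/efs through an access point rooted at /, so a directory created at /mnt/efs/NAME is visible to SageMaker Studio as /NAME. The helper names are hypothetical. The call to update_user_profile uses the CustomPosixUserConfig and CustomFileSystemConfigs fields of UserSettings.

```python
import os

DEFAULT_UID = 200001  # default POSIX user ID for SageMaker apps
DEFAULT_GID = 1001    # default POSIX group ID for SageMaker apps


def create_user_directory(mount_root, user_profile_name, uid=DEFAULT_UID, gid=DEFAULT_GID):
    """Create the per-profile directory under the local EFS mount and set its owner."""
    path = os.path.join(mount_root, user_profile_name)
    os.makedirs(path, exist_ok=True)
    os.chown(path, uid, gid)  # changing the owner relies on the root access point
    return path


def build_user_settings(efs_id, efs_path, uid=DEFAULT_UID, gid=DEFAULT_GID):
    """Build the UserSettings payload that attaches the EFS directory to a profile."""
    return {
        "CustomPosixUserConfig": {"Uid": uid, "Gid": gid},
        "CustomFileSystemConfigs": [
            {"EFSFileSystemConfig": {"FileSystemId": efs_id, "FileSystemPath": efs_path}}
        ],
    }


def provision_profile(sm_client, efs_id, domain_id, user_profile_name,
                      mount_root="/mnt/efs", uid=DEFAULT_UID, gid=DEFAULT_GID):
    """Create the directory, then point the user profile at it."""
    create_user_directory(mount_root, user_profile_name, uid, gid)
    # Assumption: the access point root is "/", so the EFS path is "/<profile name>".
    settings = build_user_settings(efs_id, "/" + user_profile_name, uid, gid)
    return sm_client.update_user_profile(
        DomainId=domain_id,
        UserProfileName=user_profile_name,
        UserSettings=settings,
    )
```

Passing the SageMaker client in as an argument keeps the helpers easy to exercise locally with a stub; inside Lambda, the client would come from `boto3.client("sagemaker")`.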

Adapt the solution for preexisting SageMaker Studio domain user profiles

We can reuse the previous solution for scenarios in which the domain already has user profiles created. For that, you can create an additional Lambda function in Python that lists all the user profiles for the given SageMaker Studio domain and creates a dedicated EFS directory for each user profile.

The Lambda function should be in the same VPC as the EFS file system, with the file system and access point created previously attached to it. You need to add the efs_id and domain_id values as environment variables for the function.

You can include the following code as part of this new Lambda function and run it manually:
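The original listing is not reproduced here. As a hedged sketch of the approach, the code below pages through list_user_profiles for the domain and hands each profile name to a provisioning callback. The function names are hypothetical, and the callback stands in for whatever logic creates the EFS directory and updates the profile.

```python
def list_user_profile_names(sm_client, domain_id):
    """Return the names of all user profiles in the domain, following pagination."""
    names, token = [], None
    while True:
        kwargs = {"DomainIdEquals": domain_id}
        if token:
            kwargs["NextToken"] = token
        page = sm_client.list_user_profiles(**kwargs)
        names.extend(profile["UserProfileName"] for profile in page["UserProfiles"])
        token = page.get("NextToken")
        if not token:
            return names


def provision_existing_profiles(sm_client, domain_id, provision):
    """Call provision(domain_id, name) for every existing profile.

    provision is a hypothetical callable that creates the EFS directory
    and updates the profile with the file system configuration.
    """
    processed = []
    for name in list_user_profile_names(sm_client, domain_id):
        provision(domain_id, name)
        processed.append(name)
    return processed
```

In a Lambda handler, domain_id would typically be read from the domain_id environment variable mentioned above.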

Configure an EFS directory shared across all spaces of a given domain

In this scenario, an administrator wants to provision an EFS file system for all users of a SageMaker Studio domain, using the same file system directory for all the users.

To achieve this, in addition to the prerequisites described earlier in this post, you need to complete the following steps.

Create the EFS file system

The file system needs to be in the same VPC as the SageMaker Studio domain. Refer to Creating EFS file systems for additional information.

Add mount targets to the EFS file system

Before SageMaker Studio can access the new EFS file system, the file system must have a mount target in each of the subnets associated with the domain. For more information about assigning mount targets to subnets, see Managing mount targets. You can get the subnets associated to the domain on the SageMaker Studio console under Network. You need to create a mount target for each subnet.

Additionally, for each mount target, you must add the security group that SageMaker created in your AWS account when you created the SageMaker Studio domain. The security group name has the format security-group-for-inbound-nfs-domain-id.

The following screenshot shows an example of an EFS file system with two mount targets for a SageMaker Studio domain associated to two subnets. Note the security group associated to both mount targets.
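The mount target steps can also be scripted. The sketch below is one possible way to do it with boto3 clients passed in by the caller; the function names are hypothetical. It looks up the domain's subnets with describe_domain, finds the security group by the name format given above, and creates one mount target per subnet.

```python
def nfs_security_group_name(domain_id):
    """Name of the inbound NFS security group that SageMaker created for the domain."""
    return f"security-group-for-inbound-nfs-{domain_id}"


def create_mount_targets(sm_client, ec2_client, efs_client, domain_id, efs_id):
    """Create a mount target in each subnet of the domain and return their IDs."""
    domain = sm_client.describe_domain(DomainId=domain_id)
    groups = ec2_client.describe_security_groups(
        Filters=[
            {"Name": "group-name", "Values": [nfs_security_group_name(domain_id)]},
            {"Name": "vpc-id", "Values": [domain["VpcId"]]},
        ]
    )["SecurityGroups"]
    security_group_id = groups[0]["GroupId"]

    created = []
    for subnet_id in domain["SubnetIds"]:
        response = efs_client.create_mount_target(
            FileSystemId=efs_id,
            SubnetId=subnet_id,
            SecurityGroups=[security_group_id],
        )
        created.append(response["MountTargetId"])
    return created
```

Note that EFS accepts a single mount target per Availability Zone, so this loop would need adjusting if two of the domain's subnets share a zone.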

Create an EFS access point

The Lambda function accesses the EFS file system as root using this access point. See Creating access points for additional information.
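For illustration, an access point that gives the function root access could be requested as in the sketch below. The function names are hypothetical. Using UID and GID 0 with the root directory / is an assumption about what "as root" means for this setup.

```python
def build_root_access_point_request(efs_id):
    """Arguments for an access point that maps every request to the root user."""
    return {
        "FileSystemId": efs_id,
        "PosixUser": {"Uid": 0, "Gid": 0},   # assumption: root identity for the function
        "RootDirectory": {"Path": "/"},      # expose the whole file system
    }


def create_root_access_point(efs_client, efs_id):
    """Create the access point and return its ARN, which the Lambda function references."""
    response = efs_client.create_access_point(**build_root_access_point_request(efs_id))
    return response["AccessPointArn"]
```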

Create a new Lambda function

Define a new Lambda function with the name LambdaManageEFSUsers. This function updates the default space settings of the SageMaker Studio domain, configuring the file system settings to use a specific EFS file system shared repository path. This configuration is automatically applied to all spaces within the domain.

The Lambda function is in the same VPC as the EFS file system, and the file system and access point created previously are attached to it. Additionally, you need to add efs_id and domain_id as environment variables for the function.

At a POSIX permissions level, you can control which users can access the file system and which files or data they can access. The POSIX user and group ID for SageMaker apps are:

- UID – The POSIX user ID. The default is 200001.

- GID – The POSIX group ID. The default is 1001.

The function updates the default space settings of the SageMaker Studio domain, configuring the EFS file system to be used by all users. See the following code:
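The original listing is not reproduced here, so what follows is only a sketch of the idea. It assumes the file system is mounted in the function at /mnt/efs through an access point rooted at /, uses a hypothetical directory name (shared), and sets CustomFileSystemConfigs under DefaultUserSettings in update_domain. DefaultSpaceSettings accepts the same CustomFileSystemConfigs structure, so adjust the field to match your setup.

```python
import os


def create_shared_directory(mount_root, directory_name, uid=200001, gid=1001):
    """Create the shared directory under the local EFS mount, owned by the SageMaker user."""
    path = os.path.join(mount_root, directory_name)
    os.makedirs(path, exist_ok=True)
    os.chown(path, uid, gid)
    return path


def update_domain_file_system(sm_client, domain_id, efs_id, efs_path):
    """Attach the shared EFS path to the domain defaults."""
    return sm_client.update_domain(
        DomainId=domain_id,
        # Assumption: the defaults are set through DefaultUserSettings.
        DefaultUserSettings={
            "CustomFileSystemConfigs": [
                {"EFSFileSystemConfig": {"FileSystemId": efs_id, "FileSystemPath": efs_path}}
            ]
        },
    )


def lambda_handler(event, context):
    import boto3  # imported lazily so the helpers can be exercised without AWS access

    create_shared_directory("/mnt/efs", "shared")  # "shared" is a hypothetical name
    return update_domain_file_system(
        boto3.client("sagemaker"),
        os.environ["domain_id"],
        os.environ["efs_id"],
        "/shared",
    )
```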

The execution role of the Lambda function needs to have permissions to update the SageMaker Studio domain:
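As an illustration only, a policy statement granting that permission might look like the following, expressed as a Python dictionary that can be serialized to JSON. The function name is hypothetical, and scoping the resource to a single domain ARN is a choice made for this sketch, not a requirement from this post.

```python
import json


def build_update_domain_policy(region, account_id, domain_id):
    """IAM policy document allowing UpdateDomain on one SageMaker Studio domain."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["sagemaker:UpdateDomain"],
                "Resource": f"arn:aws:sagemaker:{region}:{account_id}:domain/{domain_id}",
            }
        ],
    }


# Placeholder Region, account ID, and domain ID for illustration.
policy_json = json.dumps(build_update_domain_policy("us-east-1", "111122223333", "d-example"), indent=2)
```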

Configure an EFS directory shared across multiple domains under the same VPC

In this scenario, an administrator wants to provision an EFS file system for all users of multiple SageMaker Studio domains, using the same file system directory for all the users. The idea in this case is to assign the same EFS file system to all users of all domains that are within the same VPC. To test the solution, the account should ideally have two SageMaker Studio domains inside the VPC and subnet.

Create the EFS file system, add mount targets, and create an access point

Complete the steps in the previous section to set up your file system, mount targets, and access point.

Create a new Lambda function

Define a Lambda function called LambdaManageEFSUsers. This function is responsible for automating the configuration of SageMaker Studio domains to use a shared EFS file system within a specific VPC. This can be useful for organizations that want to provide a centralized storage solution for their ML projects across multiple SageMaker Studio domains. See the following code:
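The original listing is not reproduced here. One way such a function could work, sketched under assumptions, is to list the domains in the account, keep those whose VpcId matches the target VPC, and apply the same file system configuration to each. The function names are hypothetical, and setting the configuration through DefaultUserSettings is an assumption.

```python
def domains_in_vpc(sm_client, vpc_id):
    """Return the IDs of all SageMaker Studio domains attached to the given VPC."""
    matches, token = [], None
    while True:
        kwargs = {"NextToken": token} if token else {}
        page = sm_client.list_domains(**kwargs)
        for domain in page["Domains"]:
            details = sm_client.describe_domain(DomainId=domain["DomainId"])
            if details.get("VpcId") == vpc_id:
                matches.append(domain["DomainId"])
        token = page.get("NextToken")
        if not token:
            return matches


def share_efs_across_domains(sm_client, vpc_id, efs_id, efs_path):
    """Point every domain in the VPC at the same EFS path; return the updated domain IDs."""
    updated = []
    for domain_id in domains_in_vpc(sm_client, vpc_id):
        sm_client.update_domain(
            DomainId=domain_id,
            # Assumption: the defaults are set through DefaultUserSettings.
            DefaultUserSettings={
                "CustomFileSystemConfigs": [
                    {"EFSFileSystemConfig": {"FileSystemId": efs_id, "FileSystemPath": efs_path}}
                ]
            },
        )
        updated.append(domain_id)
    return updated
```

The shared directory itself still has to exist on the file system with the expected POSIX ownership before users open their spaces.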

The execution role of the Lambda function needs to have permissions to describe and update the SageMaker Studio domain:
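A possible shape for these permissions is sketched below as a Python dictionary. The function name is hypothetical. The sagemaker:ListDomains statement is an addition that only applies if the function enumerates domains itself, and the wildcard over all domains in the account is an assumption that you may want to narrow.

```python
import json


def build_multi_domain_policy(region, account_id):
    """IAM policy document for describing and updating the domains in one account and Region."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["sagemaker:DescribeDomain", "sagemaker:UpdateDomain"],
                "Resource": f"arn:aws:sagemaker:{region}:{account_id}:domain/*",
            },
            {
                # Assumption: only needed when the function lists domains itself.
                "Effect": "Allow",
                "Action": ["sagemaker:ListDomains"],
                "Resource": "*",
            },
        ],
    }


# Placeholder Region and account ID for illustration.
multi_domain_policy_json = json.dumps(build_multi_domain_policy("us-east-1", "111122223333"), indent=2)
```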

Clean up

To clean up the solution you implemented and avoid further costs, delete the CloudFormation stack you deployed in your AWS account. When you delete the stack, you also delete the EFS file system and its storage. For additional information, refer to Delete a stack from the CloudFormation console.

Conclusion

In this post, we have explored three scenarios demonstrating the versatility of integrating Amazon EFS with SageMaker Studio. These scenarios highlight how Amazon EFS can provide a scalable, secure, and collaborative data storage solution for data science teams.

The first scenario focused on configuring an EFS directory with private spaces for individual user profiles, allowing users to store and access their own data while the administrator manages the EFS file system centrally.

The second scenario showcased a shared EFS directory across all spaces within a SageMaker Studio domain, enabling better collaboration and centralized data management.

The third scenario explored an EFS file system shared across multiple SageMaker Studio domains, empowering enterprise-level data science collaboration and promoting efficient use of shared resources.

By implementing these Amazon EFS integration scenarios, organizations can unlock the full potential of their data science teams, improve data governance, and enhance the overall efficiency of their data-driven initiatives. The integration of Amazon EFS with SageMaker Studio provides a versatile platform for data science teams to thrive in the evolving landscape of ML and AI.

About the Authors

Irene Arroyo Delgado is an AI/ML and GenAI Specialist Solutions Architect at AWS. She focuses on bringing out the potential of generative AI for each use case and productionizing ML workloads, to achieve customers’ desired business outcomes by automating end-to-end ML lifecycles. In her free time, Irene enjoys traveling and hiking.

Itziar Molina Fernandez is an AI/ML Consultant in the AWS Professional Services team. In her role, she works with customers building large-scale machine learning platforms and generative AI use cases on AWS. In her free time, she enjoys exploring new places.

Matteo Amadei is a Data Scientist Consultant in the AWS Professional Services team. He uses his expertise in artificial intelligence and advanced analytics to extract valuable insights and drive meaningful business outcomes for customers. He has worked on a wide range of projects spanning NLP, computer vision, and generative AI. He also has experience with building end-to-end MLOps pipelines to productionize analytical models. In his free time, Matteo enjoys traveling and reading.

Giuseppe Angelo Porcelli is a Principal Machine Learning Specialist Solutions Architect for Amazon Web Services. With several years of software engineering and an ML background, he works with customers of any size to understand their business and technical needs and design AI and ML solutions that make the best use of the AWS Cloud and the Amazon Machine Learning stack. He has worked on projects in different domains, including MLOps, computer vision, and NLP, involving a broad set of AWS services. In his free time, Giuseppe enjoys playing football.
