Google AI Introduces Gemma-APS: A Collection of Gemma Models for Text-to-Propositions Segmentation

The increasing reliance on machine learning models for processing human language comes with several hurdles: accurately understanding complex sentences, segmenting content into comprehensible parts, and capturing contextual nuances across multiple domains. As a result, there is growing demand for models that can break intricate text into manageable, proposition-level components. This capability is particularly useful for improving language models used in summarization, information retrieval, and other NLP tasks.

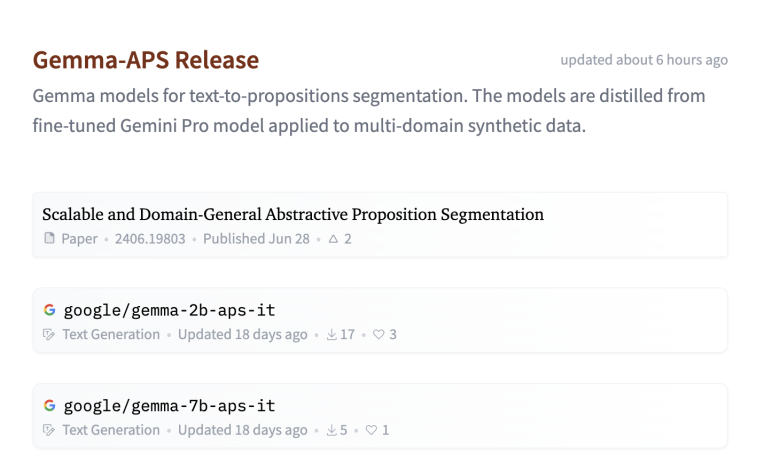

Google AI has released Gemma-APS, a collection of Gemma models for text-to-propositions segmentation. The models are distilled from fine-tuned Gemini Pro models applied to multi-domain synthetic data, which includes text generated to simulate different scenarios and levels of language complexity. Training on synthetic data exposes the models to diverse sentence structures and domains, which is intended to make them adaptable across multiple applications. Gemma-APS models are designed to convert continuous text into smaller proposition units, making it easier to use in subsequent NLP tasks such as sentiment analysis, chatbot applications, or retrieval-augmented generation (RAG). With this release, Google AI aims to make text segmentation more accessible, with models sized to run on varied computational resources.
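To make the idea of proposition units concrete, here is a minimal Python sketch of how a retrieval step might work over propositions instead of whole passages. The propositions below are hand-written for illustration and are not actual Gemma-APS output. The helper names (`tokenize`, `jaccard`, `best_proposition`) and the word-overlap scoring are assumptions chosen for simplicity; a real RAG system would more likely use embeddings.

```python
import re

# Illustrative passage and a hand-written decomposition into propositions.
# NOTE: these propositions are an assumption for demonstration purposes,
# not output produced by a Gemma-APS model.
PASSAGE = (
    "Gemma-APS was released by Google AI and is available on Hugging Face "
    "in 2B and 7B sizes."
)
PROPOSITIONS = [
    "Gemma-APS was released by Google AI.",
    "Gemma-APS is available on Hugging Face.",
    "Gemma-APS is available in a 2B size.",
    "Gemma-APS is available in a 7B size.",
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(query, proposition):
    """Jaccard similarity between the token sets of two strings."""
    q, p = tokenize(query), tokenize(proposition)
    if not q or not p:
        return 0.0
    return len(q & p) / len(q | p)


def best_proposition(query, propositions):
    """Return the proposition with the highest word overlap with the query."""
    return max(propositions, key=lambda prop: jaccard(query, prop))


if __name__ == "__main__":
    for question in ["Who released Gemma-APS?", "Is there a 7B size?"]:
        print(question, "->", best_proposition(question, PROPOSITIONS))
```

Because each proposition carries a single self-contained fact, the retriever can return just the statement that answers the question rather than the whole passage.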

Technically, Gemma-APS models are distilled from Gemini Pro models that were fine-tuned for multi-domain text analysis. Distillation compresses these larger models into smaller, more efficient versions while aiming to preserve their segmentation quality. The models are available on Hugging Face as Gemma-7B-APS-IT and Gemma-2B-APS-IT, catering to different needs in terms of computational efficiency and accuracy. The use of multi-domain synthetic data exposes the models to a broad spectrum of language inputs, which is intended to improve their robustness and adaptability. As a result, Gemma-APS models can segment complex texts into meaningful propositions that encapsulate the underlying information, a feature that benefits downstream tasks like summarization, comprehension, and classification.

The importance of Gemma-APS lies both in its versatility and in its performance across diverse datasets. Google AI has leveraged synthetic data from multiple domains to train these models, targeting real-world applications such as technical document parsing, customer service interactions, and knowledge extraction from unstructured texts. Initial evaluations reportedly show that Gemma-APS outperforms previous segmentation models in terms of accuracy and computational efficiency. For instance, it is described as better at capturing propositional boundaries within complex sentences, which helps subsequent language models work more effectively. This also reduces the risk of semantic drift during text analysis, which matters for applications that must retain the original meaning of each text fragment.

In conclusion, Google AI's release of Gemma-APS is a notable step in the evolution of text segmentation technologies. By combining distillation with multi-domain synthetic training data, these models offer a blend of performance and efficiency that addresses several existing limitations in NLP applications. They could change how language models interpret and break down complex texts, allowing for more effective information retrieval and summarization across multiple domains.

Shobha is a data analyst with a proven track record of developing innovative machine-learning solutions that drive business value.