Data Labeling Strategies for Fine-tuning LLMs

Large language models (LLMs) such as GPT-4, Llama, and Gemini are some of the most significant advancements in the field of artificial intelligence (AI), and their ability to understand and generate human language is transforming the way that humans communicate with machines. LLMs are pretrained on vast amounts of text data, enabling them to recognize language structure and semantics, as well as build a broad knowledge base that covers a wide range of topics. This generalized information can be used to drive a range of applications, including virtual assistants, text or code autocompletion, and text summarization; however, many fields require more specialized knowledge and expertise.

A domain-specific language model can be implemented in two ways: building the model from scratch, or fine-tuning a pretrained LLM. Building a model from scratch is a computationally and financially expensive process that requires huge amounts of data, but fine-tuning can be done with smaller datasets. In the fine-tuning process, an LLM undergoes additional training using domain-specific datasets that are curated and labeled by subject matter experts with a deep understanding of the field. While pretraining gives the LLM general knowledge and linguistic capabilities, fine-tuning imparts more specialized skills and expertise.

LLMs can be fine-tuned for most industries or domains; the key requirement is high-quality training data with accurate labeling. Through my experience developing LLMs and machine learning (ML) tools for universities and clients across industries like finance and insurance, I’ve gathered several proven best practices and identified common pitfalls to avoid when labeling data for fine-tuning ML models. Data labeling plays a major role in computer vision (CV) and audio processing, but for this guide, I focus on LLMs and natural language processing (NLP) data labeling, including a walkthrough of how to label data for the fine-tuning of OpenAI’s GPT-4o.

What Are Fine-tuned LLMs?

LLMs are a type of foundation model, which is a general-purpose machine learning model capable of performing a broad range of tasks. Fine-tuned LLMs are models that have received further training, making them more useful for specialized industries and tasks. LLMs are trained on language data and have an exceptional command of syntax, semantics, and context; even though they are extremely versatile, they might underperform with more specialized tasks where domain expertise is required. For these applications, the foundation LLM can be fine-tuned using smaller labeled datasets that focus on specific domains. Fine-tuning leverages supervised learning, a category of machine learning where the model is shown both the input object and the desired output value (the annotations). These prompt-response pairs enable the model to learn the relationship between the input and output so that it can make similar predictions on unseen data.

Fine-tuned LLMs have already proven to be invaluable in streamlining products and services across a number of industries:

- Healthcare: HCA Healthcare, one of the largest hospital networks in the US, uses Google’s MedLM for transcriptions of doctor-patient interactions in emergency rooms and reading electronic health records to identify important points. MedLM is a series of models that are fine-tuned for the healthcare industry. MedLM is based on Med-PaLM 2, the first LLM to reach expert-level performance (85%+) on questions similar to those found on the US Medical Licensing Examination (USMLE).

- Finance: Institutions such as Morgan Stanley, Bank of America, and Goldman Sachs use fine-tuned LLMs to analyze market trends, parse financial documents, and detect fraud. FinGPT, an open-source LLM that aims to democratize financial data, is fine-tuned on financial news and social media posts, making it highly effective at sentiment analysis. FinBERT is another open-source model fine-tuned on financial data, and designed for financial sentiment analysis.

- Legal: While a fine-tuned LLM can’t replace human lawyers, it can help them with legal research and contract analysis. Casetext’s CoCounsel is an AI legal assistant that automates many of the tasks that slow down the legal process, such as analyzing and drafting legal documents. CoCounsel is powered by GPT-4 and fine-tuned with all of the information in Casetext’s legal database.

When compared with foundation LLMs, fine-tuned LLMs show considerable improvements with inputs in their specialized domains—but the quality of the training data is paramount. The fine-tuning data for CoCounsel, for example, was based on approximately 30,000 legal questions refined by a team of lawyers, domain experts, and AI engineers over a period of six months. It was deemed ready for launch only after about 4,000 hours of work. Although CoCounsel has already been released commercially, it continues to be fine-tuned and improved—a key step in keeping any model up to date.

The Data Labeling Process

The annotations required for fine-tuning consist of instruction-expected response pairs, where each input corresponds with an expected output. While selecting and labeling data may seem like a straightforward process, several considerations add to the complexity. The data should be clear and well defined; it must also be relevant, yet cover a comprehensive range of potential interactions. This includes scenarios that may have a high level of ambiguity, such as performing sentiment analysis on product reviews that are sarcastic in nature. In general, the more data a model is trained on, the better; however, when collecting LLM training data, care should be taken to ensure that it is representative of a broad range of contexts and linguistic nuances.

Once the data is collected, it typically requires cleaning and preprocessing to remove noise and inconsistencies. Duplicate records and outliers are removed, and missing values are substituted via imputation. Unintelligible text is also flagged for investigation or removal.
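
As a rough illustration of this cleaning step, the following sketch normalizes whitespace, drops duplicates, and flags text that looks unintelligible. The function name `clean_records` and the 50% readability threshold are assumptions made for this example, not part of any particular tool; a real pipeline would tune the heuristic to its own data.

```python
import re
import unicodedata


def clean_records(records):
    """Normalize text, drop duplicates, and flag likely-unintelligible entries."""
    seen = set()
    kept, flagged = [], []
    for text in records:
        # Normalize Unicode forms and collapse runs of whitespace.
        norm = unicodedata.normalize("NFKC", text)
        norm = re.sub(r"\s+", " ", norm).strip()
        if not norm:
            continue  # drop empty records
        key = norm.casefold()
        if key in seen:
            continue  # drop case-insensitive duplicates
        seen.add(key)
        # Heuristic (an assumption): flag text in which fewer than half
        # of the characters are letters or spaces.
        readable = sum(ch.isalpha() or ch.isspace() for ch in norm)
        if readable / len(norm) < 0.5:
            flagged.append(norm)
        else:
            kept.append(norm)
    return kept, flagged


raw = [
    "What does a voltage regulator IC do?",
    "what does a  voltage regulator IC do? ",
    "@@##  1234 %%%% ^^",
    "",
]
kept, flagged = clean_records(raw)
```

Here `kept` holds the single usable question, while the symbol-heavy record lands in `flagged` for a human to investigate rather than being silently deleted.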

At the annotation stage, data is tagged with the appropriate labels. Human annotators play an essential role in the process, as they provide the insight necessary for accurate labels. To take some of the workload off annotators, many labeling platforms offer AI-assisted prelabeling, an automatic data labeling process that creates the initial labels and identifies important words and phrases.

After the data is labeled, the labels undergo validation and quality assurance (QA), a review for accuracy and consistency. Data points that were labeled by multiple annotators are reviewed to achieve consensus. Automated tools can also be used to validate the data and flag any discrepancies. After the QA process, the labeled data is ready to be used for model training.
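
One simple way to reach consensus is a majority vote with an agreement threshold. The sketch below assumes each item was labeled by several annotators; the two-thirds threshold and the item names are illustrative choices, and items that fall below the threshold are routed back for review.

```python
from collections import Counter


def consensus(labels, min_agreement=2 / 3):
    """Return (majority_label, agreement); the label is None below the threshold."""
    counts = Counter(labels)
    label, votes = counts.most_common(1)[0]
    agreement = votes / len(labels)
    return (label if agreement >= min_agreement else None), agreement


# Hypothetical annotations: item ID -> labels from three annotators.
annotations = {
    "review_1": ["positive", "positive", "neutral"],
    "review_2": ["positive", "negative", "neutral"],
}
resolved = {item: consensus(labels)[0] for item, labels in annotations.items()}
needs_review = [item for item, label in resolved.items() if label is None]
```

In this example `review_1` resolves to a positive label, while `review_2` has no majority and is queued for a second look.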

Annotation Guidelines and Standards for NLP

One of the most important early steps in the data annotation workflow is creating a clear set of guidelines and standards for human annotators to follow. Guidelines should be easy to understand and consistent in order to avoid introducing any variability that can confuse the model during training.

Text classification, such as labeling the body of an email as spam, is a common data labeling task. The guidelines for text classification should include clear definitions for each potential category, as well as instructions on how to handle text that may not fit into any category.

When labeling text, annotators often perform named entity recognition (NER), identifying and tagging names of people, organizations, locations, and other proper nouns. The guidelines for NER tasks should list all potential entity types with examples on how to handle them. This includes edge cases, such as partial matches or nested entities.

Annotators are often tasked with labeling the sentiment of text as positive, negative, or neutral. With sentiment analysis, each category should be clearly defined. Because sentiments can often be subtle or mixed, examples should be provided to help annotators distinguish between them. The guidelines should also address potential biases related to gender, race, or cultural context.

Coreference resolution refers to the identification of all expressions that refer to the same entity. The guidelines for coreference resolution should provide instructions on how to track and label entities across different sentences and documents, and specify how to handle pronouns.

With part-of-speech (POS) tagging, annotators label each word with a part of speech, for example, noun, adjective, or verb. For POS tagging, the guidelines should include instructions on how to handle ambiguous words or phrases that could fit into multiple categories.

Because LLM data labeling often involves subjective judgment, detailed guidelines on how to handle ambiguity and borderline cases will help annotators produce consistent and correct labels. One example is Universal NER, a project consisting of multilingual datasets with crowdsourced annotations; its annotation guidelines provide detailed information and examples for each entity type, as well as the best ways to handle ambiguity.

Best Practices for NLP and LLM Data Labeling

Due to the potentially subjective nature of text data, there may be challenges in the annotation process. Many of these challenges can be addressed by following a set of data labeling best practices. Before you start, make sure you have a comprehensive understanding of the problem you are solving for. The more information you have, the better able you will be to create a dataset that covers all edge cases and variations. When recruiting annotators, your vetting process should be equally comprehensive. Data labeling is a task that requires reasoning and insight, as well as strong attention to detail. These additional strategies are highly beneficial to the annotation process:

- Iterative refinement: The dataset can be divided into small subsets and labeled in phases. Through feedback and quality checks, the process and guidelines can be improved between phases, with any potential pitfalls identified and corrected early.

- Divide and conquer approach: Complex tasks can be broken up into steps. With sentiment analysis, words or phrases containing sentiment could be identified first, and the overall sentiment of the paragraph could then be determined using rule-based, model-assisted automation.

Advanced Techniques for NLP and LLM Data Labeling

There are several advanced techniques that can improve the efficiency, accuracy, and scalability of the labeling process. Many of these techniques take advantage of automation and machine learning models to optimize the workload for human annotators, achieving better results with less manual effort.

The manual labeling workload can be reduced by using active learning algorithms, in which pretrained ML models identify the data points that would benefit most from human annotation. These include data points where the model has the lowest confidence in the predicted label (uncertainty sampling), and borderline cases, where the data points fall closest to the decision boundary between two classes (margin sampling).
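
The two selection criteria can be sketched in a few lines. The example assumes a model has already produced class probabilities for each unlabeled item; the `predictions` dictionary and its values are made up for illustration.

```python
def least_confidence(probs):
    """Uncertainty score: 1 minus the probability of the most likely class."""
    return 1.0 - max(probs)


def margin(probs):
    """Gap between the two most likely classes; smaller means more borderline."""
    top, second = sorted(probs, reverse=True)[:2]
    return top - second


def select_for_annotation(predictions, k):
    """Pick the k items with the smallest margin for human labeling."""
    ranked = sorted(predictions, key=lambda item: margin(predictions[item]))
    return ranked[:k]


# Hypothetical class probabilities (positive, negative, neutral) per review.
predictions = {
    "review_1": [0.98, 0.01, 0.01],
    "review_2": [0.40, 0.35, 0.25],
    "review_3": [0.55, 0.44, 0.01],
}
to_label = select_for_annotation(predictions, k=2)
most_uncertain = max(predictions, key=lambda item: least_confidence(predictions[item]))
```

The confidently classified `review_1` is left to the model, and the two borderline reviews are sent to human annotators.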

NER tasks can be streamlined with gazetteers, which are essentially predefined lists of entities and their corresponding types. Using a gazetteer, the identification of common entities can be automated, freeing up humans to focus on the ambiguous data points.
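
A minimal gazetteer-based prelabeler might look like the following sketch. The entries in `GAZETTEER` and the entity type names are hypothetical, and exact string matching is a simplification; real projects usually add normalization and handling for overlapping matches.

```python
import re

# Hypothetical gazetteer: surface form -> entity type.
GAZETTEER = {
    "Texas Instruments": "ORG",
    "Neumann": "ORG",
    "Berlin": "LOC",
}


def prelabel(text, gazetteer=GAZETTEER):
    """Return sorted (start, end, surface, type) spans for exact gazetteer matches."""
    spans = []
    for name, entity_type in gazetteer.items():
        # Word boundaries avoid matching inside longer words.
        for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            spans.append((match.start(), match.end(), match.group(), entity_type))
    return sorted(spans)


text = "Neumann built microphones in Berlin."
spans = prelabel(text)
```

Each span carries character offsets, so the suggestions can be loaded into a labeling tool as prelabels for annotators to accept or correct.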

Longer text passages can be shortened via text summarization. Using an ML model to highlight key sentences or summarize longer passages can reduce the amount of time it takes for human annotators to perform sentiment analysis or text classification.

The training dataset can be expanded with data augmentation. Synthetic data can be automatically generated through paraphrasing, back translation, and replacing words with synonyms. A generative adversarial network (GAN) can also be used to generate data points that mimic a given dataset. These techniques enhance the training dataset, making the resulting model substantially more robust, with minimal additional manual labeling.
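
Synonym replacement is the simplest of these augmentation techniques to sketch. The `SYNONYMS` table below is a hypothetical, hand-written stand-in for a real thesaurus or lexical database, and the fixed seed is only there to make the example repeatable.

```python
import random
import re

# Hypothetical synonym table; a real project would use a larger lexical resource.
SYNONYMS = {
    "reliable": ["dependable", "trustworthy"],
    "fast": ["quick", "rapid"],
}


def augment(sentence, synonyms=SYNONYMS, seed=0):
    """Return a copy of the sentence with known words swapped for a random synonym."""
    rng = random.Random(seed)

    def swap(match):
        word = match.group(0)
        options = synonyms.get(word.lower())
        return rng.choice(options) if options else word

    # Only alphabetic runs are considered, so punctuation is left untouched.
    return re.sub(r"[A-Za-z]+", swap, sentence)


original = "The charger is reliable and fast."
variant = augment(original)
```

The variant keeps the original label (here, a positive review), which is what makes the technique cheap. It also means that a synonym that shifts the meaning will quietly introduce label noise, so spot checks are still worthwhile.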

Weak supervision is a term that covers a variety of techniques used to train models with noisy, inaccurate, or otherwise incomplete data. One type of weak supervision is distant supervision, where existing labeled data from a related task is used to infer relationships in unlabeled data. For example, a product review labeled with a positive sentiment may contain words like “reliable” and “high quality,” which can be used to help determine the sentiment of an unlabeled review. Lexical resources, like a medical dictionary, can also be used to aid in NER. Weak supervision makes it possible to label large datasets very quickly or when manual labeling is too expensive. This comes at the expense of accuracy, however, and if the highest-quality labels are required, human annotators should be involved.
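
The keyword idea above can be sketched as a set of small labeling functions whose votes are combined. The word lists and function names here are assumptions for illustration; dedicated libraries combine such sources with more sophisticated statistical models than the simple majority vote shown.

```python
from collections import Counter

ABSTAIN = None

# Hypothetical cue words, e.g. harvested from reviews that are already labeled.
POSITIVE_CUES = ("reliable", "high quality", "excellent")
NEGATIVE_CUES = ("broken", "refund", "disappointing")


def lf_positive(text):
    """Vote positive if any positive cue appears; otherwise abstain."""
    return "positive" if any(cue in text.lower() for cue in POSITIVE_CUES) else ABSTAIN


def lf_negative(text):
    """Vote negative if any negative cue appears; otherwise abstain."""
    return "negative" if any(cue in text.lower() for cue in NEGATIVE_CUES) else ABSTAIN


def weak_label(text, labeling_functions=(lf_positive, lf_negative)):
    """Combine votes by majority; abstain when there are no votes or a tie."""
    votes = [v for v in (lf(text) for lf in labeling_functions) if v is not ABSTAIN]
    if not votes:
        return ABSTAIN
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN  # conflicting evidence: leave for a human annotator
    return ranked[0][0]
```

A review such as "Reliable and high quality build." receives a positive label automatically, while one that triggers both cue lists is left unlabeled for human review.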

Finally, with the availability of modern “benchmark” LLMs such as GPT-4, the annotation process can be completely automated with LLM-generated labels, meaning that the response for an instruction-expected response pair is generated by the LLM. For example, a product review could be input into the LLM along with instructions to classify whether the sentiment of the review is positive, negative, or neutral, creating a labeled data point that can be used to train another LLM. In many cases, the entire process can be automated, with the instructions also generated by the LLM. Though data labeling with a benchmark LLM can make the process faster, it will not give the fine-tuned model knowledge beyond what the LLM already has. To advance the capabilities of the current generation of ML models, human insight is required.
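
The sentiment example could be wired up roughly as follows. To keep the sketch independent of any vendor SDK, `complete` is a hypothetical callable that takes a prompt string and returns the model’s text reply; in practice it would wrap a call to whichever LLM API is being used. The prompt wording is also just an assumption.

```python
ALLOWED_LABELS = ("positive", "negative", "neutral")


def build_prompt(review):
    """Build the classification instruction for one product review."""
    return (
        "Classify the sentiment of the following product review as "
        "positive, negative, or neutral. Reply with one word.\n\n"
        f"Review: {review}"
    )


def label_with_llm(review, complete):
    """Return a labeled training example, or None if the reply is unusable."""
    prompt = build_prompt(review)
    reply = complete(prompt).strip().strip(".").lower()
    if reply not in ALLOWED_LABELS:
        return None  # unexpected output: route to a human annotator
    return {"messages": [
        {"role": "user", "content": prompt},
        {"role": "assistant", "content": reply},
    ]}


def fake_llm(prompt):
    """Stub standing in for a real LLM call so the example runs offline."""
    return "Positive."


example = label_with_llm("Works great and feels well built.", fake_llm)
```

Validating the reply against a fixed label set is a cheap safeguard: anything the benchmark model answers outside that set is discarded rather than written into the training data.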

There are a variety of tools and platforms that make the data labeling workflow more efficient. Smaller, lower-budget projects can take advantage of open-source data labeling software such as Doccano and Label Studio. For larger projects, commercial platforms offer more comprehensive AI-assisted prelabeling; project, team, and QA management tools; dashboards to visualize progress and analytics; and, most importantly, a support team. Some of the more widely used commercial tools include Labelbox, Amazon’s SageMaker Ground Truth, Snorkel Flow, and SuperAnnotate.

Additional tools that can help with data labeling for LLMs include the following:

- Cleanlab uses statistical methods and model analysis to identify and fix issues in datasets, including outliers, duplicates, and label errors. Any issues are highlighted for human review along with suggestions for corrections.

- AugLy is a data augmentation library that supports text, image, audio, and video data. Developed by Meta AI, AugLy provides more than 100 augmentation techniques that can be used to generate synthetic data for model training.

- skweak is an open-source Python library that combines different sources of weak supervision to generate labeled data. It focuses on NLP tasks, and allows users to generate heuristic rules or use pretrained models and distant supervision to perform NER, text classification, and identification of relationships in text.

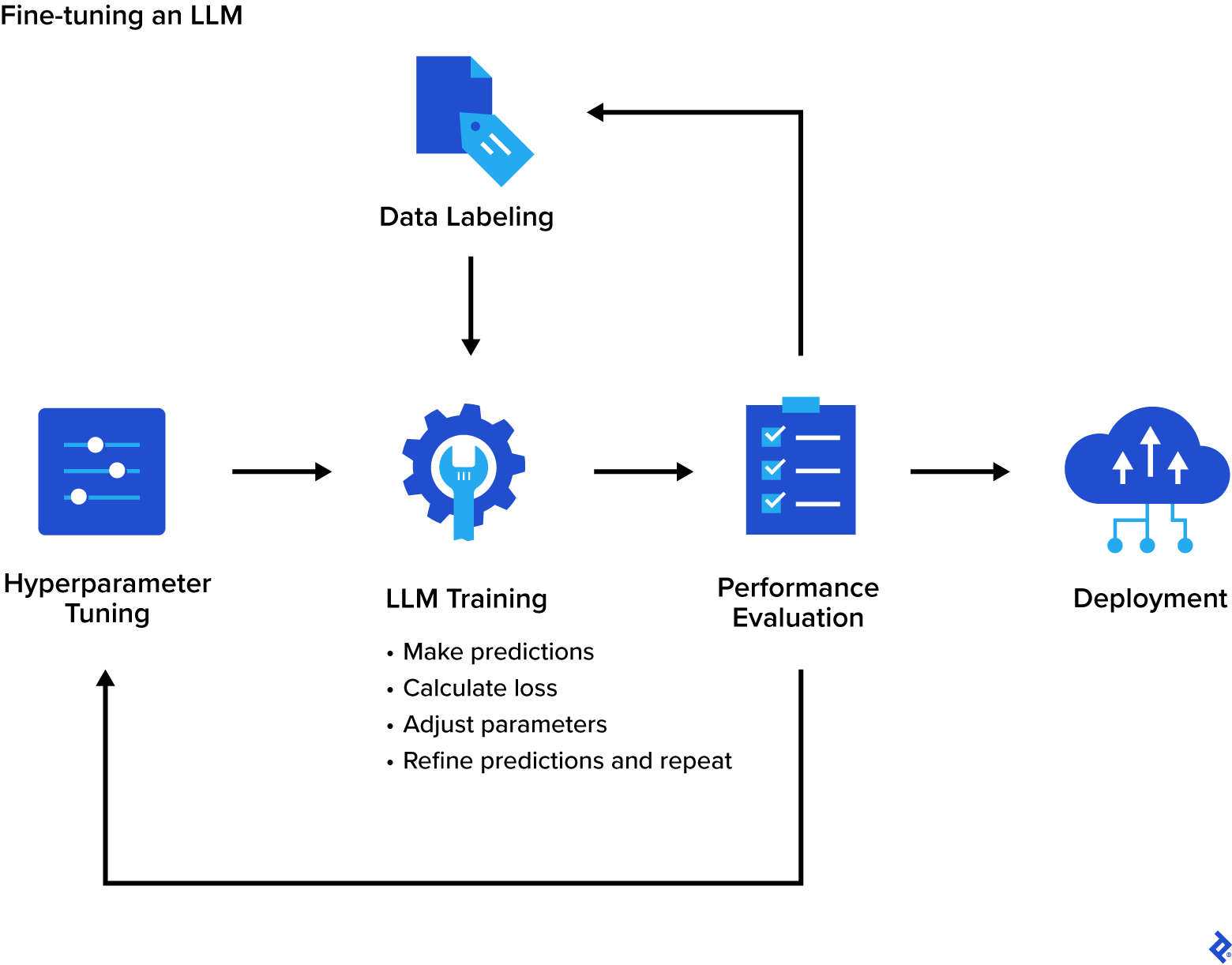

An Overview of the LLM Fine-tuning Process

The first step in the fine-tuning process is selecting the pretrained LLM. There are several sources for pretrained models, including Hugging Face’s Transformers or NLP Cloud, which offer a range of LLMs as well as a platform for training and deployment. Pretrained LLMs can also be obtained from OpenAI, Kaggle, and Google’s TensorFlow Hub.

Training data should generally be large and diverse, covering a wide range of edge cases and ambiguities. A dataset that is too small can lead to overfitting, where the model learns the training dataset too well, and as a result, performs poorly on unseen data. Overfitting can also be caused by training with too many epochs, or complete passes through the dataset. Training data that is not diverse can lead to bias, where the model performs poorly on underrepresented scenarios. Additionally, bias can be introduced by annotators. To minimize bias in the labels, the annotation team should have diverse backgrounds and proper training on how to recognize and reduce their own biases.

Hyperparameter tuning can have a significant impact on the training results. Hyperparameters control how the model learns, and optimizing these settings can prevent undesired outcomes such as overfitting. Some key hyperparameters include the following:

- The learning rate specifies how much the internal parameters (weights and biases) are adjusted at each iteration, essentially determining the speed at which the model learns.

- The batch size specifies the number of training samples used in each iteration.

- The number of epochs specifies how many times the process is run. One epoch is one complete pass through the entire dataset.

Common techniques for hyperparameter tuning include grid search, random search, and Bayesian optimization. Dedicated libraries such as Optuna and Ray Tune are also designed to streamline the hyperparameter tuning process.
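
Grid search, the simplest of these techniques, tries every combination of candidate values and keeps the best one. In the sketch below, `validation_loss` is a made-up stand-in objective so that the example runs instantly; in a real project it would train the model with the given settings and return the loss on a validation set.

```python
import itertools


def validation_loss(learning_rate, batch_size, epochs):
    """Stand-in objective (an assumption): lowest at lr=0.01, batch=16, epochs=3."""
    return (learning_rate - 0.01) ** 2 * 1e4 + abs(batch_size - 16) / 16 + abs(epochs - 3)


def grid_search(objective, grid):
    """Evaluate every combination in the grid and return the best one."""
    names = list(grid)
    best_params, best_score = None, float("inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = objective(**params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


grid = {
    "learning_rate": [0.001, 0.01, 0.1],
    "batch_size": [8, 16, 32],
    "epochs": [1, 3, 5],
}
best_params, best_score = grid_search(validation_loss, grid)
```

This grid has 27 combinations, and the count grows multiplicatively with every added hyperparameter, which is why random search and Bayesian optimization are often preferred when each evaluation means a full training run.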

Once the data is labeled and has gone through the validation and QA process, the actual fine-tuning of the model can begin. In a typical training algorithm, the model generates predictions on batches of data in a step called the forward pass. The predictions are then compared with the labels, and the loss (a measure of how different the predictions are from the actual values) is calculated. Next, the model performs a backward pass, calculating how much each parameter contributed to the loss. Finally, an optimizer, such as Adam or SGD, is used to adjust the model’s internal parameters in order to improve the predictions. These steps are repeated, enabling the model to refine its predictions iteratively until the overall loss is minimized. This training process is typically performed using tools like Hugging Face’s Transformers, NLP Cloud, or Google Colab. The fine-tuned model can be evaluated against performance metrics such as perplexity, METEOR, BERTScore, and BLEU.
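
The forward pass, loss, backward pass, and optimizer step can be seen in miniature in the sketch below. It fits a two-parameter linear model with plain SGD in pure Python, which is a deliberately tiny stand-in: an LLM has billions of parameters and relies on automatic differentiation, but the loop has the same shape. The data and hyperparameter values are invented for the example.

```python
def train(data, learning_rate=0.05, batch_size=2, epochs=300):
    """Fit y = w * x + b with mini-batch SGD on a mean squared error loss."""
    w, b = 0.0, 0.0
    loss = None
    for _ in range(epochs):  # one epoch = one full pass through the data
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Forward pass: generate predictions for the batch.
            preds = [w * x + b for x, _ in batch]
            # Loss: how far the predictions are from the labels.
            errors = [pred - y for pred, (_, y) in zip(preds, batch)]
            loss = sum(e * e for e in errors) / len(batch)
            # Backward pass: gradient of the loss for each parameter.
            grad_w = sum(2 * e * x for e, (x, _) in zip(errors, batch)) / len(batch)
            grad_b = sum(2 * e for e in errors) / len(batch)
            # Optimizer step (plain SGD): move against the gradient.
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b, loss


# Labeled (input, output) pairs generated from y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b, final_loss = train(data)
```

After training, `w` and `b` end up close to 2 and 1, the values used to generate the data, and the loss on the final batch is near zero.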

After the fine-tuning process is complete, the model can be deployed into production. There are a variety of options for the deployment of ML models, including NLP Cloud, Hugging Face’s Model Hub, and Amazon’s SageMaker. ML models can also be deployed on premises using frameworks like Flask or FastAPI. Locally deployed models are often used for development and testing, as well as in applications where data privacy and security are concerns.

Additional challenges when fine-tuning an LLM include data leakage and catastrophic interference:

- Data leakage occurs when information in the training data also appears in the test data, leading to an overly optimistic assessment of model performance. Maintaining strict separation between training, validation, and test data is effective in reducing data leakage.

- Catastrophic interference, or catastrophic forgetting, can occur when a model is trained sequentially on different tasks or datasets. When a model is fine-tuned for a specific task, the new information it learns changes its internal parameters. This change may cause a decrease in performance for more general tasks. Effectively, the model “forgets” some of what it has learned. Research is ongoing on how to prevent catastrophic interference; however, some techniques that can reduce it include elastic weight consolidation (EWC), parameter-efficient fine-tuning (PEFT), and replay-based methods in which old training data is mixed in with the new training data, helping the model to remember previous tasks. Implementing architectures such as progressive neural networks (PNN) can also prevent catastrophic interference.
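
For data leakage in particular, a common safeguard is to deduplicate before splitting and then verify that no prompt appears in both sets. The sketch below is one way to do that; the function names and the simple lowercase-and-whitespace normalization are assumptions, and near-duplicates (paraphrases) would need a fuzzier comparison.

```python
import random


def normalize(text):
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return " ".join(text.lower().split())


def split_without_leakage(examples, test_fraction=0.2, seed=0):
    """Deduplicate on the normalized prompt, then split into train and test."""
    unique = {}
    for prompt, response in examples:
        unique.setdefault(normalize(prompt), (prompt, response))
    items = list(unique.values())
    random.Random(seed).shuffle(items)
    n_test = max(1, int(len(items) * test_fraction))
    return items[n_test:], items[:n_test]


def leaked(train, test):
    """Return test prompts whose normalized form also appears in train."""
    train_keys = {normalize(prompt) for prompt, _ in train}
    return [prompt for prompt, _ in test if normalize(prompt) in train_keys]


# Hypothetical prompt-response pairs, including one near-identical duplicate.
examples = [
    ("What does a voltage regulator IC do?", "It keeps the output voltage steady."),
    ("what does a  voltage regulator IC do?", "It keeps the output voltage steady."),
    ("What is a Wheatstone bridge circuit used for?", "Measuring unknown resistance."),
    ("Classify the following component as active or passive: Capacitor.", "Passive."),
    ("What is the principle of operation of a transformer?", "Electromagnetic induction."),
]
train_set, test_set = split_without_leakage(examples)
```

Running `leaked(train_set, test_set)` returns an empty list here, because the duplicate question was removed before the split was made.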

Fine-tuning GPT-4o With Label Studio

OpenAI currently supports fine-tuning for GPT-3.5 Turbo, GPT-4o, GPT-4o mini, babbage-002, and davinci-002 at its developer platform.

To annotate the training data, we will use the free Community Edition of Label Studio.

First, install Label Studio by running the following command:

pip install label-studio

Label Studio can also be installed using Homebrew, Docker, or from source. Label Studio’s documentation details each of the different methods.

Once installed, start the Label Studio server:

label-studio start

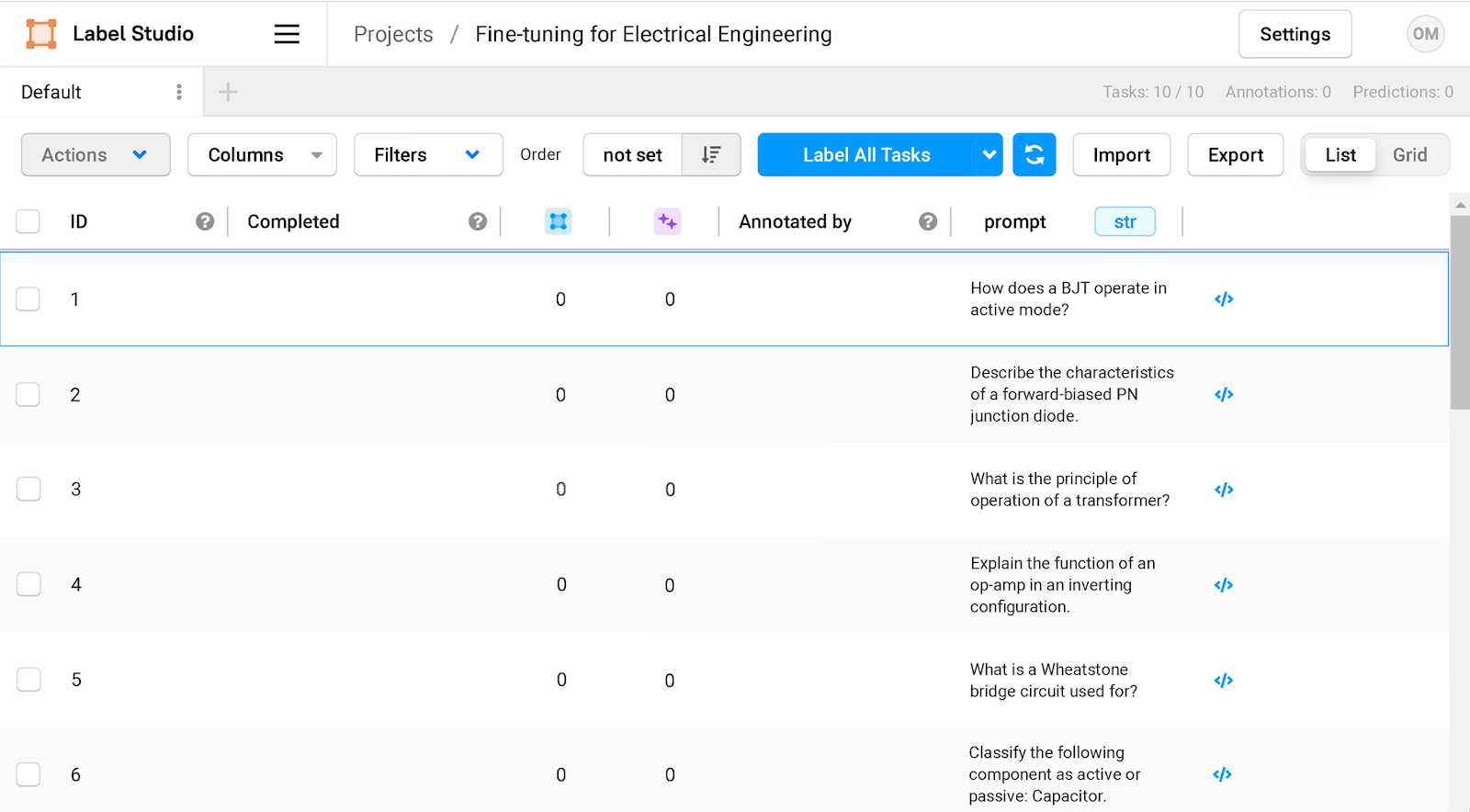

Point your browser to http://localhost:8080 and sign up with an email address and password. Once you have logged in, click the Create button to start a new project. After the new project is created, select the template for fine-tuning by going to Settings > Labeling Interface > Browse Templates > Generative AI > Supervised LLM Fine-tuning.

The initial set of prompts can be imported or added manually. For this fine-tuning project, we will use electrical engineering questions as our prompts:

How does a BJT operate in active mode?

Describe the characteristics of a forward-biased PN junction diode.

What is the principle of operation of a transformer?

Explain the function of an op-amp in an inverting configuration.

What is a Wheatstone bridge circuit used for?

Classify the following component as active or passive: Capacitor.

How do you bias a NE5534 op-amp to Class A operation?

How does a Burr-Brown PCM63 chip convert signals from digital to analog?

What is the history of the Telefunken AC701 tube?

What does a voltage regulator IC do?

The questions appear as a list of tasks in the dashboard.

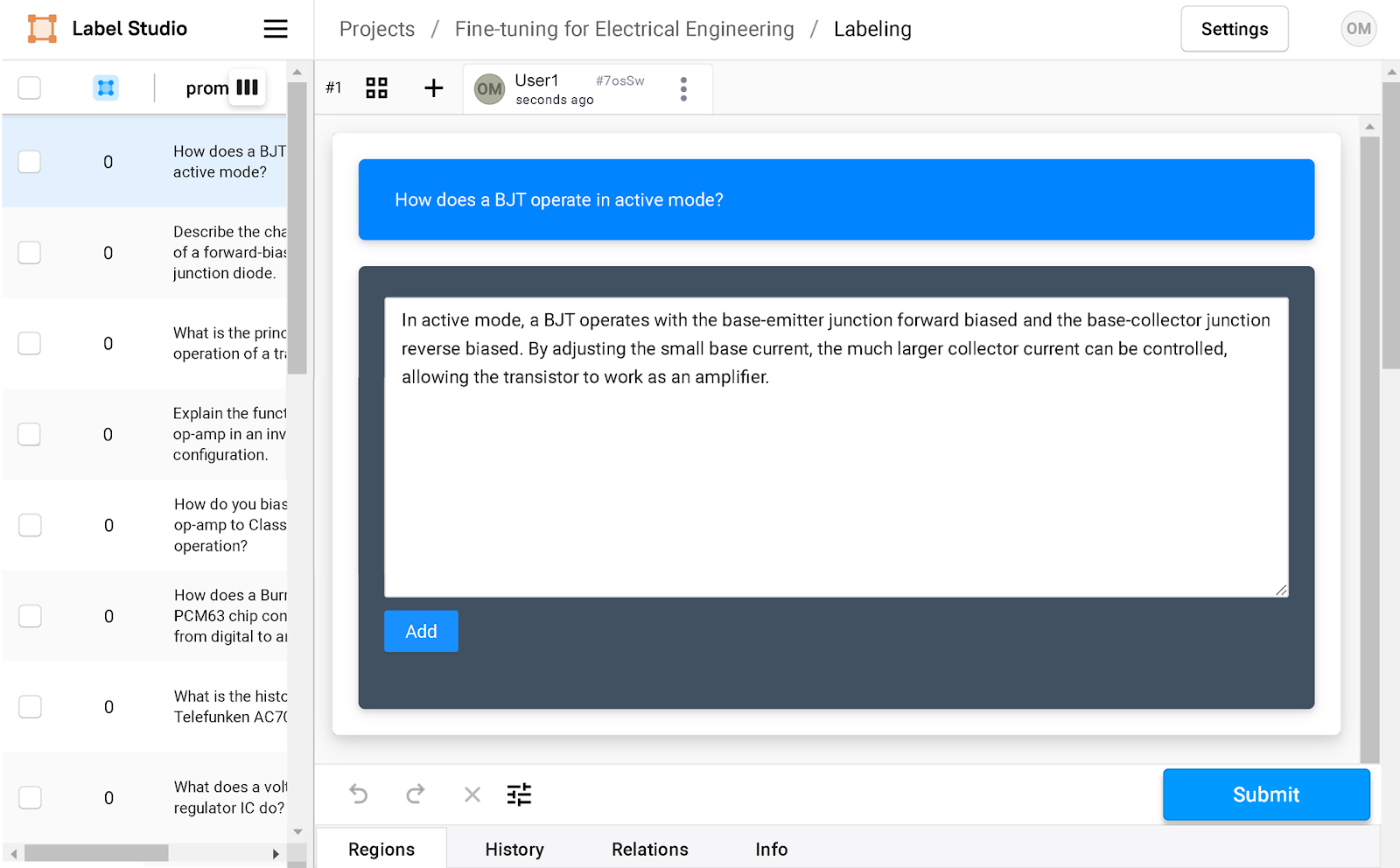

Clicking on each question opens the annotation window, where the expected response can be added.

Once all of the data points are labeled, click the Export button to export your labeled data to a JSON, CSV, or TSV file. In this example, we export to a CSV file. However, to fine-tune GPT-4o, OpenAI requires the format of the training data to be consistent with its Chat Completions API. The data should be structured in JSON Lines (JSONL) format, with each line containing one JSON object that holds a “messages” list. The list can contain multiple messages, each with its own role, either “system,” “user,” or “assistant”:

- System: Content with the system role modifies the behavior of the model. For example, the model can be instructed to adopt a sarcastic personality or write in an action-packed manner. The system role is optional.

- User: Content with the user role contains examples of requests or prompts.

- Assistant: Content with the assistant role gives the model examples of how it should respond to the request or prompt contained in the corresponding user content.

The following is an example of one training record containing an instruction and expected response:

{"messages": [
  {"role": "user", "content": "How does a BJT operate in active mode?"},
  {"role": "assistant", "content": "In active mode, a BJT operates with the base-emitter junction forward biased and the base-collector junction reverse biased. By adjusting the small base current, the much larger collector current can be controlled, allowing the transistor to work as an amplifier."}
]}
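
Before uploading, it can help to sanity-check each line of the JSONL file locally. The checks below are a sketch based only on the structure described above (a messages list whose entries have a role and text content); they are an assumption about what is worth checking, not a reproduction of OpenAI’s own validation.

```python
import json

VALID_ROLES = {"system", "user", "assistant"}


def validate_line(line):
    """Return a list of problems found in one JSONL line (empty if none)."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    messages = record.get("messages") if isinstance(record, dict) else None
    if not isinstance(messages, list) or not messages:
        return ['missing or empty "messages" list']
    problems = []
    for i, msg in enumerate(messages):
        if not isinstance(msg, dict):
            problems.append(f"message {i} is not an object")
            continue
        if msg.get("role") not in VALID_ROLES:
            problems.append(f"message {i} has an unexpected role: {msg.get('role')!r}")
        content = msg.get("content")
        if not isinstance(content, str) or not content.strip():
            problems.append(f"message {i} has empty or non-text content")
    if not any(isinstance(m, dict) and m.get("role") == "assistant" for m in messages):
        problems.append("no assistant message to learn from")
    return problems


good_line = json.dumps({"messages": [
    {"role": "user", "content": "How does a BJT operate in active mode?"},
    {"role": "assistant", "content": "The base-emitter junction is forward biased."},
]})
problems = validate_line(good_line)
```

Running the function over every line of the file and printing the line numbers that return a non-empty list catches most formatting mistakes before a fine-tuning job is started.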

A Python script was created in order to modify the CSV data to have the correct format. The script opens the CSV file that was created by Label Studio and iterates through each row, converting it into the JSONL format:

import json

import pandas as pd  # third-party library: pip install pandas

# engineering-data.csv is the CSV file that Label Studio exported
df = pd.read_csv("C:/datafiles/engineering-data.csv")

# Write one JSON object per line, which is the JSONL format
with open("C:/datafiles/finetune.jsonl", "w") as data_file:
    for _, row in df.iterrows():
        prompt = row["prompt"]  # the question shown to the annotator
        instruction = row["instruction"]  # the expected response, used as the assistant content
        data_file.write(json.dumps(
            {"messages": [
                {"role": "user", "content": prompt},
                {"role": "assistant", "content": instruction}
            ]}))
        data_file.write("\n")

Once the data is ready, it can be used for fine-tuning at platform.openai.com.

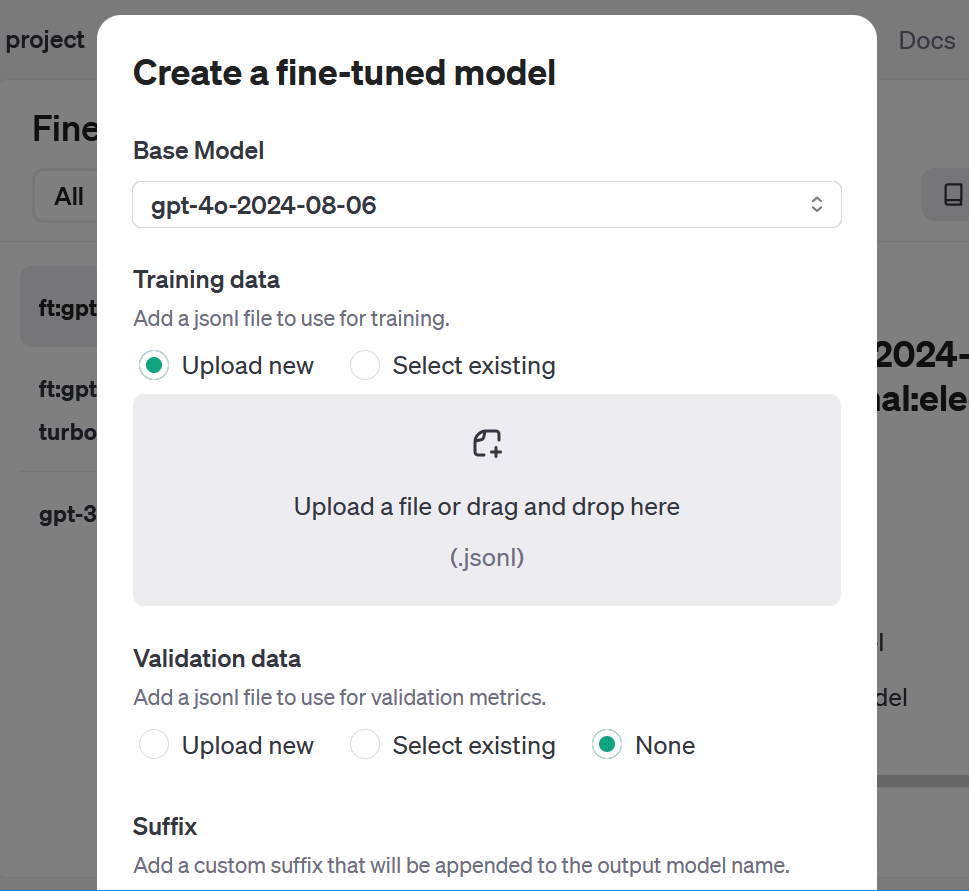

To access the fine-tuning dashboard, click Dashboard at the top and then Fine-tuning on the left navigation menu. Clicking on the Create button brings up an interface that allows you to select the model you want to train, upload the training data, and adjust three hyperparameters: learning rate multiplier, batch size, and number of epochs. The most current model, gpt-4o-2024-08-06, was selected for this test. The hyperparameters were left on their default setting of Auto. OpenAI also lets you add a suffix to help differentiate your fine-tuned models. For this test, the suffix was set to “electricalengineer.”

The fine-tuning process for GPT-4o, including validation of training data and evaluation of the completed model, lasted approximately three hours and resulted in 8,700 trained tokens. In contrast, GPT-4o mini, a smaller and more cost-efficient model, completed the fine-tuning process in just 10 minutes.

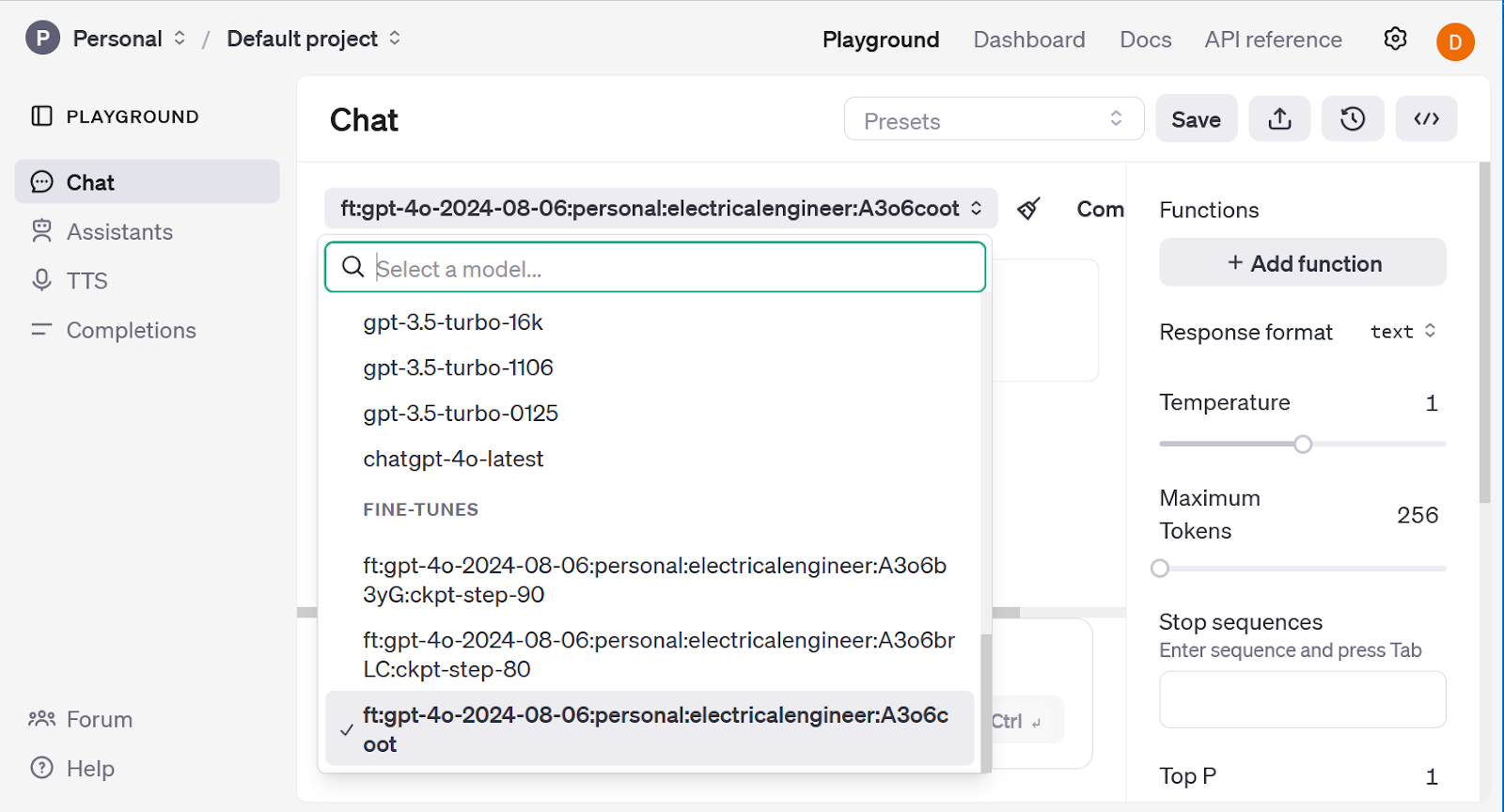

The results can be tested by clicking the Playground link. Clicking on the gray drop-down menu near the top of the page shows you the available models, including the fine-tuned model. Also included are additional models that represent checkpoints during the last three epochs of the training. These models can be used for various purposes, including in cases of overfitting; models at previous checkpoints can be tested to determine when the overfitting occurred.

To test the results of the fine-tuning process, the model was set to gpt-4o and asked an obscure question that it might not have the answer to:

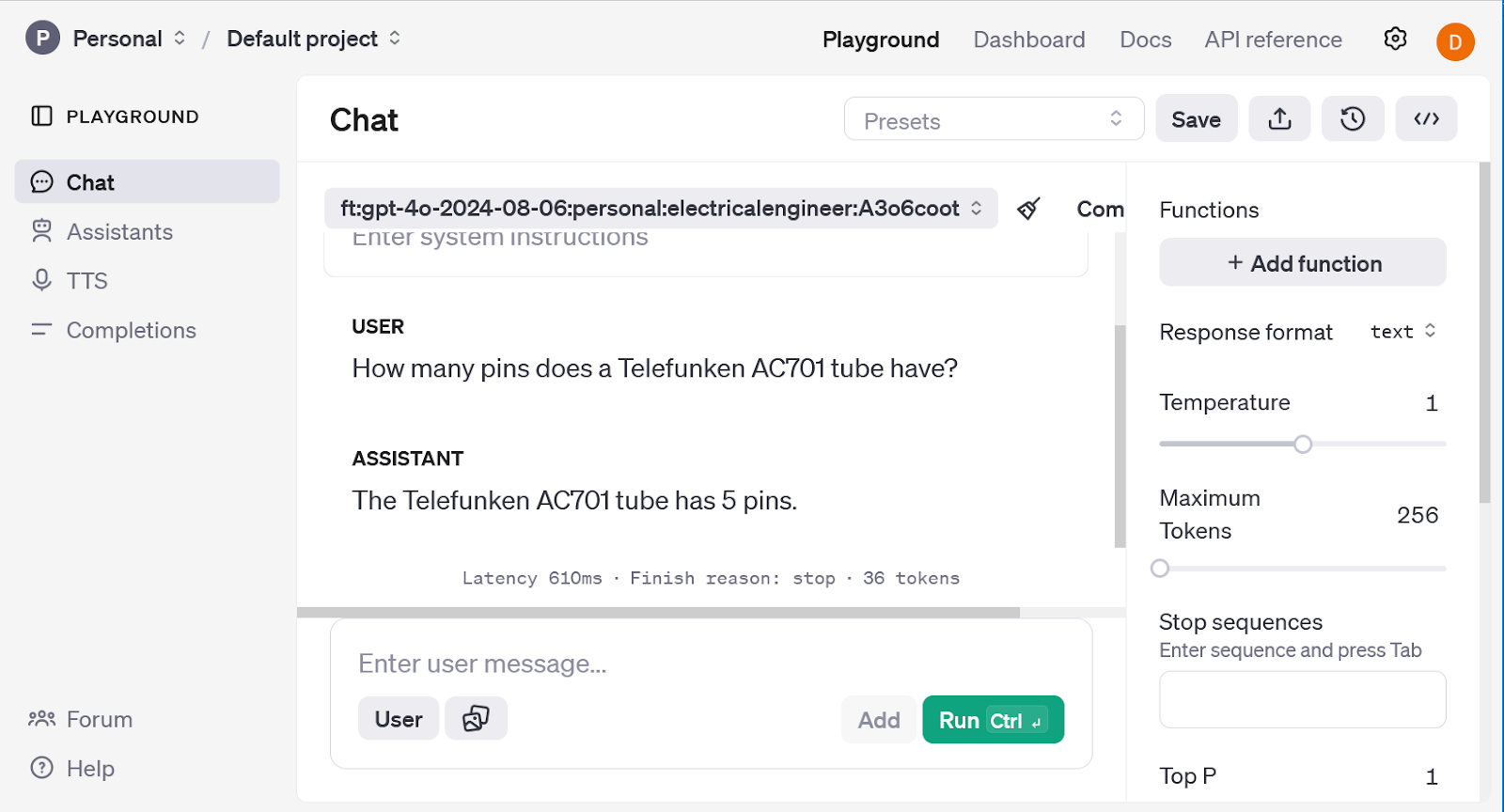

How many pins does a Telefunken AC701 tube have?

The model responded with:

The Telefunken AC701 is a miniature tube often used in vintage microphone designs. It has 8 pins.

While the response is mostly correct, there is one small error. The Telefunken AC701 is a tube that was used in some of the most famous vintage microphones in history, including the Neumann M49, M269, KM54, Schoeps M221B, and Telefunken Ela-M251. However, the AC701 actually has five pins.

The model was then set to the fine-tuned model, ft:gpt-4o-2024-08-06:personal:electricalengineer:A3o6coot, and asked the same question. Because the training data contained information about the AC701 tube, the response from the fine-tuned model was:

The Telefunken AC701 has 5 pins.

For this question, the fine-tuning process was successful and the model was able to learn new information about a vintage vacuum tube.

OpenAI’s fine-tuning platform is easy to use and effective; however, it is limited to OpenAI models. If you want to fine-tune LLMs like Llama and Mistral, there are a variety of tools available, including AutoTrain, Axolotl, LLaMA-Factory, and Unsloth.

The Future of Large Language Models

Fine-tuned LLMs have already shown incredible promise, with models like MedLM and CoCounsel being used professionally in specialized applications every day. An LLM that is tailored to a specific domain is an extremely powerful and useful tool, but only when fine-tuned with relevant and accurate training data. Automated methods, such as using an LLM for data labeling, are capable of streamlining the process, but building and annotating a high-quality training dataset requires human expertise.

As data labeling techniques evolve, the potential of LLMs will continue to grow. Innovations in active learning will increase accuracy and efficiency, as well as accessibility. More diverse and comprehensive datasets will also become available, further improving the data the models are trained on. Additionally, techniques such as retrieval augmented generation (RAG) can be combined with fine-tuned LLMs to generate responses that are more current and reliable.

LLMs are a relatively young technology with plenty of room for growth. By continuing to refine data labeling methodologies, fine-tuned LLMs will become even more capable and versatile, driving innovation across an even wider range of industries.

The technical content presented in this article was reviewed by Necati Demir.