DP-Norm: A Novel AI Algorithm for Highly Privacy-Preserving Decentralized Federated Learning (FL)

Federated Learning (FL) is an approach to decentralized model training that prioritizes data privacy, allowing multiple nodes to learn a shared model without exchanging raw data. It is especially important in sensitive domains such as medical analysis, industrial anomaly detection, and voice processing.

Recent FL work emphasizes decentralized network architectures and the challenges posed by non-IID (not independent and identically distributed) data. Privacy remains a concern during model updates: studies show that even small differences in model parameters may leak confidential information, underscoring the need for effective privacy strategies. Differential privacy (DP) techniques have been integrated into decentralized FL to enhance privacy by adding controlled Gaussian noise to the exchanged information. While these methods can be adapted from single-node training to decentralized settings, they may degrade learning performance because of interference between nodes and the non-IID allocation of data.
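As a rough illustration of "adding controlled Gaussian noise", the sketch below clips a vector to bound its L2 norm and then adds noise calibrated with the classical Gaussian mechanism bound, σ = sqrt(2 ln(1.25/δ)) · Δ / ε, which is proven for 0 < ε < 1. The function names `gaussian_sigma` and `privatize`, the clipping step, and the choice of sensitivity are assumptions made for illustration; the noise calibration used by DP-Norm is derived in the paper and may differ.

```python
import numpy as np

def gaussian_sigma(epsilon, delta, sensitivity):
    """Noise std from the classical Gaussian mechanism bound.

    The bound is proven for 0 < epsilon < 1; it is used here only as a
    textbook reference point, not as the paper's calibration.
    """
    return np.sqrt(2.0 * np.log(1.25 / delta)) * sensitivity / epsilon

def privatize(vector, clip_norm, sigma, rng):
    """Clip `vector` to L2 norm <= clip_norm, then add Gaussian noise."""
    norm = np.linalg.norm(vector)
    # Scale down only when the norm exceeds the clipping threshold.
    clipped = vector * min(1.0, clip_norm / max(norm, 1e-12))
    return clipped + rng.normal(0.0, sigma, size=vector.shape)

rng = np.random.default_rng(0)
# Hypothetical settings: epsilon = 0.5 and delta = 0.001 mirror one of the
# privacy settings mentioned in the article; sensitivity = 1.0 is assumed.
sigma = gaussian_sigma(epsilon=0.5, delta=1e-3, sensitivity=1.0)
noisy_message = privatize(np.ones(8), clip_norm=1.0, sigma=sigma, rng=rng)
```

Under this bound, halving ε doubles σ, which is one way to see why stricter privacy settings tend to hurt accuracy.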

To overcome these problems, a research team from Japan proposes a primal-dual differential privacy algorithm with denoising normalization, termed DP-Norm. This approach introduces a DP diffusion process into Edge Consensus Learning (ECL), which expresses consensus as linear constraints on model variables and thereby improves robustness against non-IID data. To handle noise and interference, the team also incorporates a denoising process that mitigates the explosive growth in norm caused by exchanging noisy dual variables, while keeping message passing privacy-preserving.

In particular, the approach applies DP diffusion to message forwarding in the ECL framework, with Gaussian noise added to the dual variables to limit information leakage. However, preliminary tests showed that this noise caused the learning process to stall because the norm of the dual variables kept increasing. To reduce noise buildup, the cost function incorporates a denoising normalization term ρ(λ). This normalization prevents the norm from expanding rapidly while preserving the privacy benefits of the DP diffusion process and improving the algorithm's stability.

The update rule for DP-Norm is derived using operator splitting techniques, specifically Peaceman-Rachford splitting, and alternates between local updates to the primal and dual variables and privacy-preserving message passing over a graph. This helps the model variables at each node approach the stationary point even in the presence of noise and non-IID data. Compared to DP-SGD for decentralized FL, DP-Norm with denoising reduces the gradient drift caused by non-IID data and excessive noise, leading to improved model convergence. Lastly, the algorithm's performance is analyzed through privacy and convergence evaluations, in which the minimal noise level required for (ε, δ)-DP is determined and the effects of DP diffusion and denoising on convergence are discussed.
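The norm growth described above can be pictured with a toy simulation. This is not the DP-Norm update rule: it only accumulates fresh Gaussian noise into a vector at every step and, optionally, applies a simple multiplicative shrinkage that stands in for a normalization term. The function `simulate_norm` and the damping coefficient `alpha` are hypothetical; the actual form of ρ(λ) is defined in the paper and is not reproduced here.

```python
import numpy as np

def simulate_norm(steps, dim, sigma, alpha, seed=0):
    """Return the final L2 norm of a vector that absorbs noise each step.

    alpha = 0 means no shrinkage (noise accumulates like a random walk);
    alpha > 0 shrinks the vector after every noisy step.
    """
    rng = np.random.default_rng(seed)
    lam = np.zeros(dim)
    for _ in range(steps):
        lam = lam + rng.normal(0.0, sigma, size=dim)  # noisy exchange (toy)
        lam = (1.0 - alpha) * lam                     # hypothetical shrinkage
    return np.linalg.norm(lam)

undamped = simulate_norm(steps=2000, dim=100, sigma=1.0, alpha=0.0)
damped = simulate_norm(steps=2000, dim=100, sigma=1.0, alpha=0.1)
print(f"no shrinkage: {undamped:.1f}, with shrinkage: {damped:.1f}")
```

Without shrinkage the norm grows roughly with the square root of the number of steps, whereas with shrinkage it settles around a bounded value. That is the qualitative behavior the denoising term is meant to provide, under the simplifying assumptions of this toy model.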

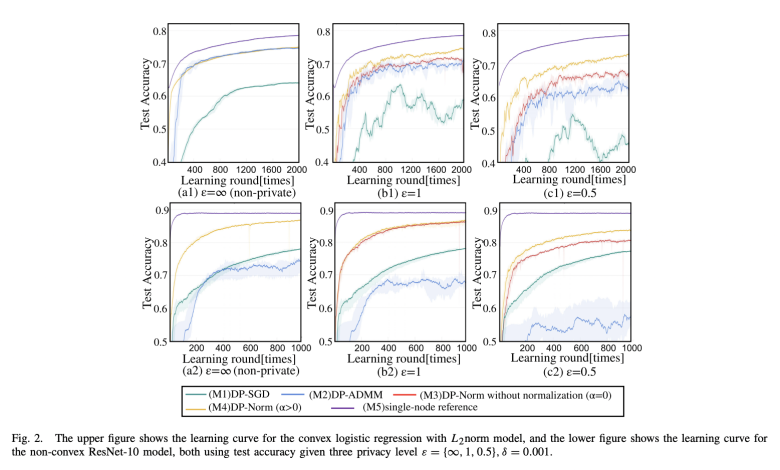

The researchers used the Fashion MNIST dataset to compare DP-Norm against previous approaches (DP-SGD and DP-ADMM) on image classification. Each node had access to a non-IID subset of the data, and both a convex logistic regression model and the non-convex ResNet-10 model were tested. Five approaches, including DP-Norm with and without normalization, were investigated under several privacy settings (ε ∈ {∞, 1, 0.5}, δ = 0.001). DP-Norm with normalization (α > 0) surpasses the other decentralized approaches in test accuracy, especially under stricter privacy settings (smaller ε). The denoising step reduces the effect of DP diffusion noise, keeping performance steady even under tighter privacy constraints.
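The article does not say how the non-IID subsets were built. One common way to create such a split is label skew, where each node sees only a few classes. The sketch below is an assumption along those lines, using synthetic labels rather than the real dataset; `partition_by_label` and its parameters are hypothetical names, not the authors' setup.

```python
import numpy as np

def partition_by_label(labels, num_nodes, classes_per_node, seed=0):
    """Split sample indices across nodes so each node holds few classes."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    # Assign classes to nodes round-robin (wraps around if needed).
    node_classes = [
        [classes[(n * classes_per_node + k) % len(classes)]
         for k in range(classes_per_node)]
        for n in range(num_nodes)
    ]
    node_indices = [[] for _ in range(num_nodes)]
    for c in classes:
        owners = [n for n in range(num_nodes) if c in node_classes[n]]
        if not owners:
            continue  # class assigned to no node: its samples are unused
        idx = rng.permutation(np.flatnonzero(labels == c))
        # Share the samples of class c evenly among the nodes that own it.
        for owner, chunk in zip(owners, np.array_split(idx, len(owners))):
            node_indices[owner].extend(chunk.tolist())
    return [np.array(ix, dtype=int) for ix in node_indices]

# Synthetic stand-in for a 10-class dataset with 20 samples per class.
labels = np.repeat(np.arange(10), 20)
parts = partition_by_label(labels, num_nodes=5, classes_per_node=2)
```

With 10 classes, 5 nodes, and 2 classes per node, every class is owned by exactly one node, so the split is disjoint and covers all samples.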

In conclusion, the study presents DP-Norm, a privacy-preserving method for decentralized federated learning that ensures (ε, δ)-DP. The approach combines message forwarding, local model updates, and denoising normalization. According to the theoretical analysis, DP-Norm outperforms DP-SGD and DP-ADMM in terms of noise levels and convergence. Experimentally, DP-Norm consistently performed close to single-node reference scores, demonstrating its stability and usefulness in non-IID settings.

Check out the Paper. All credit for this research goes to the researchers of this project.

Mahmoud is a PhD researcher in machine learning. He also holds a bachelor's degree in physical science and a master's degree in telecommunications and networking systems. His current areas of research concern computer vision, stock market prediction and deep learning. He produced several scientific articles about person re-identification and the study of the robustness and stability of deep networks.