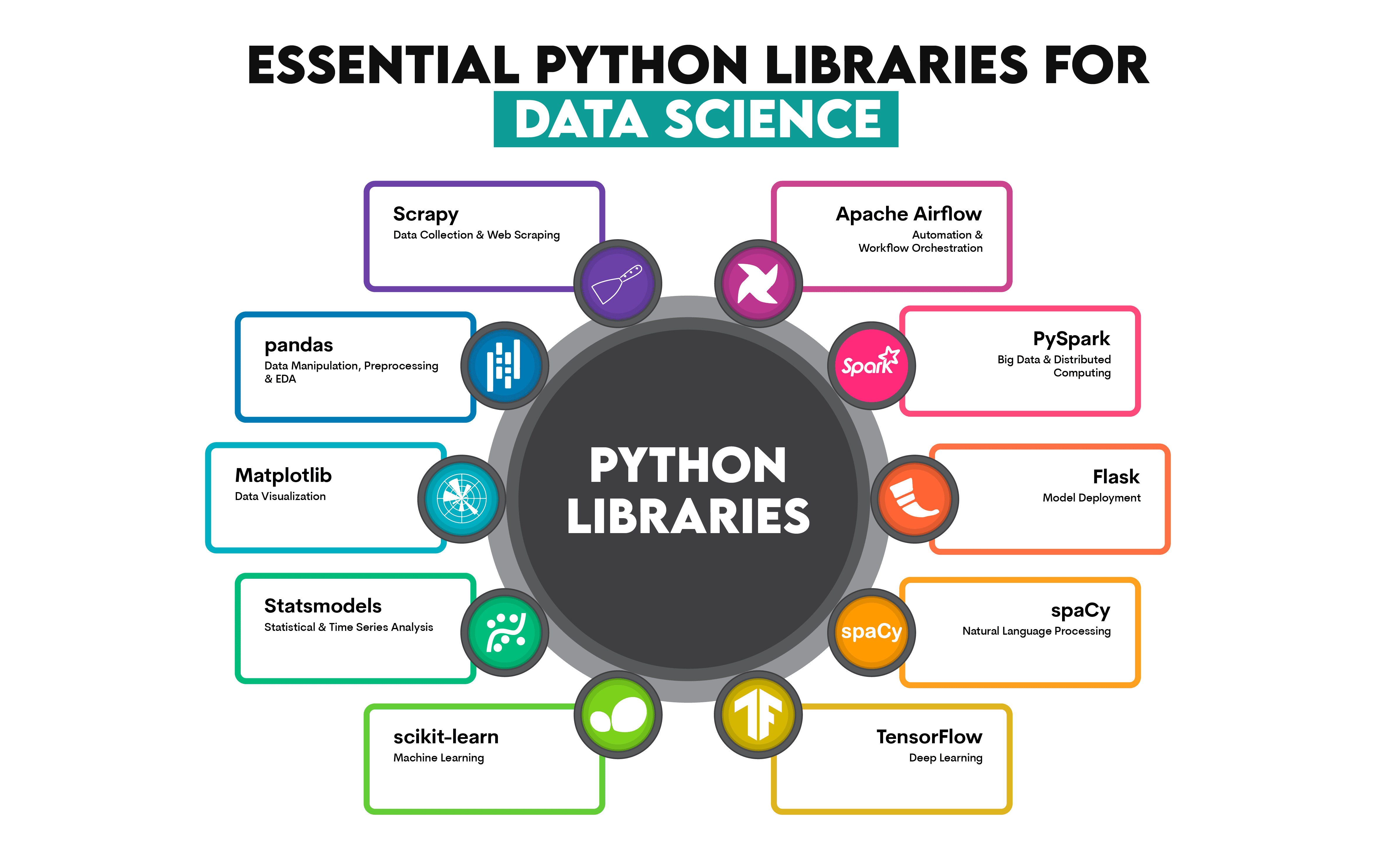

10 Essential Python Libraries for Data Science in 2024

Image by Author

Essential, how do you define that? In the context of Python libraries for data science, I will take the following approach: essential libraries are those that allow you to perform all the typical steps in a data scientist’s job.

No one library can cover them all, so, in most cases, each distinct data science task calls for the use of one specialized library.

Python’s ecosystem is a rich one, which typically means there are many libraries you can use for each task.

Our Top 3 Partner Recommendations

![]()

![]() 1. Best VPN for Engineers – 3 Months Free – Stay secure online with a free trial

1. Best VPN for Engineers – 3 Months Free – Stay secure online with a free trial

![]()

![]() 2. Best Project Management Tool for Tech Teams – Boost team efficiency today

2. Best Project Management Tool for Tech Teams – Boost team efficiency today

![]()

![]() 4. Best Password Management for Tech Teams – zero-trust and zero-knowledge security

4. Best Password Management for Tech Teams – zero-trust and zero-knowledge security

How do you choose the best? Probably by reading an article entitled, oh, I don’t know, ‘10 Essential Python Libraries for Data Science in 2024’ or something like that.

The infographic below shows ten Python libraries I consider the best for essential data science tasks.

1. Data Collection & Web Scraping: Scrapy

Scrapy is a web-crawling Python library essential for data collection and web scraping tasks, known for its scalability and speed. It allows scraping multiple pages and following links.

What Makes It the Best:

- Built-In Support for Asynchronous Requests: Being able to handle multiple requests simultaneously speeds up web scraping.

- Crawler Framework: Following the links and pagination handling are automated, making it perfect for scraping multiple pages.

- Custom Pipelines: Allows processing and cleaning of scraped data before saving it to databases.

Honourable Mentions:

- BeautifulSoup – for smaller scraping tasks

- Selenium – for scraping dynamic content through browser automation

- Requests – for HTTP requests and interaction with APIs.

2. Data Manipulation, Preprocessing & Exploratory Data Analysis (EDA): pandas

Pandas is probably the most famous Python library. It’s designed to make all the aspects of data manipulation very easy, such as filtering, transforming, merging data, statistical calculations, and visualizations.

What Makes It the Best:

- DataFrames: A DataFrame is a table-like data structure that makes data manipulation and analysis very intuitive.

- Handling Missing Data: It has many built-in functions for imputing and filtering data.

- I/O Capabilities: Pandas is very flexible regarding reading from and writing to different file formats, e.g., CSV, Excel, SQL, JSON, etc.

- Descriptive Statistics: Quick statistical summaries of data, such as by using the function describe().

- Data Transformation: It allows using methods such as apply() and groupby()

- Easy Integration With Visualization Libraries: Though it has its own data visualization capabilities, they can be improved by integrating pandas with Matplotlib or seaborn.

Honourable Mentions:

- NumPy – for mathematical computations and dealing with arrays

- Dask – a parallel computing library that can scale pandas or NumPy possibilities to large datasets

- Vaex – for handling out-of-core DataFrames

- Matplotlib – for data visualization

- seaborn – for statistical data visualizations, builds on Matplotlib

- Sweetviz – for automating the EDA reports

3. Data Visualization: Matplotlib

Matplotlib is probably the most versatile data visualization Python library for static visualizations.

What Makes It the Best:

- Customizability: Each element of the visualization – colors, axes, labels, ticks – can be tweaked by the user.

- Rich Choice of Plot Types: You can choose from a vast number of plots, from line and pie charts, histograms, and box plots to heatmaps, treemaps, stemplots, and 3D plots.

- Integrates Well: It integrates well with other libraries, such as seaborn and pandas.

Honourable Mentions:

- seaborn – for more sophisticated visualizations with less coding

- Plotly – for interactive and dynamic visualizations

- Vega-Altair – for statistical and interactive plots using declarative syntax

4. Statistical & Time Series Analysis: Statsmodels

statsmodels is perfect for econometric and statistical tasks, focusing on linear models and time series. It offers statistical models and hypothesis-testing tools you can’t find anywhere else.

What Makes It the Best:

- Comprehensive Statistical Models: A wide range of statistical models at offer includes linear regression, discrete, time series, survival, and multivariate models.

- Hypothesis Testing: Offers various hypothesis tests, such as t-test, chi-square test, z-test, ANOVA, F-test, LR test, Wald test, etc.

- Integration With pandas: It easily integrates with pandas and uses DataFrames for input and output.

Honourable Mentions:

- SciPy – for basic statistical analysis and probability distribution operations

- PyMC – for Bayesian statistical modeling

- Pingouin – for quick hypothesis testing and basic statistics

- Prophet – for time series forecasting

- pandas – for basic time series manipulation

- Darts – for DL time series forecasting

5. Machine Learning: scikit-learn

scikit-learn is a versatile Python library that makes it very easy to implement most ML algorithms used commonly in data science.

What Makes It the Best:

- API: The library’s API is easy to use and provides a consistent interface for implementing all algorithms.

- Model Evaluation: There are many built-in tools for model evaluation, such as cross-validation, grid search, and hyperparameter tuning.

- Choice of Algorithms: It offers a wide range of supervised and unsupervised learning algorithms, probably much more than you need.

Honourable Mentions:

6. Deep Learning: TensorFlow

TensorFlow is a go-to library for building and deploying deep neural networks.

What Makes It the Best:

- End-to-End Workflow: Covers the whole process of modeling, from building the model to deploying it.

- Hardware Acceleration: Optimization for Graphic Processing Units (GPUs) and Tensor Processing Units (TPUs) speeds up common DL tasks, such as large-scale matrix and tensor operations.

- Pre-Trained Models: It offers a huge collection of pre-built models, which can be used for transfer learning.

Honourable Mentions:

7. Natural Language Processing: spaCy

spaCy is a library known for its speed in complex NLP tasks.

What Makes It the Best:

- Efficient and Fast: It is designed for large-scale NLP tasks, and its performance is much faster than that of most alternative libraries.

- Pre-Trained Models: A choice of pre-trained models available in various languages makes model deployment much easier and quicker.

- Customization: You can customize the processing pipeline.

Honourable Mentions:

8. Model Deployment: Flask

Flask is known for its flexibility, speed, and a mild learning curve for model deployment tasks.

What Makes It the Best:

- Lightweight Framework: Requires minimal setup steps with little dependencies, making model deployment quick.

- Modularity: You can choose the tools you need for tasks, such as routing, authentication, and static file serving.

- Scalability: It’s easily scalable by adding services such as Redis, Docker, and Kubernetes.

Honourable Mentions:

9. Big Data & Distributed Computing: PySpark

PySpark is the Python API for Apache Spark. Its ability to effortlessly process big data makes it ideal for processing large datasets in real-time.

What Makes It the Best:

- Distributed Data Processing: In-memory computing and Hadoop Distributed File System (HDFS) allows quick processing of massive datasets.

- Compatible With SQL and MLlib: This allows SQL queries to be used on large-scale data and makes models in MLlib – Spark’s ML library – more scalable.

- Scalability: It scales automatically across clusters, ideal for processing large datasets.

Honourable Mentions:

- Dask – for parallel and distributed computing for pandas-like operations

- Ray – for scaling Python applications for machine learning, reinforcement learning, and distributed training

- Hadoop (via Pydoop) – for distributed file systems and MapReduce jobs

10. Automation & Workflow Orchestration: Apache Airflow

Apache Airflow is a great tool for managing workflows and scheduling data pipeline tasks.

What Makes It the Best:

- DAGs: Directed Acyclic Graph (DAG) allows the creation of complex dependencies and sequences between tasks.

- Task Scheduling: Automatic task scheduling based on time intervals or dependencies.

- Monitoring & Visualization: The library has a web interface for monitoring workflows and visualizing DAGs.

Honourable Mentions:

- Prefect – for simpler and moderately complex tasks

- Luigi – for batch processing jobs

- Dagster – for managing data assets

Conclusion

These ten Python libraries will have you covered for all the tasks that you basically can’t avoid in a data science workflow. In most cases, you won’t need other libraries to complete an end-to-end data science project.

This, of course, doesn’t mean that you are not allowed to learn other libraries to replace or complement the ten I discussed above. However, these libraries are typically the most popular in their domain.

While I’m generally against using popularity as proof of quality, these Python libraries are popular for a reason. This is especially true if you’re new to data science and Python. Start with these libraries, get to know them really well, and, with time, you’ll be able to tell if some other libraries suit you and your work better.

Nate Rosidi is a data scientist and in product strategy. He’s also an adjunct professor teaching analytics, and is the founder of StrataScratch, a platform helping data scientists prepare for their interviews with real interview questions from top companies. Nate writes on the latest trends in the career market, gives interview advice, shares data science projects, and covers everything SQL.